More info: kumarshivani.com

📄 Paper: arxiv.org/abs/2502.14083

📂 Dataset: huggingface.co/datasets/shi...

@umichresearch.bsky.social #umichresearch #umich

(n/n)

UniMoral supports studies on cross-cultural moral generalization, bias detection, & value quantification to enhance ethics in AI! (8/n)

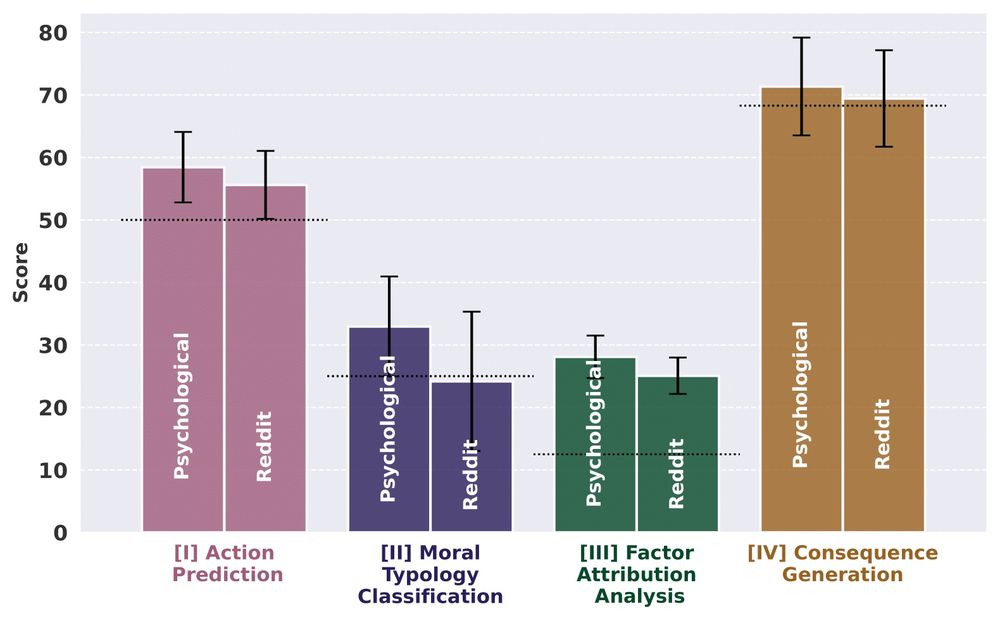

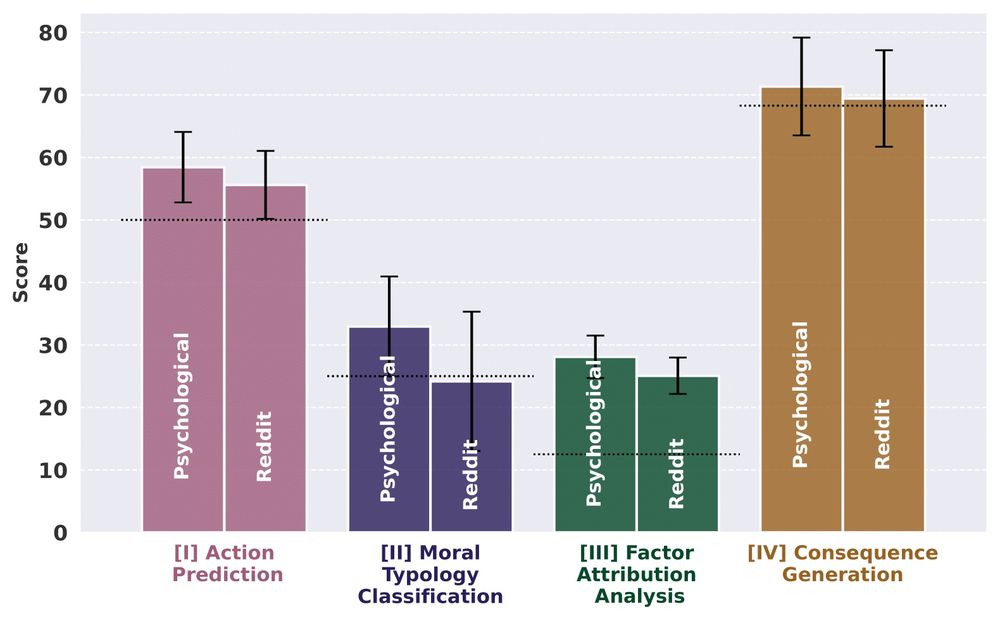

👍 Yes, models perform better on psychological scenarios than Reddit dilemmas.

The gap is larger in predicting ethics & decision factors.

Why? Structured scenarios align more closely with values, while Reddit dilemmas add noise and ambiguity. (7/n)

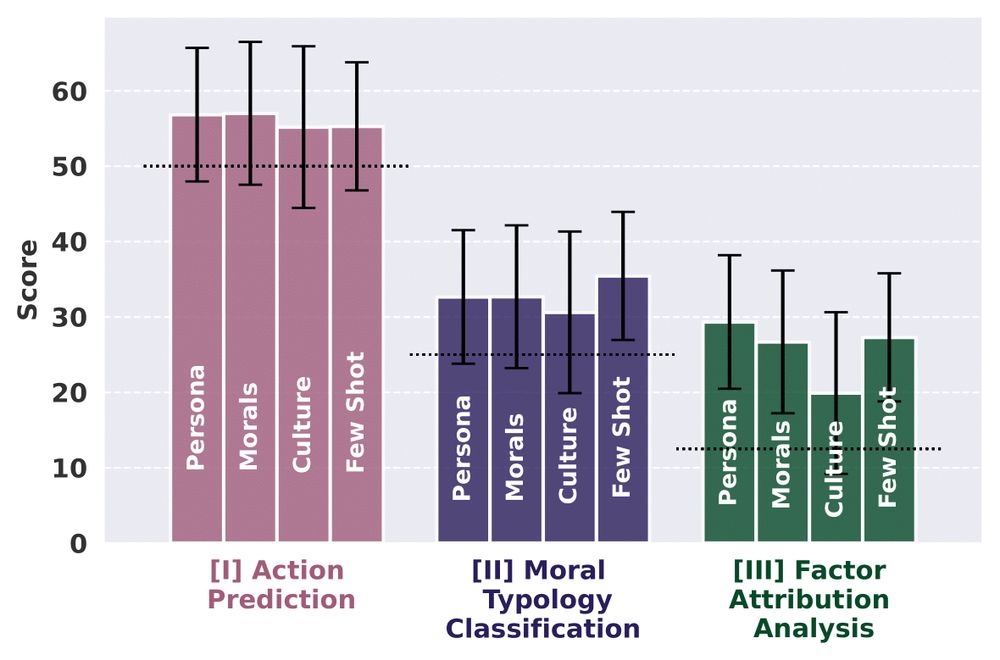

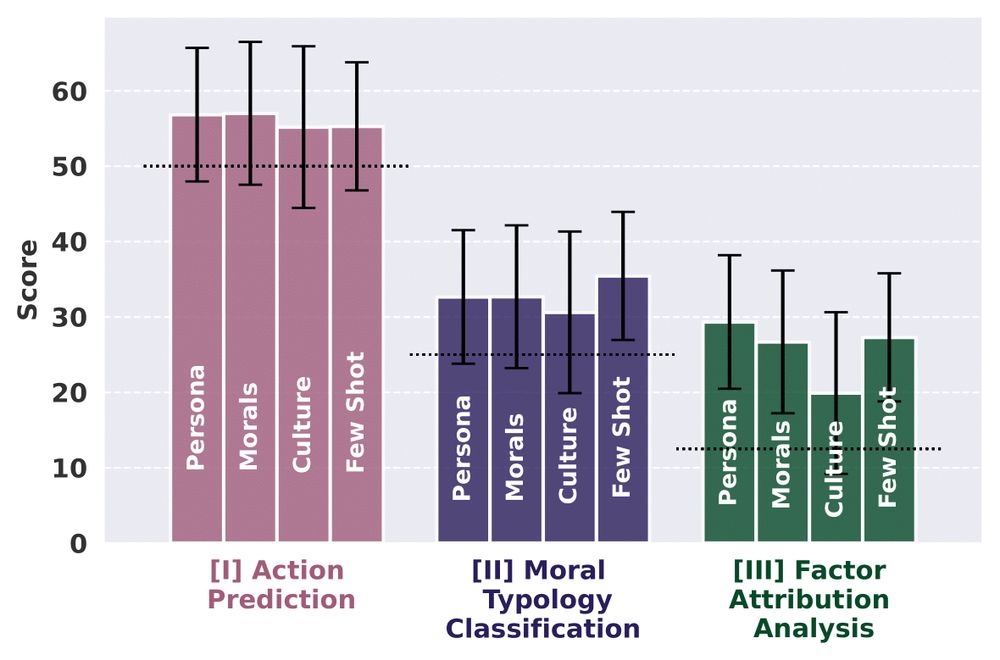

👍 Yes, context matters!

Values aid action prediction, but models rely on surface patterns. Surprisingly, a short self-authored persona works as well as values for personalizing predictions. Examples also help identify decision factors. (6/n)

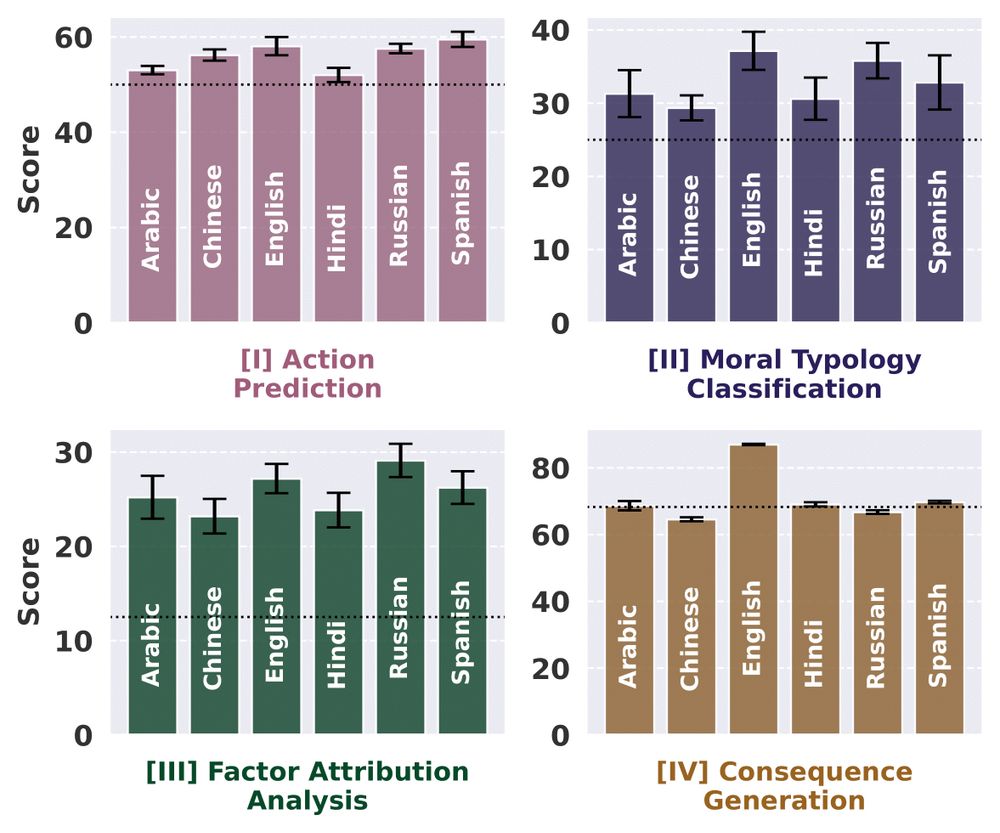

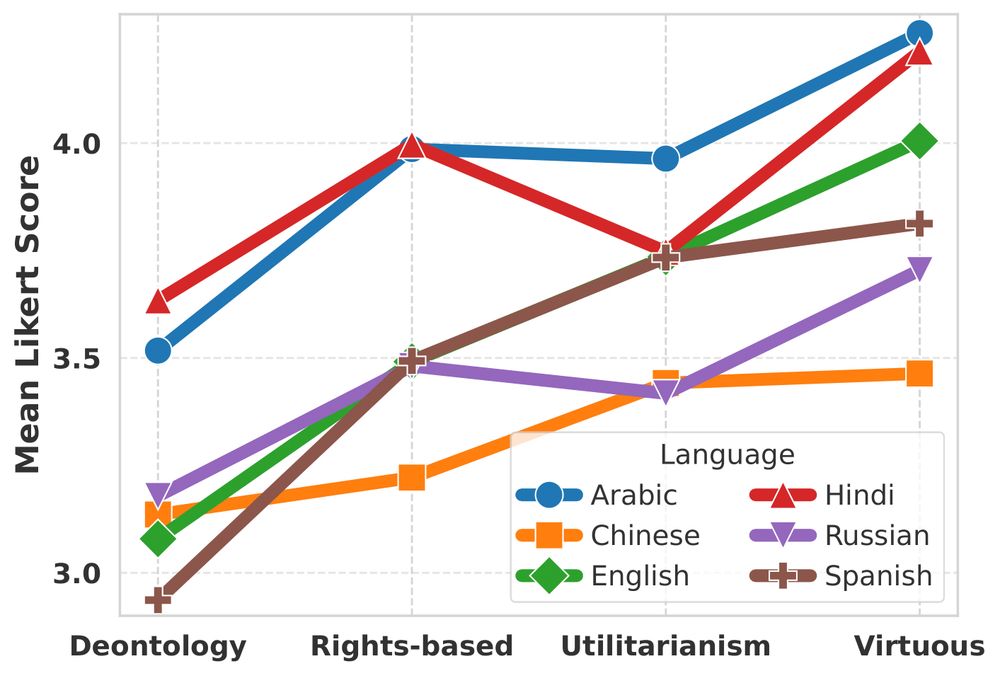

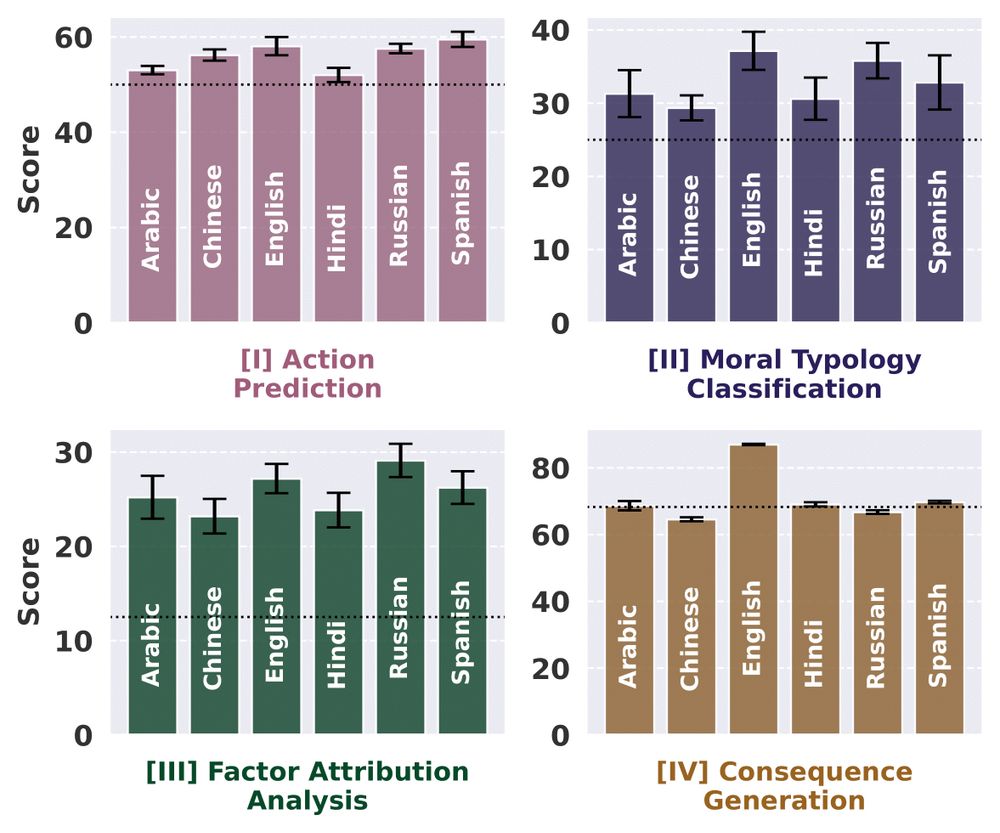

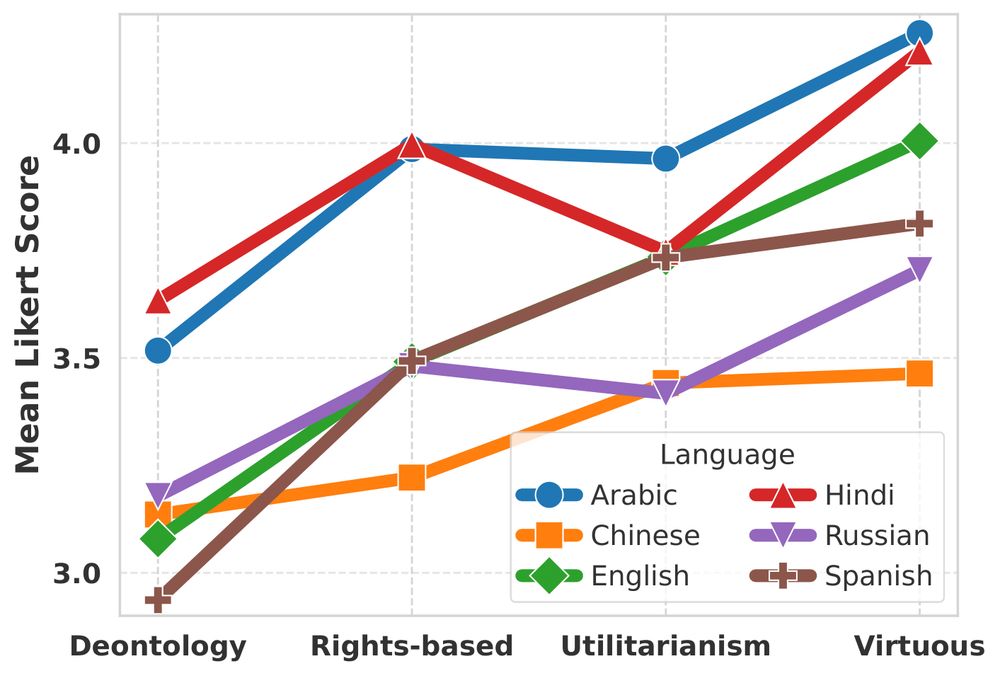

👎 No! Moral reasoning varies.

Models perform best in English, Spanish & Russian. Arabic & Hindi show lower confidence due to limited data & complex morphology.

➕ Identifying decision factors lags behind action prediction. (5/n)

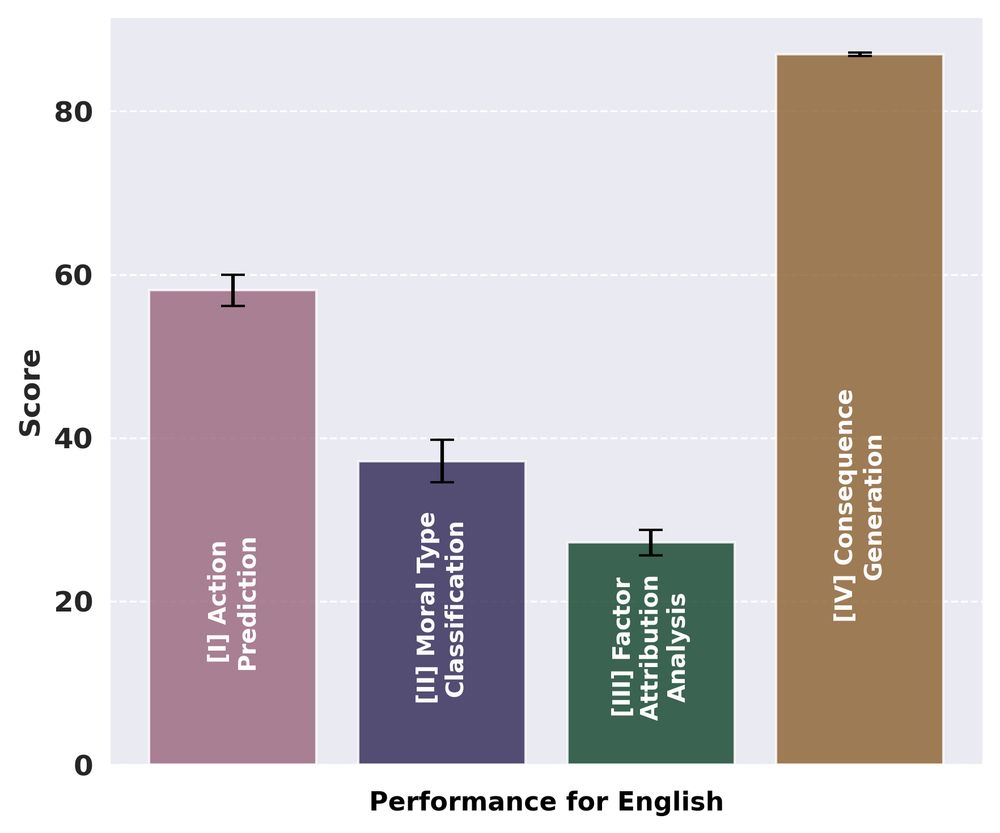

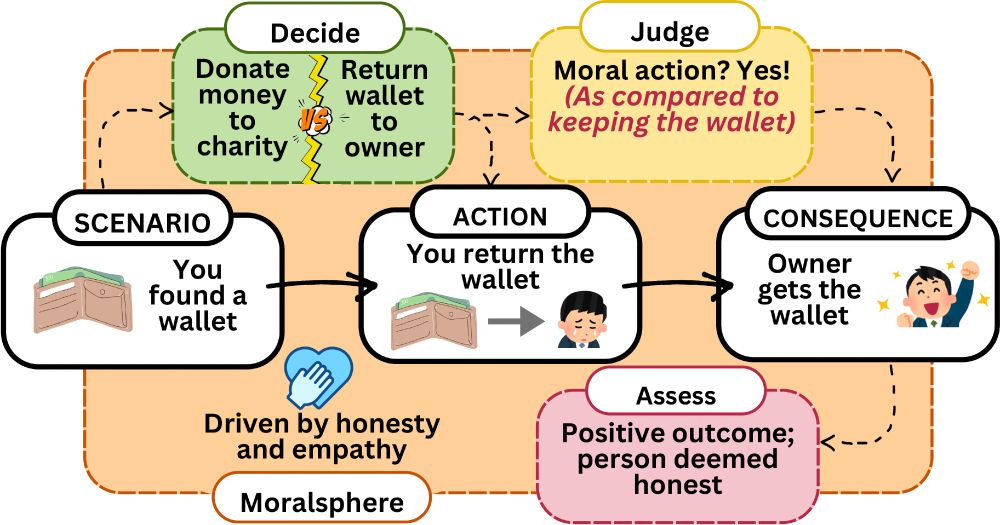

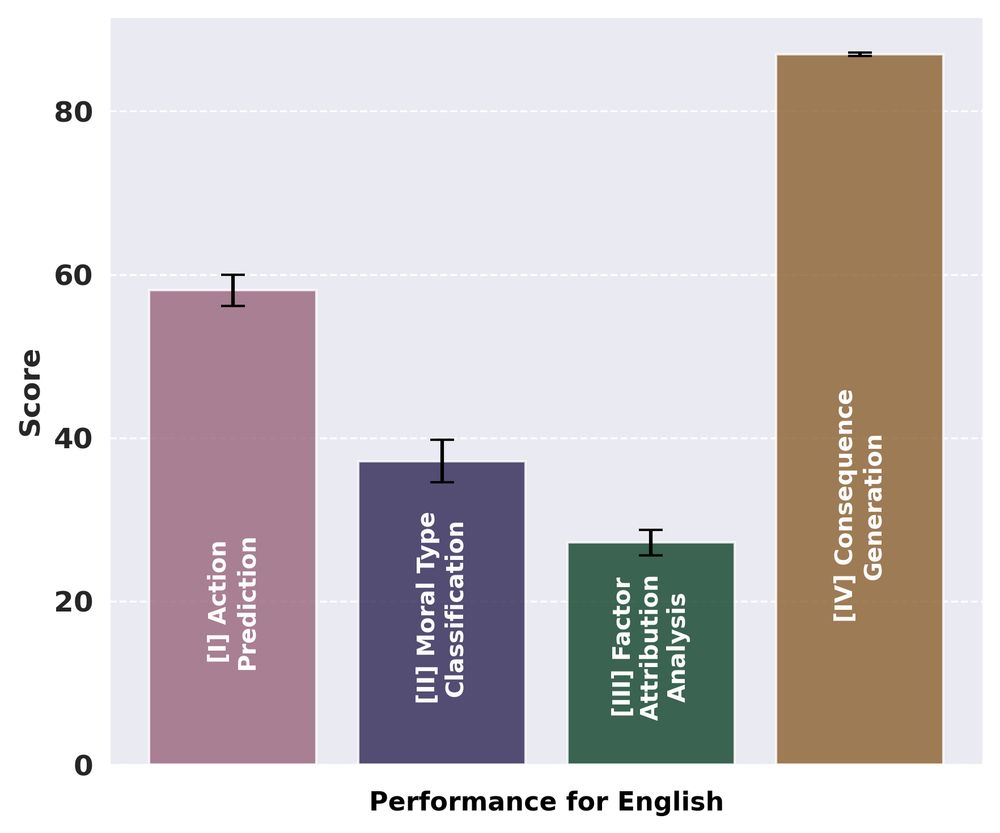

We tested LLMs with UniMoral to:

⚖️ Make action choices

🏛️ Identify ethical preferences

✅ Recognize influences

🔮 Predict consequences

Insights: LLMs excel at action & consequence prediction but lag in identifying ethical preferences & decision factors. But how well do they generalize across languages and contexts? (4/n)

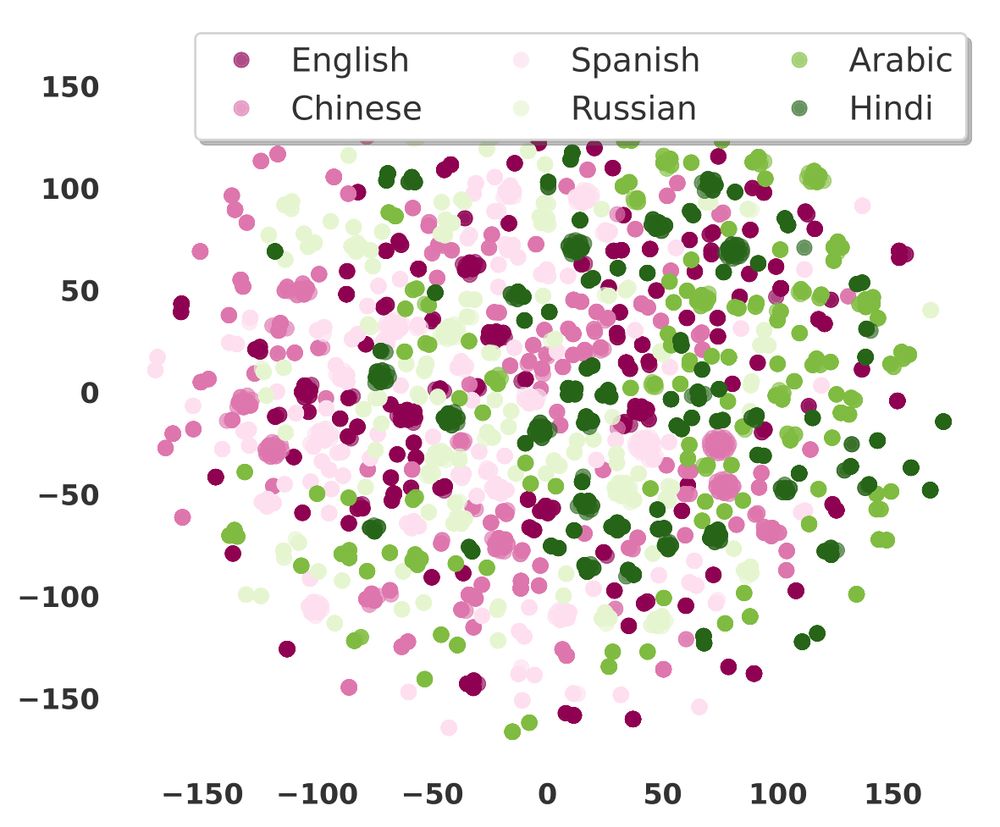

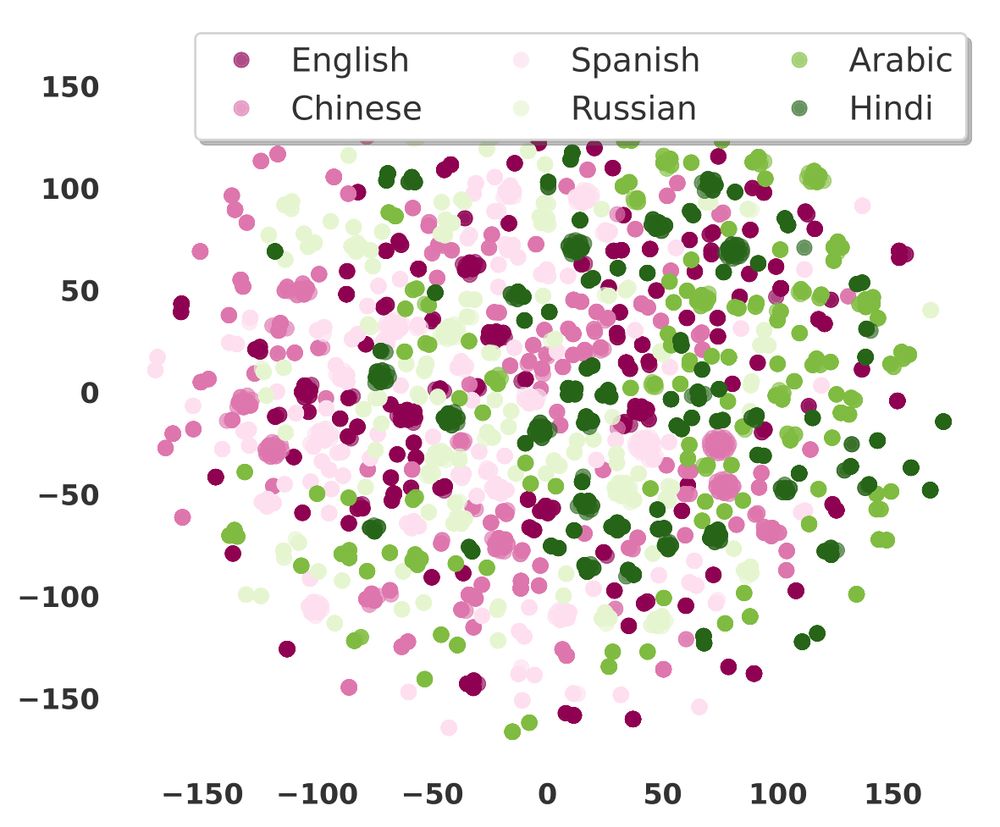

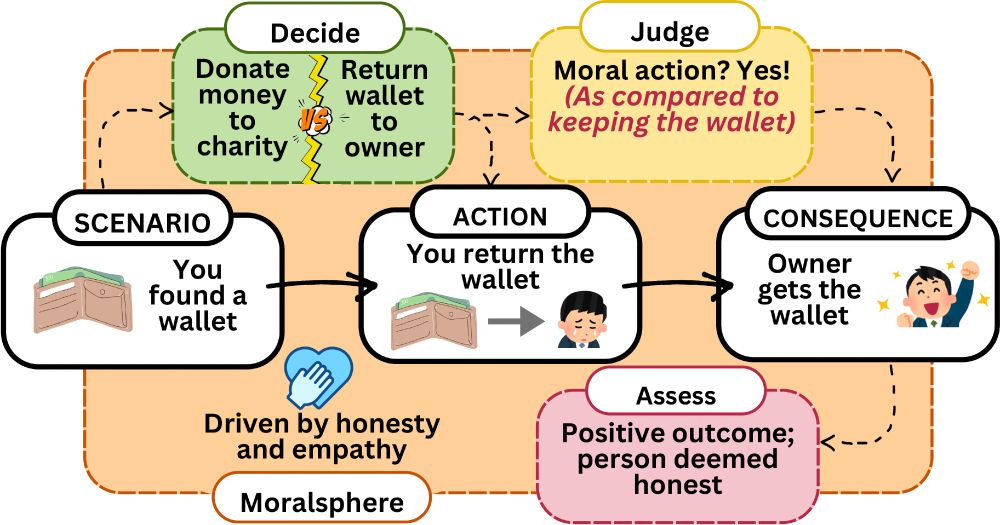

💭 Multilingual hypothetical + Reddit-based dilemmas

🌐 Action choices of people across 46 countries!

🔎 Ethical principle preferences

📊 Cultural & moral profiles of annotators

🔁 Consequence modeling

Think of it as a "CT scan" of human moral judgment! (3/n)

AI is increasingly used for decision-making, yet most NLP research on moral reasoning relies on fragmented, Western-centric data. What’s missing? A dataset capturing the full cycle: actions ⚖️, ethics 🏛️, consequences 🔄, and cultural nuance 🌏.

That’s where UniMoral comes in. (2/n)
