Prev: Ai2, Google Research, MSR

Evaluating language technologies, regularly ranting, and probably procrastinating.

https://sites.google.com/view/shailybhatt/

They adapt jargon 😀

BUT shift all other metrics in one direction (e.g., shorter length, less formal) ☹️

‼️ TLDR: homogenised writing across cultures, not adaptation.

[7/11]

🖼️ Computer Vision loves figures (shocking!)

🧑🏫 Education has the most distinctive vocabulary

📈 ML/NLP emphasize "quantitative evidence" (we love our bold numbers!)

[6/11]

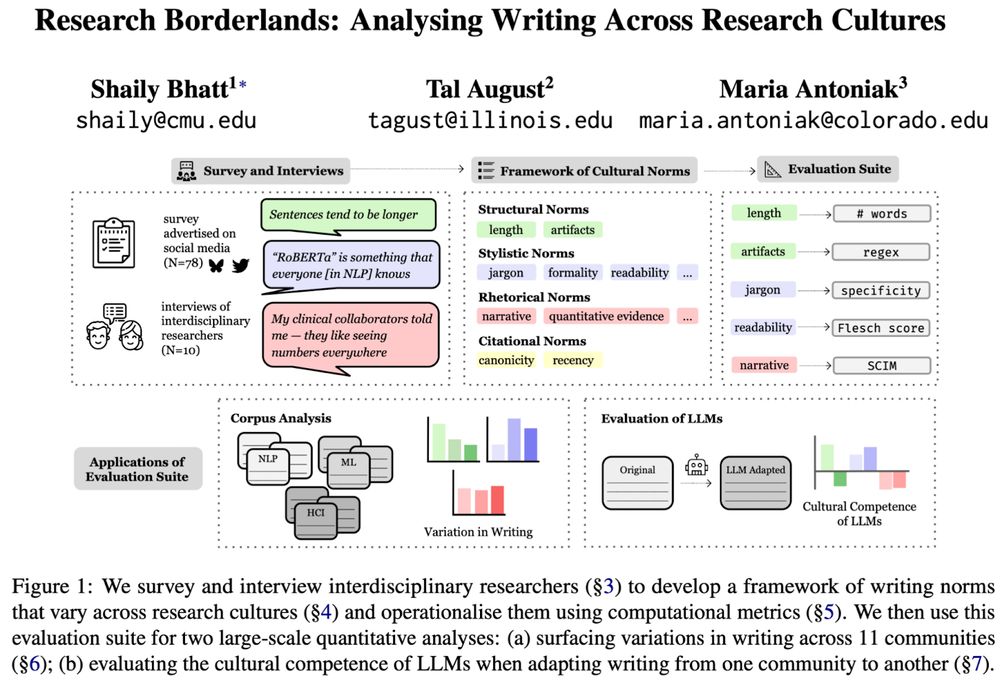

This suite can be used to analyse both human-written research papers and LLM-generated text.

[5/11]

🏗️ Structural (length, figures/tables)

🕺 Stylistic (jargon, formality, readability)

⚖️ Rhetorical (evidence, framing, narrative flow)

📖 Citational (who is cited, how)

[4/11]

As an interviewee said:

🗣️ “There's a way to write ... that makes it way more likely a paper with the same results gets accepted or not.”

What are these tacit cultural norms, and can LLMs follow them?

[2/11]

🚩 Tired of “cultural” evals that don't consult people?

We engaged with interdisciplinary researchers to identify & measure ✨cultural norms✨ in scientific writing, and show that ❗LLMs flatten them❗

📜 arxiv.org/abs/2506.00784

[1/11]

#pittsburghstorm #36hrslater

(The ghost town is my street).

@aaclmeeting.bsky.social

This underscores the importance of evaluating models in user-facing tasks.

[6/7]

✨ names (Raj🇮🇳, Tommy🇺🇲, Ali🇦🇫)

✨ artefacts (temple🇮🇳, bao🇨🇳)

and factually varying words:

✨ parliaments (Lok Sabha🇮🇳, Bundestag🇩🇪)

✨ political parties (BJP🇮🇳, NDP🇨🇦, PDP🇳🇬)

✨ polarised issues (Brexit🇬🇧, gun🇺🇲)

etc.

[5/7]

✨ Statistically significant variance across nationalities, i.e., non-trivial adaptations.

✨ Higher variance in stories than in QA, as the latter is more factual.

✨ Larger interquartile range in QA, due to greater topic diversity.

[4/7]

In this work, we take the first steps towards asking whether LLMs can cater to diverse cultures in *user-facing generative* tasks.

[1/7]