Also blogging about AI research at magazine.sebastianraschka.com.

Kimi K2 is based on the DeepSeek V3/R1 architecture, and here's a side-by-side comparison.

In short, Kimi K2 is a slightly scaled-up DeepSeek V3/R1; the gains come from the data and training recipes. Hopefully, we will see some details on those soon, too.
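
To get a rough sense of what "slightly scaled" means, here is a small sketch that compares a few headline numbers. The values reflect the publicly released model configs as I understand them and should be treated as approximate; please double-check them against the official model cards. The dictionary keys and the `compare` helper are hypothetical names used only for illustration.

```python
# Approximate headline numbers (assumption: taken from the public model cards/configs;
# verify before relying on them). Parameter counts are in billions.
DEEPSEEK_V3 = {"total_params_b": 671, "active_params_b": 37, "routed_experts": 256, "attention_heads": 128}
KIMI_K2 = {"total_params_b": 1000, "active_params_b": 32, "routed_experts": 384, "attention_heads": 64}  # ~1T total


def compare(base, other):
    """Hypothetical helper: ratio of each value in `other` relative to `base`."""
    return {key: other[key] / base[key] for key in base}


ratios = compare(DEEPSEEK_V3, KIMI_K2)
for key, ratio in ratios.items():
    print(f"{key:>16}: {ratio:.2f}x")
```

Under these numbers, the total parameter count grows by roughly 1.5x through more routed experts, while the number of attention heads is halved and the active parameter count per token stays in the same ballpark.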

Gated DeltaNet hybrids (Qwen3-Next, Kimi Linear), text diffusion, code world models, and small reasoning transformers.

🔗 magazine.sebastianraschka.com/p/beyond-sta...
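
To make the first of these topics a bit more concrete, here is a minimal NumPy sketch of the gated delta rule, the recurrence at the core of Gated DeltaNet layers. It is a simplified, token-by-token version under my own assumptions: a single head, one scalar gate `alpha` and one scalar write strength `beta` per token, and L2-normalized queries and keys. Real implementations use chunked, parallel kernels, and the hybrid models interleave such layers with regular attention layers. The function name is hypothetical.

```python
import numpy as np


def gated_delta_rule(q, k, v, alpha, beta):
    """Sequential form of the gated delta rule (simplified sketch).

    S_t = alpha_t * S_{t-1} (I - beta_t k_t k_t^T) + beta_t v_t k_t^T,  o_t = S_t q_t
    q, k: (T, d_k); v: (T, d_v); alpha, beta: (T,) with values in (0, 1].
    """
    T, d_k = k.shape
    d_v = v.shape[1]
    S = np.zeros((d_v, d_k))  # fixed-size memory state, independent of sequence length
    outputs = np.zeros((T, d_v))
    for t in range(T):
        # Assumption: L2-normalize queries and keys, as is common in DeltaNet-style layers
        q_t = q[t] / np.linalg.norm(q[t])
        k_t = k[t] / np.linalg.norm(k[t])
        # Decay the old state (alpha), erase what was stored under k_t (delta rule),
        # then write the new value v_t under key k_t (beta controls the write strength)
        S = alpha[t] * (S - beta[t] * np.outer(S @ k_t, k_t)) + beta[t] * np.outer(v[t], k_t)
        outputs[t] = S @ q_t
    return outputs


rng = np.random.default_rng(0)
T, d_k, d_v = 6, 4, 3
out = gated_delta_rule(
    rng.normal(size=(T, d_k)), rng.normal(size=(T, d_k)), rng.normal(size=(T, d_v)),
    alpha=np.full(T, 0.9), beta=np.full(T, 0.5),
)
print(out.shape)  # (6, 3)
```

Unlike softmax attention, the state `S` does not grow with the sequence length, which is why these layers are attractive for long contexts.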

Link to the full article: magazine.sebastianraschka.com/p/the-big-ll...

(Source: huggingface.co/MiniMaxAI/Mi...)

🔗 github.com/rasbt/LLMs-f...

🔗 github.com/rasbt/LLMs-f...

Will add this for multi-head latent, sliding, and sparse attention as well.
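
As an illustration of one of the variants mentioned, sliding-window attention, here is a minimal NumPy sketch (single head, no batching). The function names and the `window` parameter are hypothetical. Each token attends only to itself and the previous `window - 1` tokens, so the KV cache for such a layer only needs to hold the last `window` entries.

```python
import numpy as np


def sliding_window_causal_mask(seq_len, window):
    """Boolean mask: True where query position i may attend to key position j."""
    i = np.arange(seq_len)[:, None]
    j = np.arange(seq_len)[None, :]
    return (j <= i) & (j > i - window)  # causal AND within the local window


def sliding_window_attention(q, k, v, window):
    """Scaled dot-product attention restricted to a causal sliding window."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores = np.where(sliding_window_causal_mask(len(q), window), scores, -np.inf)
    # Numerically stable softmax; masked positions become exp(-inf) = 0
    weights = np.exp(scores - scores.max(axis=-1, keepdims=True))
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v


print(sliding_window_causal_mask(5, 3).astype(int))
```

Each row of the mask contains at least the diagonal entry, so the row-wise maximum is always finite and the softmax is well defined.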

A few months ago, the Hierarchical Reasoning Model (HRM) made big waves in the AI research community because it showed really good performance on the ARC challenge despite its small size of only 27M parameters. (That's about 22x smaller than the smallest Qwen3 model, Qwen3 0.6B.)
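
The "about 22x" figure follows directly from the two parameter counts:

$$
\frac{0.6 \times 10^{9}}{27 \times 10^{6}} = \frac{600}{27} \approx 22.2
$$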

sebastianraschka.com/blog/2021/dl...

If you are new to reinforcement learning, this article has a generous intro section (PPO, GRPO, etc.).

Also, I cover 15 recent articles focused on RL and reasoning.

🔗 magazine.sebastianraschka.com/p/the-state-...
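
As a small taste of one of the methods mentioned, here is a minimal sketch of the group-relative advantage used in GRPO. Instead of a learned value function (the critic in PPO), each sampled answer's reward is normalized against the other answers sampled for the same prompt. The function name is hypothetical, and I use the population standard deviation here; implementations differ (some use the sample standard deviation, and some variants drop the scaling entirely).

```python
from statistics import mean, pstdev


def grpo_advantages(rewards, eps=1e-8):
    """Group-relative advantages for the answers sampled for a single prompt."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # assumption: population std; eps avoids division by zero
    return [(r - mu) / (sigma + eps) for r in rewards]


# Example: 4 sampled answers to one prompt, rewarded 1.0 if correct, else 0.0
print([round(a, 3) for a in grpo_advantages([1.0, 0.0, 0.0, 1.0])])  # [1.0, -1.0, -1.0, 1.0]
```

Correct answers get a positive advantage and incorrect ones a negative advantage. If all answers receive the same reward, every advantage is zero, so that prompt contributes no learning signal.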

Why? Because I think 1B & 3B models are great for experimentation, and I wanted to share a clean, readable implementation for learning and research: huggingface.co/rasbt/llama-...

- Python & PyTorch still dominate

- 80%+ use NVIDIA GPUs, but no multi-node setups 🤔

- LoRA is still popular for training efficiency, but full finetuning is gaining traction.

Surprisingly, CNNs still lead in computer vision (CV) competitions.
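
Why LoRA is efficient can be seen from a quick parameter count. Instead of updating a full weight matrix W (d_out x d_in), LoRA freezes W and trains two low-rank factors A (rank x d_in) and B (d_out x rank). In the sketch below, the hidden size of 4096 and rank 8 are hypothetical example values, and the helper names are for illustration only.

```python
def full_finetune_params(d_out, d_in):
    """Trainable parameters when updating the full weight matrix W."""
    return d_out * d_in


def lora_params(d_out, d_in, rank):
    """Trainable parameters for LoRA: A is (rank x d_in), B is (d_out x rank); W stays frozen."""
    return rank * d_in + d_out * rank


d = 4096  # hypothetical hidden size of one square projection matrix
full = full_finetune_params(d, d)
lora = lora_params(d, d, rank=8)
print(full, lora, f"{lora / full:.2%}")  # 16777216 65536 0.39%
```

For this single matrix, LoRA trains less than 0.4% of the parameters that full finetuning would update, which also shrinks the optimizer state accordingly.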

1. Code and train your own LLM to really understand the fundamentals

2. Train models more conveniently using production-ready libraries

3. Learn about the big-picture considerations for real-world LLM/AI apps

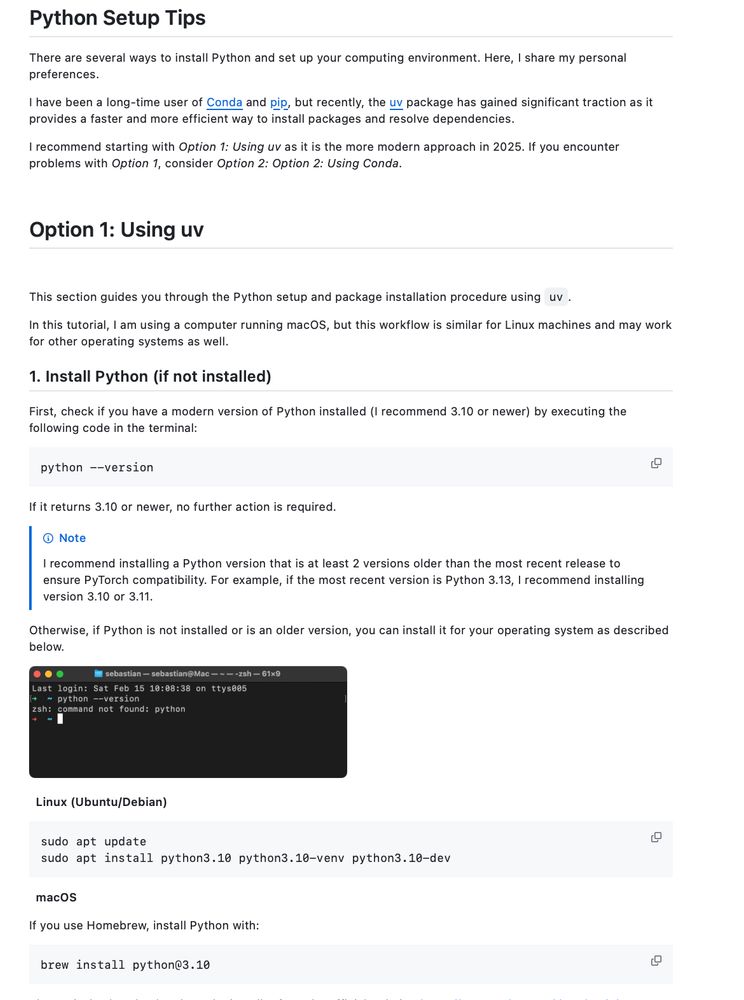

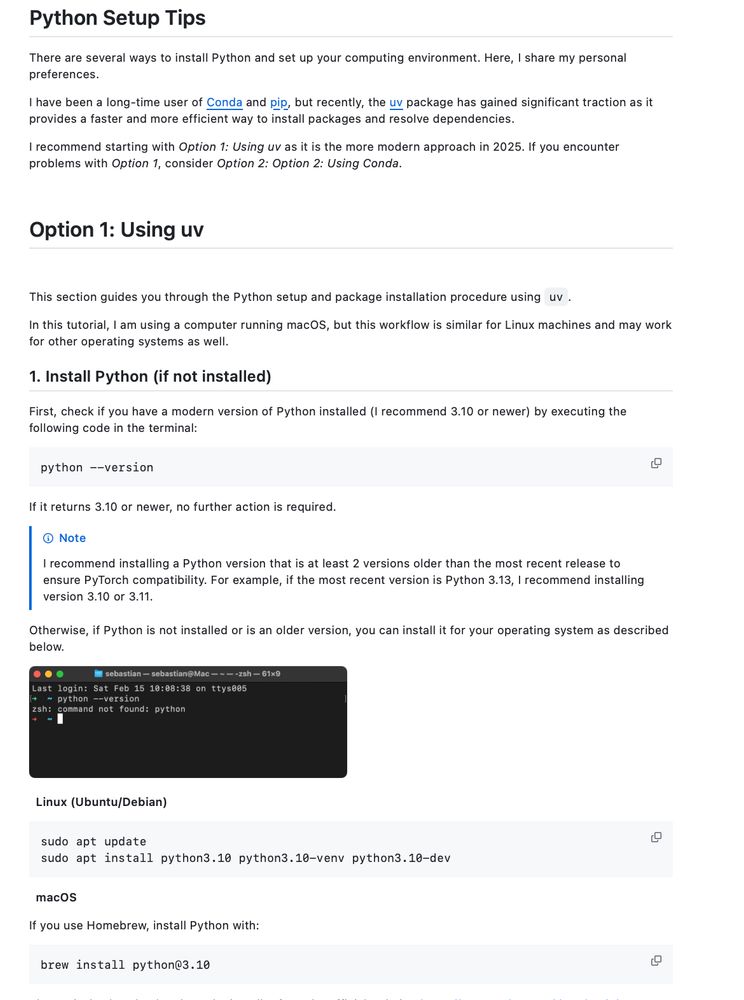

Here's my go-to recommendation for using uv + venv in Python projects for faster installs and better dependency management: github.com/rasbt/LLMs-f...

(Any additional suggestions?)
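
For readers who haven't used uv yet, a generic version of this workflow looks roughly like the commands below. This is a minimal sketch that assumes uv is already installed and that the project ships a `requirements.txt`; it is not necessarily identical to the steps in the linked guide.

```bash
# Create a virtual environment in ./.venv
uv venv

# Activate it (macOS/Linux; on Windows use .venv\Scripts\activate)
source .venv/bin/activate

# Install the project's dependencies into the active environment
uv pip install -r requirements.txt
```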

Anyone else familiar with projects that tried this?
