Gratitude to the entire CTRL-Labs team and partners at Reality Labs, Meta.

Special shout out to @jseely.bsky.social, Michael Mandel, Sean Bittner, Alexandre Gramfort, Adam Berenzweig, Patrick Kaifosh, and TR Reardon!

And that's not all!

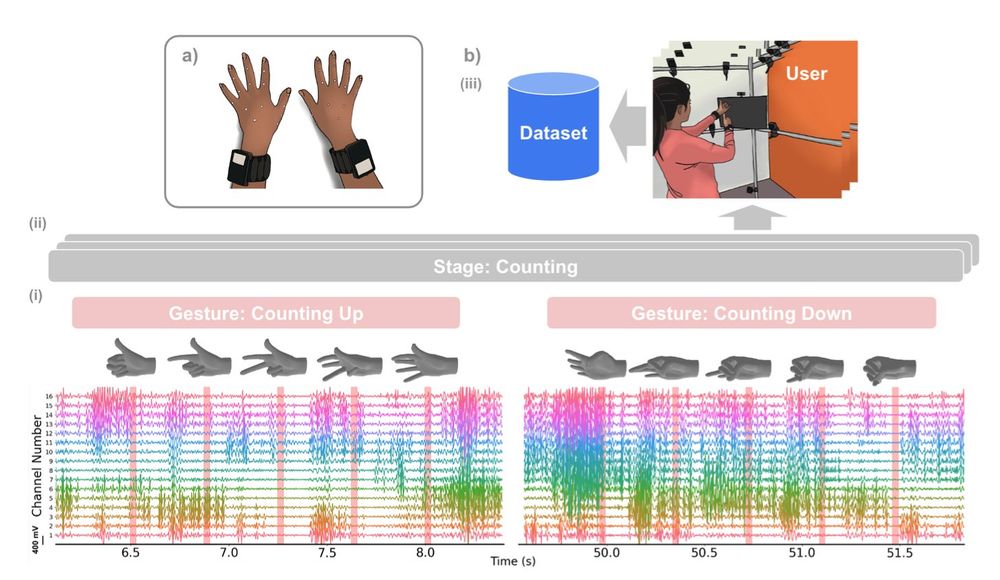

Here's another large dataset we just released - emg2pose - focused on hand pose estimation using sEMG. emg2pose has 193 participants over 370 hours & >50 behavioral categories w/ hand motion capture ground truth.

arxiv.org/abs/2412.02725

github.com/facebookrese...

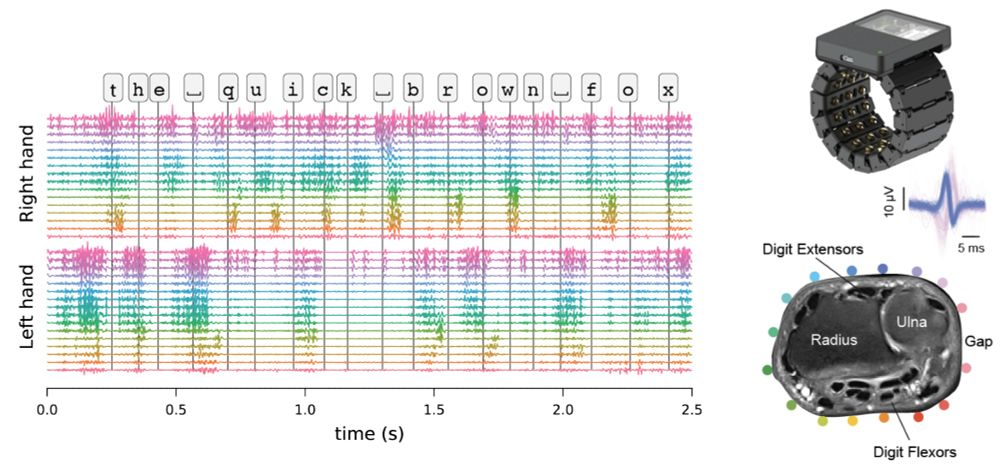

Our baseline model built using standard techniques from the Speech Recognition literature shows that, with some personalization on top of a model pretrained with 100 subjects, we can quite accurately enable typing with sEMG, eventually without a physical keyboard.

Towards that goal, we now release emg2qwerty - a wrist sEMG dataset collected while touch typing on a QWERTY keyboard. With 108 subjects, 1,135 sessions, 346 hours, and 5.2 million keystrokes, this is quite large by neuroscience standards.

arxiv.org/abs/2410.20081

github.com/facebookrese...

But can we further push the boundaries of what is possible with sEMG?

We imagine many other applications for sEMG, including the ability to manipulate objects in AR or write full messages as quickly as—or faster than—typing on a keyboard, with very little effort.

And at Connect 2024, we showed how one can use an EMG wristband with Orion—our AR glasses product prototype—for seamless and comfortable control over digital content to swipe, click, and scroll while keeping your arm resting comfortably by your side.

Earlier this year, we unveiled a wristband device that can be seamlessly worn, non-invasively sense muscle activations in the wrist and hand via surface EMG, and achieve out-of-the-box generalization across individuals for multiple tasks including handwriting!

The papers - emg2qwerty and emg2pose - have been accepted to the NeurIPS 2024 Datasets and Benchmarks Track.

If you’re in Vancouver next week, drop by our posters on Fri Dec 13 at 11am PST or at the Meta booth. We have some cool demos to show too!

neurips.cc/virtual/2024...

nips.cc/virtual/2024...
