Huginn-3.5B reasons implicitly in latent space 🧠

Unlike O1 and R1, latent reasoning doesn’t need special chain-of-thought training data, and doesn't produce extra CoT tokens at test time.

We trained on 800B tokens 👇

Huginn-3.5B reasons implicitly in latent space 🧠

Unlike O1 and R1, latent reasoning doesn’t need special chain-of-thought training data, and doesn't produce extra CoT tokens at test time.

We trained on 800B tokens 👇

We spent the last year (actually a bit longer) training an LLM with recurrent depth at scale.

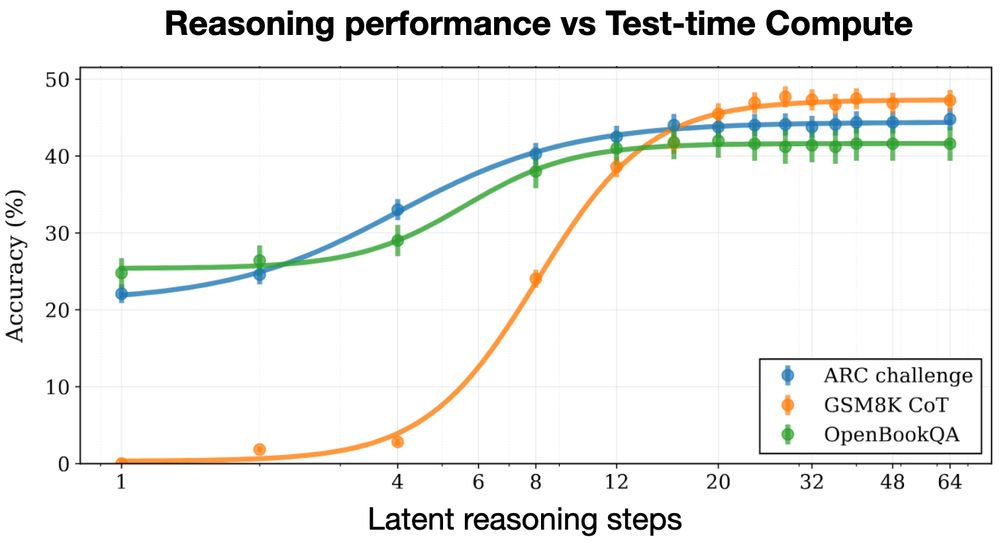

The model has an internal latent space in which it can adaptively spend more compute to think longer.

I think the tech report ...🐦⬛

We spent the last year (actually a bit longer) training an LLM with recurrent depth at scale.

The model has an internal latent space in which it can adaptively spend more compute to think longer.

I think the tech report ...🐦⬛