Personal website: https://www.santiviquez.com

Join us next week to ask Sebastian Raschka anything!

📅 Date: February 11th

⏰ Time: 10:00 AM – 11:00 AM EST

📍 Register: lu.ma/evqa4rct

Four years ago, at NannyML, we invented the first version of Confidence-Based Performance Estimation. Today, a paper about it was published in JAIR.

JAIR: jair.org/index.php/ja...

ArXiv: arxiv.org/abs/2407.08649
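The sketch below is not the method from the paper or NannyML's implementation. It is a minimal illustration of the core idea behind confidence-based performance estimation for a binary classifier. It assumes the model's scores are calibrated probabilities P(y = 1 | x) and that predictions use a 0.5 threshold. Under those assumptions, a positive prediction with score p is correct with probability p, and a negative one with probability 1 - p. Summing these probabilities gives an expected confusion matrix without any ground-truth labels. The function names are hypothetical.

```python
from typing import Dict, Sequence


def expected_confusion_matrix(probas: Sequence[float], threshold: float = 0.5) -> Dict[str, float]:
    """Hypothetical helper: expected confusion matrix from calibrated P(y=1 | x) scores."""
    cm = {"tp": 0.0, "fp": 0.0, "tn": 0.0, "fn": 0.0}
    for p in probas:
        if p >= threshold:
            # Predicted positive: correct with probability p, wrong with probability 1 - p.
            cm["tp"] += p
            cm["fp"] += 1.0 - p
        else:
            # Predicted negative: correct with probability 1 - p, wrong with probability p.
            cm["tn"] += 1.0 - p
            cm["fn"] += p
    return cm


def estimated_accuracy(probas: Sequence[float], threshold: float = 0.5) -> float:
    """Hypothetical helper: label-free accuracy estimate, valid only if scores are calibrated."""
    if not probas:
        raise ValueError("need at least one probability")
    cm = expected_confusion_matrix(probas, threshold)
    # Each sample contributes exactly 1 to the total, so this equals len(probas).
    n = sum(cm.values())
    return (cm["tp"] + cm["tn"]) / n
```

For example, `estimated_accuracy([0.9, 0.2, 0.6, 0.4])` returns about 0.725. If the scores are miscalibrated, the estimate inherits that error.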

Hopefully, it’ll take you a lot less time after reading my explanation in "The Little Book of ML Metrics".

www.nannyml.com/metrics?via=...

Yesterday was my first day at culinary school!

Every person you admire was once considered cringe by someone.

Writers, YouTubers, founders, musicians, you name it. They all got to where they are because they constantly shared their work with the world. Constantly.

So far, we have these two:

1. AI Engineering by Chip Huyen

2. Hands-On Generative AI with Transformers and Diffusion Models by Omar Sanseviero and gang

Any other suggestions?

A big thanks to @carloscapote.bsky.social and Michael Erasmus for their excellent explanations in today's meeting.

Our NannyML open-source package reached 2,000 GitHub stars! 🌟

Slowly but steadily 💪

Log Loss (aka cross-entropy loss)!
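For a binary classifier, log loss averages the negative log-likelihood of the true labels: LogLoss = -(1/N) Σ [yᵢ·log(pᵢ) + (1 − yᵢ)·log(1 − pᵢ)], where pᵢ is the predicted probability of class 1. A minimal plain-Python sketch, assuming 0/1 labels; the clipping constant `eps` is an illustrative choice to avoid log(0), not a value taken from the book.

```python
import math
from typing import Sequence


def log_loss(y_true: Sequence[int], y_prob: Sequence[float], eps: float = 1e-15) -> float:
    """Binary log loss (cross-entropy), averaged over samples."""
    if not y_true or len(y_true) != len(y_prob):
        raise ValueError("inputs must be non-empty and the same length")
    total = 0.0
    for y, p in zip(y_true, y_prob):
        # Clip so an over-confident prediction of exactly 0 or 1 never hits log(0).
        p = min(max(p, eps), 1.0 - eps)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(y_true)
```

`log_loss([1, 0], [0.5, 0.5])` equals ln 2 (about 0.693), while a single confident mistake such as `log_loss([1], [0.01])` costs about 4.6. That steep penalty for confident errors is what sets log loss apart from accuracy.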

---

If you're interested in more metric descriptions like this one, check out the book I'm writing: The Little Book of ML Metrics.

GitHub Repo: github.com/NannyML/The-...

Pre-order the book: https://www.nannyml.com/metrics

In the coming weeks, I'll be working on the ranking chapter for "The Little Book of ML Metrics", and I want to make sure I'm not missing any popular ranking/recsys metrics.

1. "AI Engineering: Building Applications with Foundation Models" (Huyen, 2024): amzn.to/4gtQgJo

We’ll probably read it in the study group "AI from Scratch."

Today, I found it and realized I’ve accomplished all of them.

Take a look at this demo created by my colleague @anopsy.bsky.social

There are two univariate distributions (top and right), which remain almost unchanged during the whole process.

It was an amazing one! @carloscapote.bsky.social walked us through Chapter 5: Pretraining on Unlabeled Data.

Next week, we’ll take a short break, but we’ll be back after the holidays to finish Chapters 6 and 7 💪

Check out other metrics at:

github.com/NannyML/The-...

But even more exciting than the stars is that for the past week, every day I've been waking up to at least two PRs from the community helping me write the book 🔥

We started putting everything together and implemented a GPT model.

My favorite part of the book is the way Sebastian outlined and structured it to progressively build on previous sections. That alone makes it worth every penny.

Watch out for underperforming segments 👀

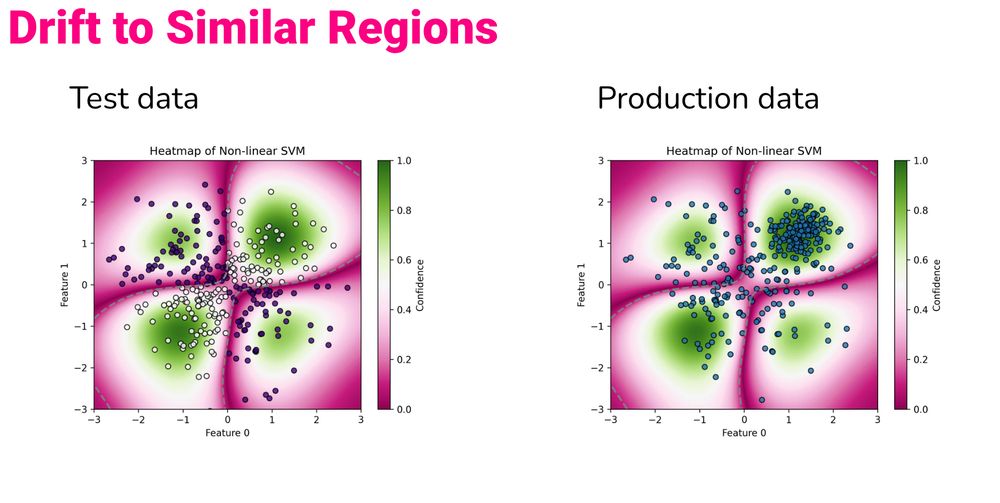

This happens when the production data moves to regions where the model is more confident in its predictions.

github.com/NannyML/The-...

I will never forget his answer…

“We can’t, we don’t know how to do it.”

Also Richard: Writes a 552-page-long book

I feel like the intuition behind Q, K, and V matrices in self-attention finally clicked for many of us.
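For anyone who wants to poke at that intuition, here is a minimal single-head scaled dot-product self-attention in plain Python. It is not the code from Sebastian's book, which builds attention in PyTorch. This sketch has no causal mask, no biases, and no multiple heads, and the weight matrices passed in are hypothetical toy values. Each input row (token embedding) is projected into a query, a key, and a value. Scores are query·key / √d_k, softmax turns each row of scores into mixing weights, and the output is the weighted sum of values.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(row) for row in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Matrix:
    q = matmul(x, w_q)  # queries: what each token is looking for
    k = matmul(x, w_k)  # keys: what each token can be matched on
    v = matmul(x, w_v)  # values: the content that actually gets mixed
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # one row of scores per query token
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)  # each output row is a weighted average of value rows
```

With three 2-dimensional tokens and identity matrices for `w_q`, `w_k`, and `w_v`, each output row is a convex combination of the input rows. GPT-style models additionally mask future positions before the softmax, which is omitted here.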
