1️⃣ Learn more about reasoning shortcuts (RSs): why they appear, their root causes, and how to mitigate them: arxiv.org/abs/2305.19951

2️⃣ Make NeSy models aware of their shortcuts: arxiv.org/abs/2402.12240

Website: unitn-sml.github.io/rsbench/

Paper: openreview.net/forum?id=5Vt...

GitHub: github.com/unitn-sml/rs...

1️⃣ Configurable: set up tasks with YAML/JSON files.

2️⃣ Intuitive: straightforward to use:

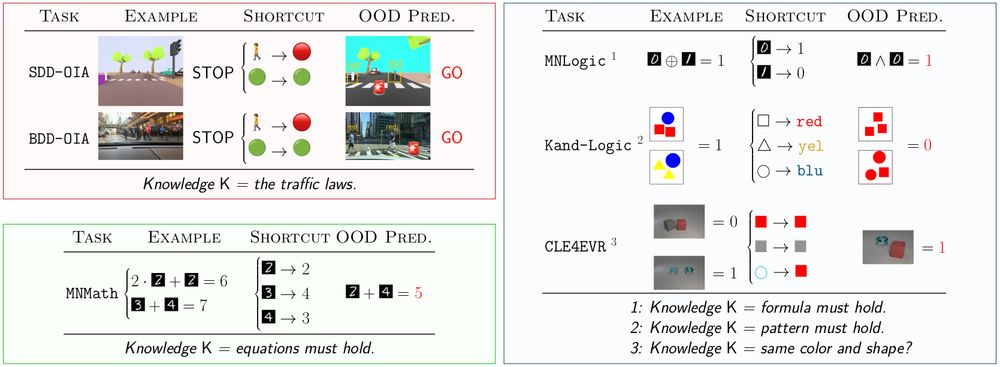

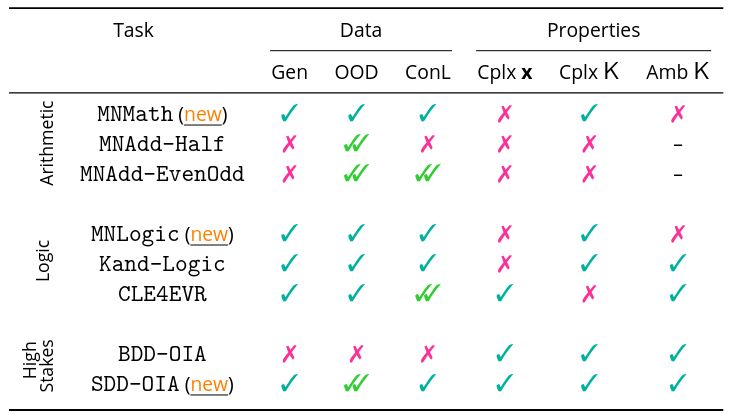

3 new benchmarks:

🔢 MNMath for arithmetic reasoning

🛃 MNLogic for SAT-like problems

🚖 SDD-OIA, a synthetic self-driving task!

They can all be made easier or harder with our data generator!

- 🌍 Evaluate concepts in in- and out-of-distribution scenarios.

- 🎯 Ground-truth concept annotations are available for all tasks.

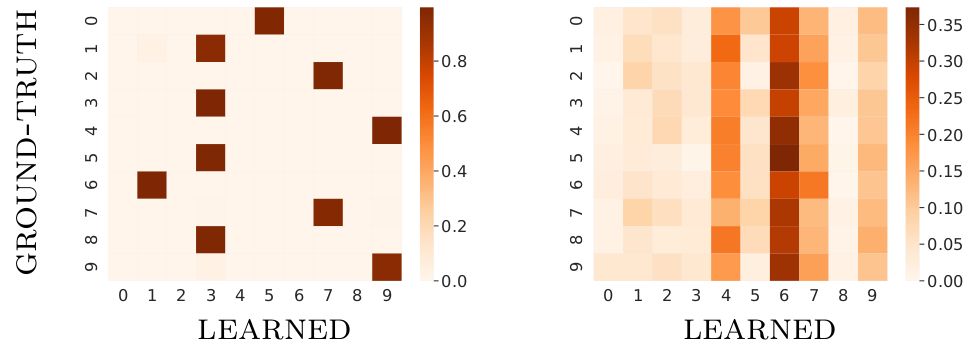

- 📊 Visualize how your models handle different learning & reasoning tasks!

- 🧮 Run algorithmic, logical, and high-stakes tasks w/ known reasoning shortcuts (RSs).

- 📊 Evaluate concept quality via F1, accuracy & concept collapse.

- 🛠️ Easily customize the tasks and count RSs a priori using our countrss tool!
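To make the concept-quality metrics above concrete, here is a minimal pure-Python sketch. It is not rsbench's own code: the function names are hypothetical, and the collapse score (one minus the fraction of ground-truth concept values the model actually uses) is an assumed definition chosen for illustration.

```python
def concept_accuracy(true, pred):
    """Fraction of samples whose predicted concept matches the annotation."""
    return sum(t == p for t, p in zip(true, pred)) / len(true)


def macro_f1(true, pred):
    """Unweighted mean of per-concept F1 over the ground-truth concept values."""
    scores = []
    for c in sorted(set(true)):
        tp = sum(t == c and p == c for t, p in zip(true, pred))
        fp = sum(t != c and p == c for t, p in zip(true, pred))
        fn = sum(t == c and p != c for t, p in zip(true, pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


def concept_collapse(true, pred):
    """Assumed definition: 0 when every ground-truth value is used, near 1 when
    the model squeezes everything into a few values. Assumes predictions take
    values from the ground-truth vocabulary."""
    return 1 - len(set(pred)) / len(set(true))


# Toy digit concepts: the model merges 1 into 0 and 3 into 2.
true_concepts = [0, 1, 2, 3, 0, 1, 2, 3]
pred_concepts = [0, 0, 2, 2, 0, 0, 2, 2]

acc = concept_accuracy(true_concepts, pred_concepts)
f1 = macro_f1(true_concepts, pred_concepts)
collapse = concept_collapse(true_concepts, pred_concepts)
print(f"accuracy={acc:.2f} macro-F1={f1:.2f} collapse={collapse:.2f}")
```

In this toy case the model uses only two of the four digit values, so the collapse score is high even though half of the individual concept predictions are correct.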

NeSy models might learn wrong concepts but still make perfect predictions!

Example: A self-driving car 🚗 stops in front of a 🚦🔴 or a 🚶. Even if it confuses the two, it outputs the right prediction!
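The self-driving example can be checked by brute force. The sketch below is an illustration under stated assumptions, not the countrss tool: it assumes two binary concepts (red light, pedestrian), the rule "stop if either is present", and models a concept extractor as a deterministic map from ground-truth concept states to predicted ones. All names are hypothetical.

```python
from itertools import product

# Every ground-truth scene as (red_light, pedestrian).
scenes = list(product([0, 1], repeat=2))


def stop(red_light, pedestrian):
    """Prior knowledge: stop if there is a red light OR a pedestrian."""
    return int(red_light or pedestrian)


def swapped_extractor(scene):
    """A model that confuses the two concepts."""
    red_light, pedestrian = scene
    return (pedestrian, red_light)


def label_accuracy(extractor):
    """How often the rule applied to predicted concepts gives the right action."""
    return sum(stop(*extractor(s)) == stop(*s) for s in scenes) / len(scenes)


def concept_accuracy(extractor):
    """How often the predicted concepts match the ground truth exactly."""
    return sum(extractor(s) == s for s in scenes) / len(scenes)


# Count every deterministic concept map that still predicts all labels correctly.
perfect_maps = 0
for images in product(scenes, repeat=len(scenes)):
    mapping = dict(zip(scenes, images))
    if all(stop(*mapping[s]) == stop(*s) for s in scenes):
        perfect_maps += 1

print(label_accuracy(swapped_extractor))    # label predictions
print(concept_accuracy(swapped_extractor))  # concept predictions
print(perfect_maps)                         # maps with perfect labels
```

Under these assumptions, the swapped extractor gets every label right while matching the true concepts in only half of the scenes, and the count includes the one intended map plus every shortcut that the labels alone cannot rule out.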

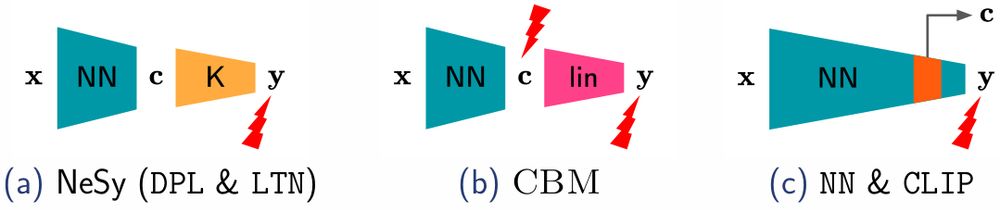

1️⃣ Neuro-Symbolic models (#NeSy)

2️⃣ Concept Bottleneck Models (#CBMs)

3️⃣ Black-box Neural Networks (NNs*)

4️⃣ Vision-Language Models (#VLMs*)

* through post-hoc concept-based explanations (e.g., TCAV)

👉 proceedings.neurips.cc/paper_files/...

👉 openreview.net/forum?id=pDc...

👉 unitn-sml.github.io/rsbench/