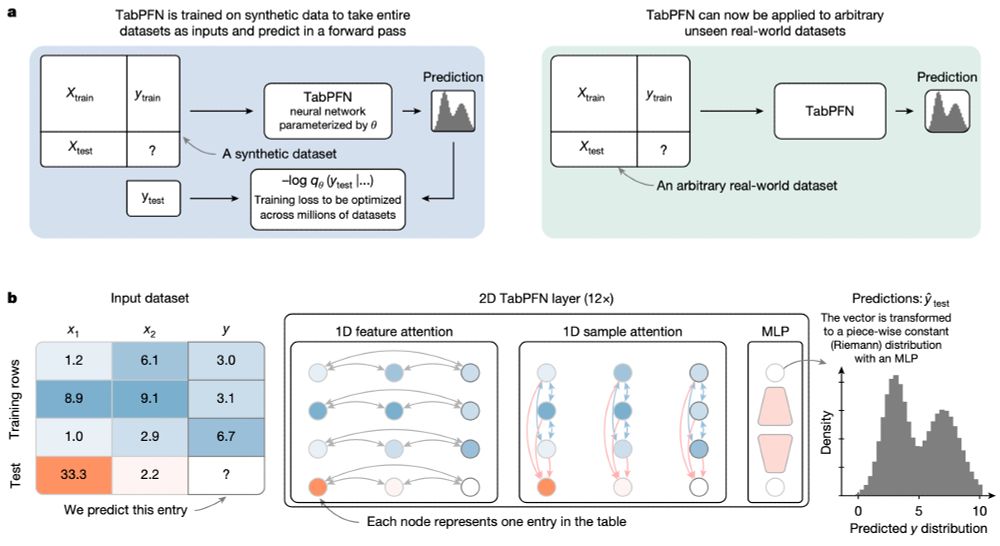

The PFN idea is to use a prior, e.g. a Bayesian neural network (BNN) prior, sample datasets from that prior, and then train to predict the held-out labels of these datasets (no training on real-world data). 2/n
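A minimal sketch of the data side of this idea, assuming a toy prior: a small random MLP stands in for the BNN, and each draw of its weights defines one synthetic dataset. The function names, sizes, and the binary-label setup are illustrative assumptions, not the actual PFN prior.

```python
import numpy as np

def sample_prior_dataset(rng, n_rows=64, n_features=4, hidden=16):
    """Draw one synthetic dataset from a toy BNN-style prior (illustrative only)."""
    # One draw of the network weights is one draw from the prior.
    w1 = rng.normal(size=(n_features, hidden))
    b1 = rng.normal(size=hidden)
    w2 = rng.normal(size=hidden)
    # Sample inputs and push them through the sampled network.
    x = rng.normal(size=(n_rows, n_features))
    logits = np.tanh(x @ w1 + b1) @ w2
    # Binary labels are sampled from the network's output probabilities.
    probs = 1.0 / (1.0 + np.exp(-logits))
    y = (rng.random(n_rows) < probs).astype(np.int64)
    return x, y

def make_task(rng, n_context=48, **kwargs):
    """Split one sampled dataset into a labeled context and a held-out part."""
    x, y = sample_prior_dataset(rng, **kwargs)
    return (x[:n_context], y[:n_context]), (x[n_context:], y[n_context:])

rng = np.random.default_rng(0)
(ctx_x, ctx_y), (qry_x, qry_y) = make_task(rng)
```

A training loop would call `make_task` repeatedly and fit a model to predict `qry_y` from `(ctx_x, ctx_y, qry_x)`, so no real-world data enters training.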

I used 20 interactions in the easy mode to learn the models' behaviors.

In hard mode (see prev post), you need to match three responses to the LLM name.

To figure out if this is the case, I created a game with two modes.

The game is about identifying which answer was provided by which LLM.

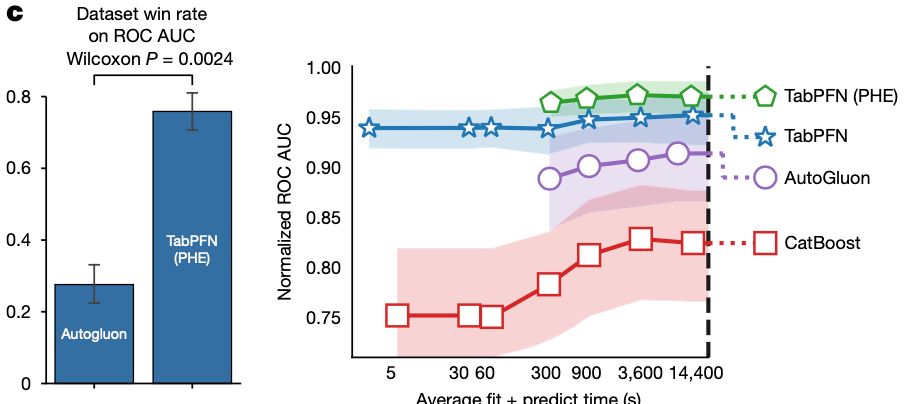

We train a neural network that natively handles tables, using attention across rows and columns, on millions of artificial tabular datasets from a meticulously designed data generator. It then performs in-context learning to make predictions on unseen data.
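A rough sketch of what "attention across rows and columns" can look like, assuming each table cell already has an embedding vector. This is not the published architecture: the attention here has no learned projections, heads, or masking between training and test rows, and all names are made up for illustration.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x):
    """Unparameterized dot-product self-attention over the second-to-last axis."""
    d = x.shape[-1]
    scores = x @ np.swapaxes(x, -1, -2) / np.sqrt(d)
    return softmax(scores, axis=-1) @ x

def two_way_attention(cells):
    """cells: (n_rows, n_cols, d) array of cell embeddings."""
    # Attention across columns: the cells of one row attend to each other.
    cells = cells + self_attention(cells)
    # Attention across rows: the cells of one column attend to each other.
    by_col = np.swapaxes(cells, 0, 1)  # (n_cols, n_rows, d)
    by_col = by_col + self_attention(by_col)
    return np.swapaxes(by_col, 0, 1)  # back to (n_rows, n_cols, d)

rng = np.random.default_rng(0)
table = rng.normal(size=(5, 3, 8))  # 5 rows, 3 columns, 8-dim embeddings
out = two_way_attention(table)
```

Because nothing in this sketch depends on row position, shuffling the rows of the input shuffles the rows of the output in the same way, which is the behavior one would want for a table.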

Instead, a pre-trained neural network is: the new TabPFN, as we just published in Nature 🎉
