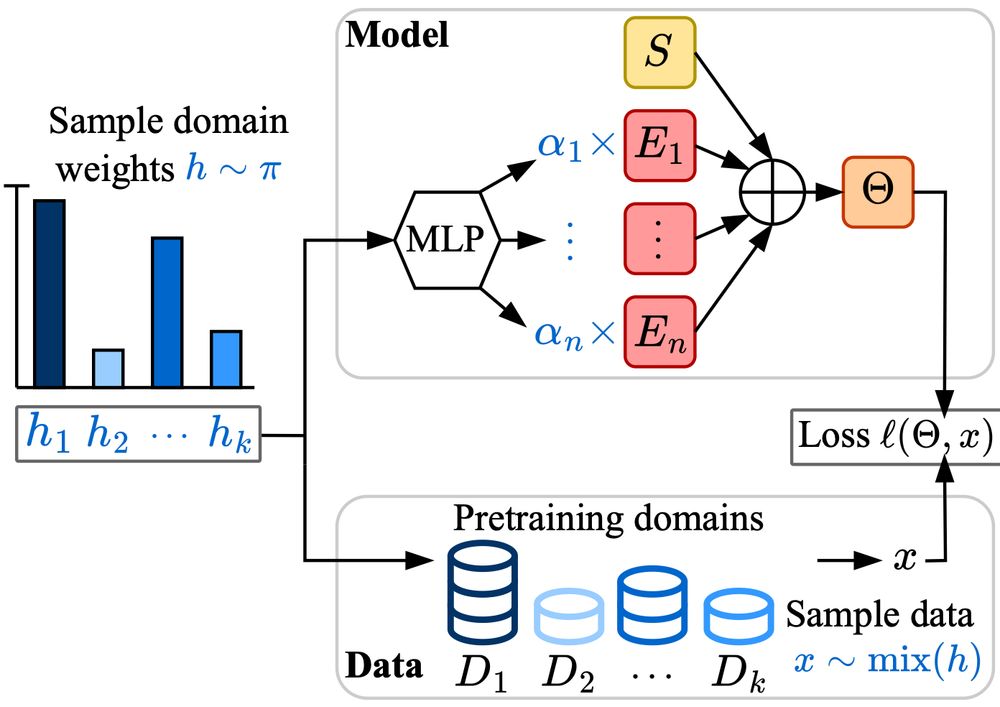

We explored this through the lens of MoEs:

Made with ❤️ at Apple

Thanks to my co-authors David Grangier, Angelos Katharopoulos, and Skyler Seto!

arxiv.org/abs/2502.01804

"If I want a small, capable model, should I distill from a more powerful model, or train from scratch?"

Our distillation scaling law shows, well, it's complicated... 🧵

arxiv.org/abs/2502.08606
