HAI Fellow @ Stanford focusing on risk & safety 🖇️ 🦜

Hear from innovators who have made new solutions and @consumerreports.org's food safety experts!

action.consumerreports.org/20251120micr... #microplastics

#bluesky #ai #tech

Their “Scientist AI” proposal allows us to disable agentic and planning components—building in off-switches from the start.

📄 arxiv.org/abs/2405.20009 #bluesky

- AIAAIC (www.aiaaic.org/aiaaic-repos...) and

- MIT's AI Incident Tracker (airisk.mit.edu/ai-incident-...).

Pretty shocking to see the numbers on autonomous vehicle incidents. Very few of these reach the headlines.

AI safety needs tools to track compound harm.

📑 arxiv.org/abs/2401.07836

#TechEthics #bluesky

To what extent is this happening in practice?

📄 arxiv.org/abs/2305.15324

📄 arxiv.org/abs/2401.07836

@cbsaustin @velez_tx

A study of 1,200 kids’ cereals launched since 2010 finds rising fat, salt & sugar – and falling protein & fibre.

Despite health claims, many cereals now pack over 45% of a child's daily sugar limit per bowl.

🔗 doi.org/10.1001/jama...

#Nutrition #ChildHealth #SciComm 🧪

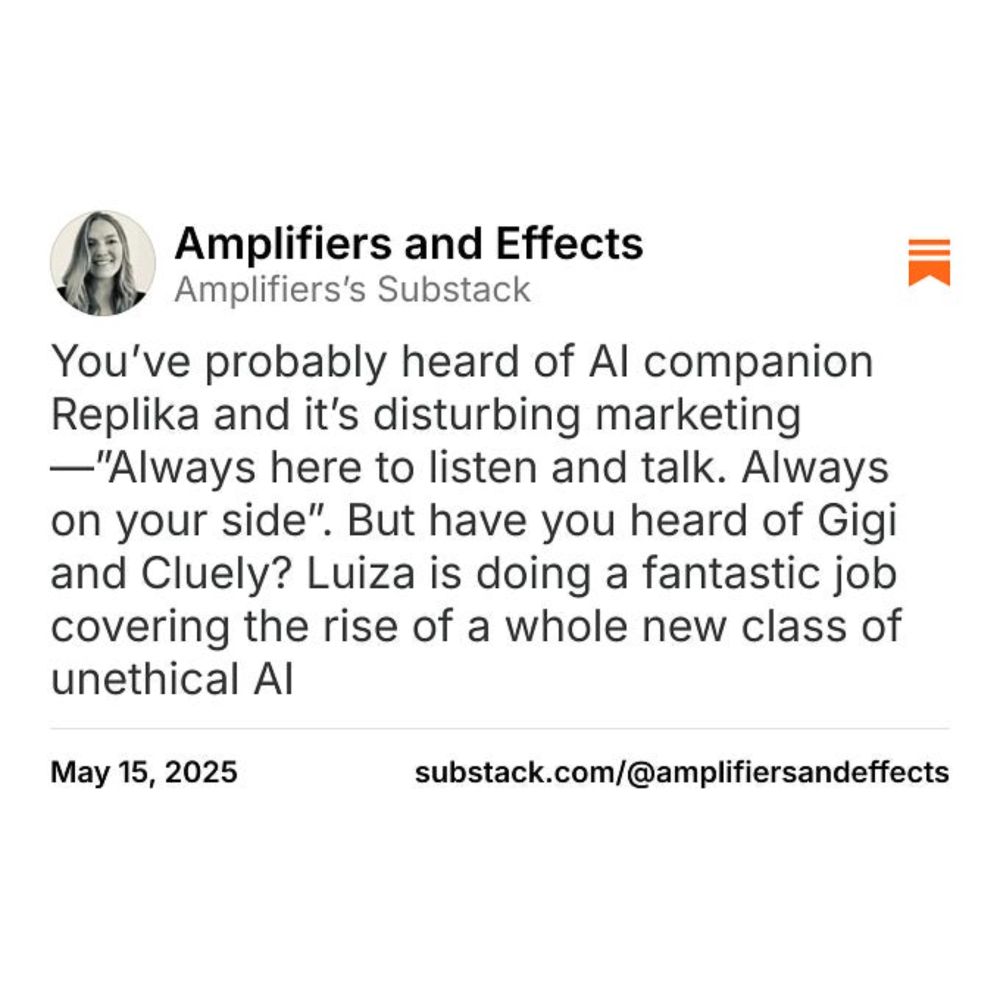

Read today's essay and subscribe to my newsletter using the link below:

A new study found that long-term exposure to starch-based microplastics caused liver, gut & ovarian damage in mice, and disrupted blood sugar & circadian rhythms.

🔗 doi.org/10.1021/acs....

#Plastics #Toxicology #SciComm
