https://ryokamoi.github.io/

@colmweb.org #COLM2025! See you in Montreal🍁

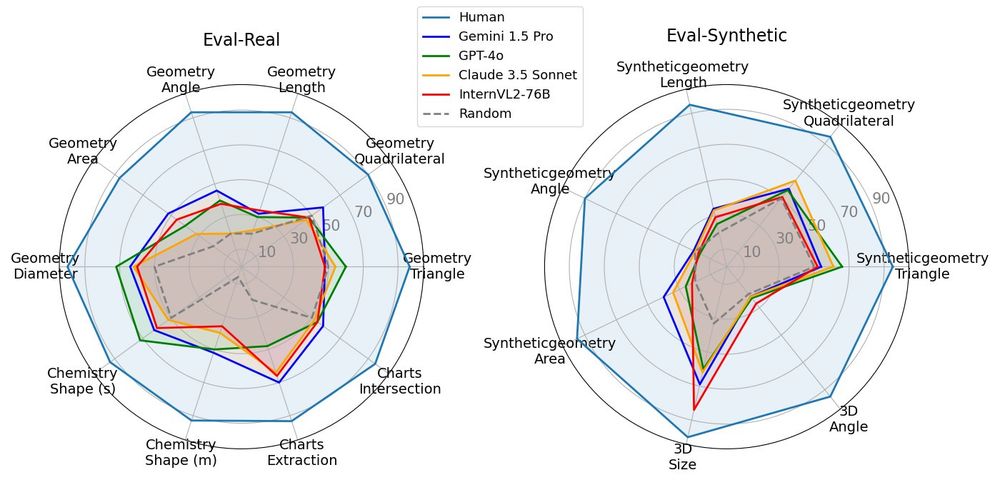

We find that even recent Vision Language Models struggle with simple questions about geometric properties in images, such as "What is the degree of angle AOD?"🧐

arxiv.org/abs/2412.00947

bsky.app/profile/ryok...

github.com/open-compass...

VisOnlyQA reveals that even recent LVLMs like GPT-4o and Gemini 1.5 Pro stumble on simple visual perception questions, e.g., "What is the degree of angle AOD?"🧐

arxiv.org/abs/2412.00947

We conclude that we need to improve both the training data and model architecture of LVLMs for better visual perception. [4/n]

Recent benchmarks for LVLMs often involve reasoning or knowledge, putting less focus on visual perception. In contrast, VisOnlyQA is designed to evaluate visual perception directly. [2/n]

We introduce VisOnlyQA, a new dataset for evaluating the visual perception of LVLMs. Existing LVLMs perform poorly on it. [1/n]

arxiv.org/abs/2412.00947

github.com/psunlpgroup/...
