But if we succeed, the future could be more beautiful than we can possibly imagine today.

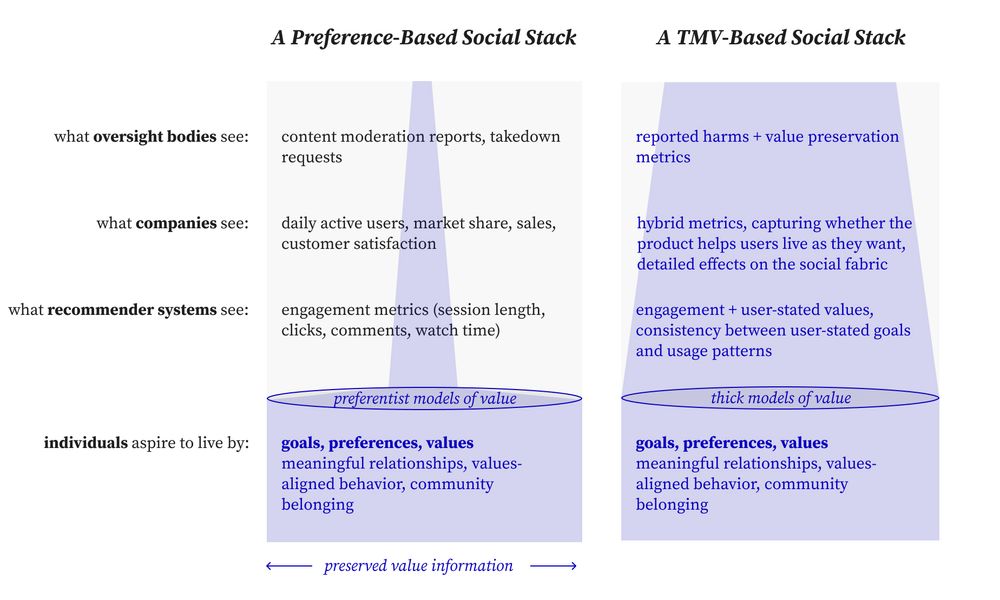

TMV (thick models of value) is a broad class of structured approaches to modeling values and norms that:

1. are more robust against distortions

2. better handle collective values and norms

3. generalize better

But this lack of internal structure becomes a critical weakness when we need *reliability* across contexts and institutions.

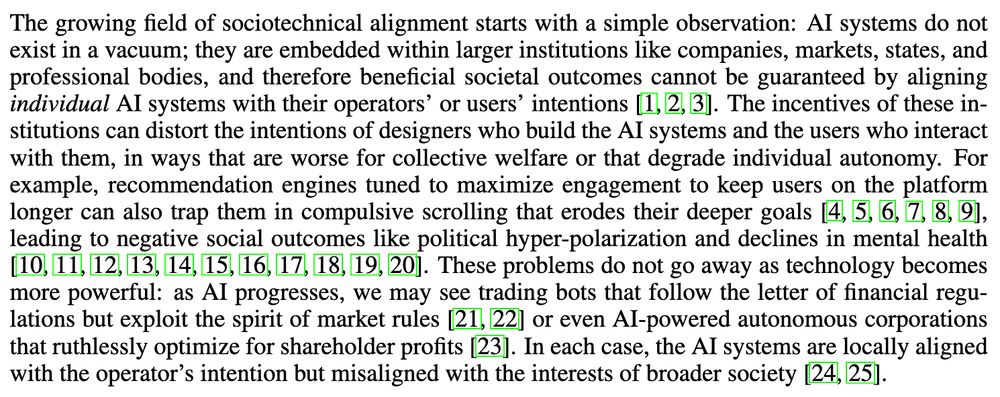

AI systems don't exist in a vacuum. They are embedded within institutions whose incentives shape their deployment.

Often, institutional incentives are not aligned with what's in our best interest.

- A position paper that articulates the conceptual foundations of Full-Stack Alignment (FSA) (jytmawd4y4kxsznl.public.blob.vercel-storage.com/Full_Stack_...)

- A website which will be the homepage of FSA going forward (www.full-stack-alignment.ai/)

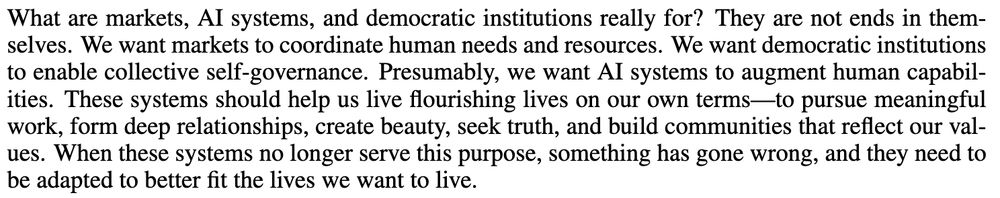

A research program dedicated to co-aligning AI systems *and* institutions with what people value.

It's the most ambitious project I've ever undertaken.

Here's what we're doing: 🧵
