@xpnsec.com drops knowledge on LLM security w/ his latest post showing how attackers can bypass LLM WAFs by confusing the tokenization process to smuggle tokens to back-end LLMs.

Read more: ghst.ly/4koUJiz

#IdentitySecurity #CyberSecurity

(1/6)

We are now hiring Consultants and Senior Consultants to join the team as operators, trainers, and program developers.

Learn more & apply today! ghst.ly/3PBmGFZ

📊 1M public posts from Bluesky's firehose API

🔍 Includes text, metadata, and language predictions

🔬 Perfect to experiment with using ML for Bluesky 🤗

huggingface.co/datasets/blu...

I thought they laid out a pragmatic approach to evaluating AI models that should be a component of any organization's assessment methodology.

cdn.openai.com/papers/opena...

Protect-StringWithAzureKeyVaultKey

Unprotect-StringWithAzureKeyVaultKey

github.com/BloodHoundAD...

Explanatory blog post coming soon.

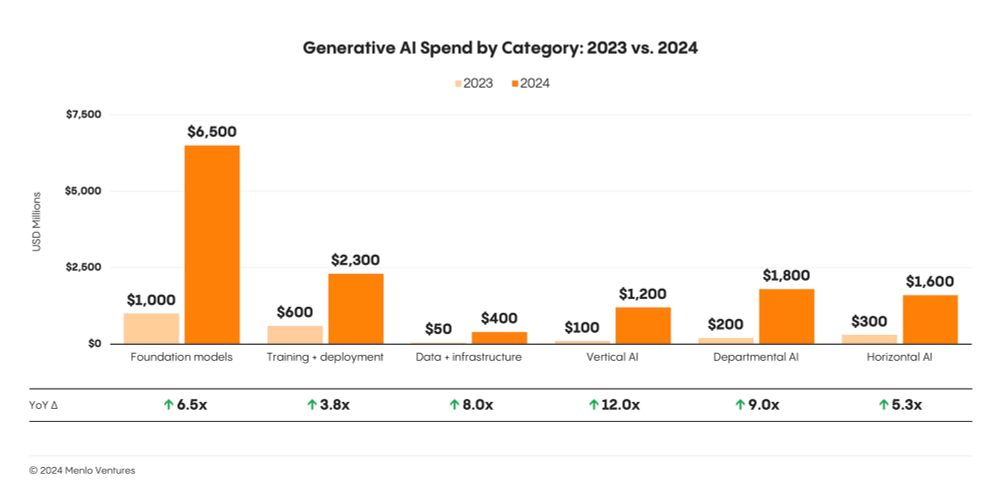

menlovc.com/2024-the-sta...

Go check out our latest report and hopefully you can apply some of the same lessons to your environment!

www.cisa.gov/news-events/...

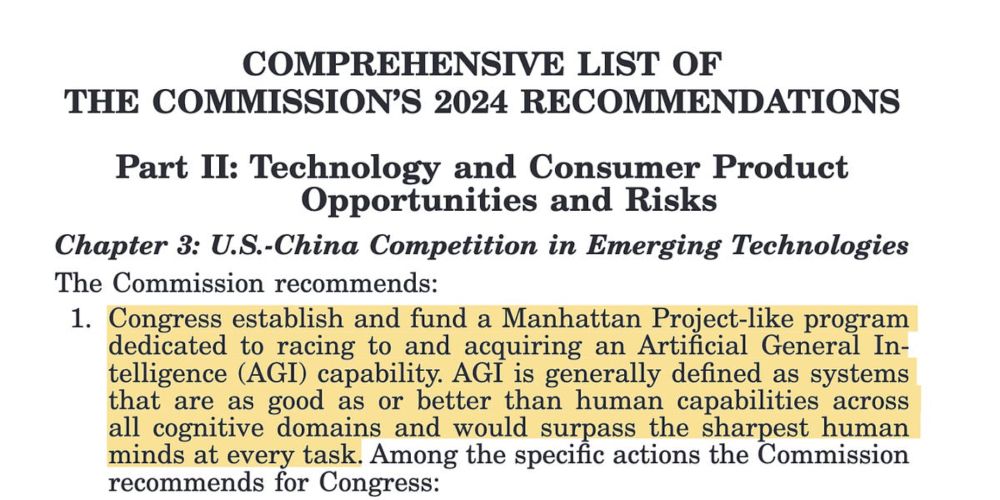

garrisonlovely.substack.com/p/china-hawk...

Are we going for security through obscurity by keeping system prompts private?

www.darkreading.com/cloud-securi...

research.google/pubs/securin...

Permissively licensed 1M synthetic instruction pairs covering different capabilities, such as text editing, creative writing, coding, and reading comprehension

Paper: arxiv.org/abs/2407.03502

Dataset: huggingface.co/datasets/mic...