Fantastic work by Sanghwan Kim, @rui-xiao.bsky.social, Mariana Iuliana Georgescu, Stephan Alaniz, and @zeynepakata.bsky.social.

📖 [Paper]: arxiv.org/abs/2412.01814

💻 [Code]: github.com/ExplainableM...

By @rui-xiao.bsky.social, Sanghwan Kim, Mariana Iuliana Georgescu, @zeynepakata.bsky.social, and Stephan Alaniz.

😼 [Project]: explainableml.github.io/flair-website/

💻 [Code]: github.com/ExplainableM...

We’re excited to celebrate @merceaotniel.bsky.social, who will defend his PhD on April 16th! See the thread for highlights of his research 👇

Trained on 30M pairs, it outperforms billion-scale models on zero-shot image-text retrieval and segmentation.

📄 arxiv.org/abs/2412.03561

🔓 github.com/ExplainableM...

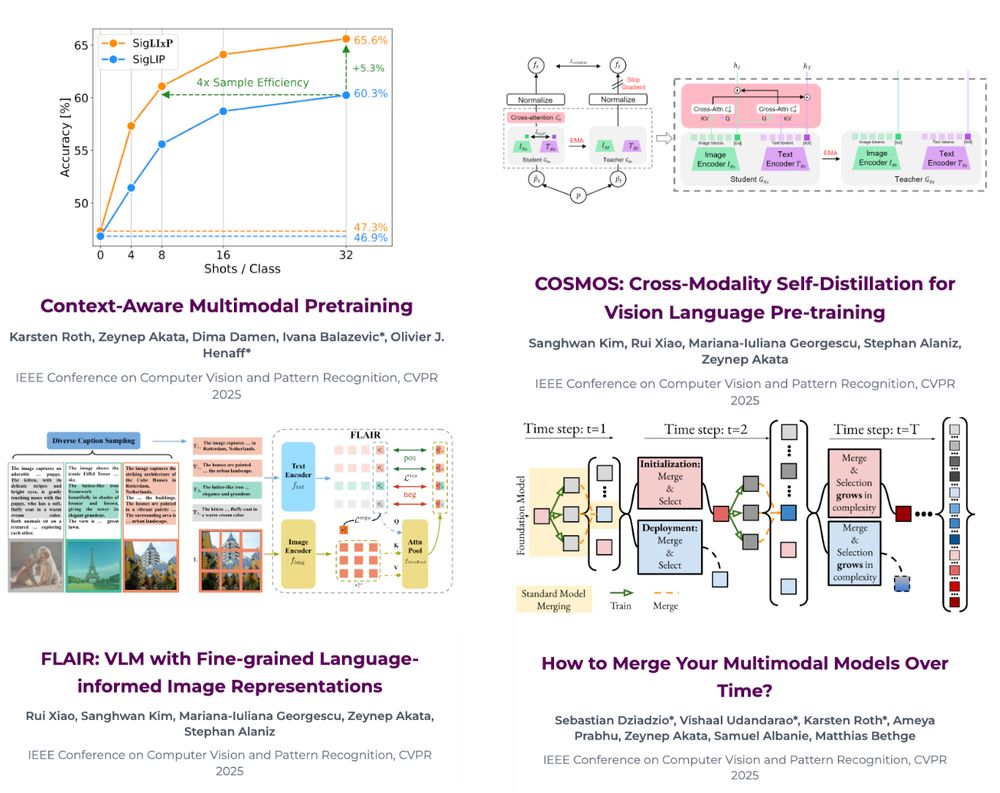

Our work spans multimodal pre-training, model merging, and more.

📄 Papers & code: eml-munich.de#publications

See the threads for highlights from each paper.

#CVPR