academic.oup.com/aje/article-...

3/3

www.thelancet.com/journals/lan...

2/3

Julia concludes by highlighting the need for structural change. Rigorous causal research takes time and thought. That's not possible if we're still expecting PhD students to publish 3-5 papers.

If a dataset is inappropriate for a particular question, the best you can do is NOT use it.

It shouldn't be our job, as scientists, to be showcasing datasets.

Additional nuances are discussed here: www.ahajournals.org/doi/10.1161/...

academic.oup.com/aje/article/...

pubmed.ncbi.nlm.nih.gov/38761102/

#StatsSky

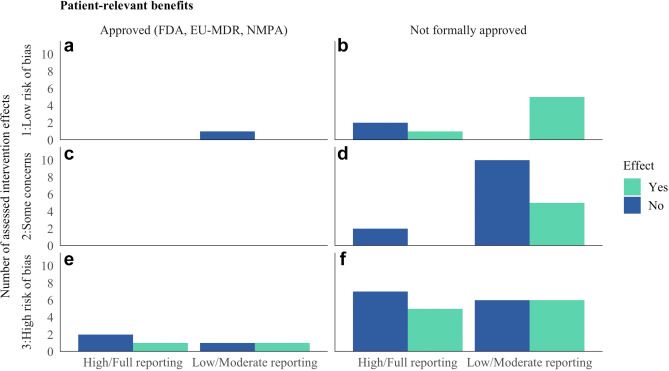

www.jclinepi.com/article/S089...
