PhD student @mila-quebec.bsky.social

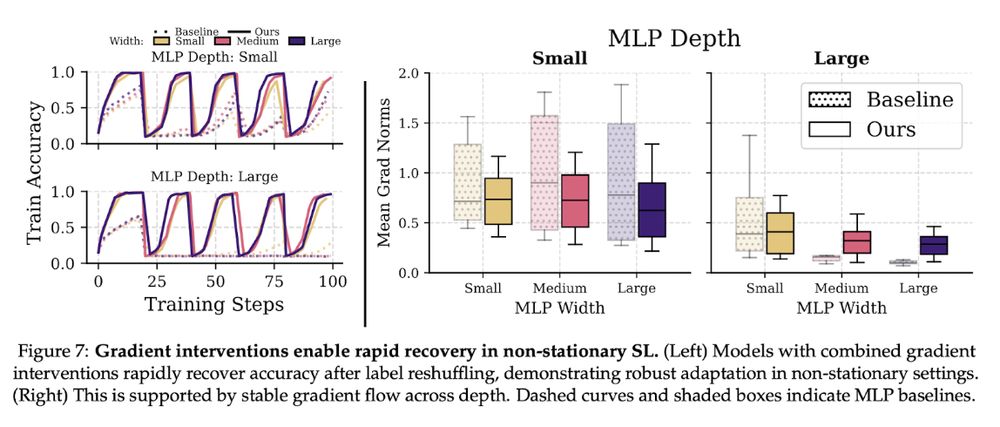

With both interventions, networks now show near-perfect plasticity. They can continually fit changing data across network scales. No collapse 💪

We benchmark against PQN and PPO and see large improvements in both performance and scalability.

Second-order optimizers capture curvature information, providing more stable updates than first-order methods.

Our ablations show the Kron optimizer shines in deep RL, helping agents adapt as learning evolves ✨
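
Here is a minimal sketch of what "capturing curvature" can look like for a single weight matrix, using Kronecker-factored preconditioning in the style of Shampoo. It is a stand-in and not the Kron optimizer itself. As we understand it, PSGD Kron also uses Kronecker-factored preconditioners but fits them with a different update rule. The names `KroneckerPreconditioner` and `inverse_root` are hypothetical, and the badly scaled least-squares problem is a toy.

```python
import numpy as np


def inverse_root(mat, p, eps=1e-6):
    """Return mat^(-1/p) for a symmetric positive semi-definite matrix."""
    eigvals, eigvecs = np.linalg.eigh(mat)
    eigvals = np.maximum(eigvals, 0.0) + eps  # clamp round-off negatives, regularize
    return (eigvecs * eigvals ** (-1.0 / p)) @ eigvecs.T


class KroneckerPreconditioner:
    """Shampoo-style preconditioner for one weight matrix (illustrative sketch only).

    Full curvature for an (m x n) matrix would need an (mn x mn) matrix; the
    Kronecker factorization keeps only an (m x m) and an (n x n) factor.
    """

    def __init__(self, shape, lr=0.5, eps=1e-4):
        m, n = shape
        self.left = eps * np.eye(m)   # running sum of G @ G.T (row statistics)
        self.right = eps * np.eye(n)  # running sum of G.T @ G (column statistics)
        self.lr = lr

    def step(self, weight, grad):
        self.left += grad @ grad.T
        self.right += grad.T @ grad
        # Precondition from both sides: L^(-1/4) @ G @ R^(-1/4)
        direction = inverse_root(self.left, 4) @ grad @ inverse_root(self.right, 4)
        return weight - self.lr * direction


# Toy problem: least squares with badly scaled input features.
rng = np.random.default_rng(0)
X = rng.normal(size=(256, 8)) * np.logspace(0, 1, 8)
W_true = rng.normal(size=(8, 4))
Y = X @ W_true


def loss_and_grad(W):
    residual = X @ W - Y
    return 0.5 * np.sum(residual ** 2) / len(X), X.T @ residual / len(X)


W = np.zeros((8, 4))
opt = KroneckerPreconditioner(W.shape)
initial_loss, _ = loss_and_grad(W)
for _ in range(200):
    _, grad = loss_and_grad(W)
    W = opt.step(W, grad)
final_loss, _ = loss_and_grad(W)
print(f"loss: {initial_loss:.3f} -> {final_loss:.3f}")
```

The two small factors are what keep this tractable for wide layers. Each factor's inverse root costs a cubic eigendecomposition in that factor's dimension, not in the number of weights.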

Residual connections create shortcuts that preserve gradients and keep them from vanishing ⚡️

We extend this with MultiSkip: broadcasting features to all fully connected layers, ensuring direct gradient flow at scale.
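
A standard residual block computes h + f(h). The rough NumPy sketch below reads MultiSkip as follows: the encoder's output features are concatenated onto the input of every fully connected layer after the first, including the output head. That is one plausible reading of "broadcasting features", not the authors' implementation. `init_multiskip` and `multiskip_forward` are hypothetical names. Every layer sees `features` directly, so the output keeps a one-hop path to them even if all hidden units die. The last lines check this by zeroing the hidden weights.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def init_multiskip(feature_dim, hidden_dim, num_layers, out_dim, rng):
    """He-initialized weights for an MLP whose layers also receive `features` (assumed design)."""
    params = []
    in_dim = feature_dim  # the first layer sees only the features
    for _ in range(num_layers):
        w = rng.normal(size=(in_dim, hidden_dim)) * np.sqrt(2.0 / in_dim)
        params.append((w, np.zeros(hidden_dim)))
        in_dim = hidden_dim + feature_dim  # later layers see [hidden, features]
    w_out = rng.normal(size=(in_dim, out_dim)) * np.sqrt(1.0 / in_dim)
    params.append((w_out, np.zeros(out_dim)))
    return params


def multiskip_forward(params, features):
    """Forward pass; `features` is concatenated onto every layer after the first."""
    h = features
    for i, (w, b) in enumerate(params[:-1]):
        layer_in = h if i == 0 else np.concatenate([h, features], axis=-1)
        h = relu(layer_in @ w + b)
    w_out, b_out = params[-1]
    # The output head also gets a direct, one-hop connection to the features.
    return np.concatenate([h, features], axis=-1) @ w_out + b_out


rng = np.random.default_rng(0)
params = init_multiskip(feature_dim=16, hidden_dim=32, num_layers=4, out_dim=3, rng=rng)
x = rng.normal(size=(5, 16))
out = multiskip_forward(params, x)

# Zero every hidden layer (as if all units were dead): the output still responds
# to the features through the broadcast connection into the head.
dead_params = [(np.zeros_like(w), b) for w, b in params[:-1]] + [params[-1]]
still_responds = not np.allclose(multiskip_forward(dead_params, x),
                                 multiskip_forward(dead_params, 2 * x))
print(out.shape, still_responds)
```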

Gradients are at the heart of this instability.

We relate vanishing gradients to classic RL diagnostics:

⚫ Dead neurons

⚫ Representation collapse

⚫ Loss landscape instability (from bootstrapping)
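
Two of these diagnostics are easy to compute from a batch of activations. The sketch below assumes ReLU activations. It measures representation collapse with the singular-value "effective rank" (srank) used in prior deep RL work. The thread does not say which exact metrics the authors use, so treat both functions as illustrative.

```python
import numpy as np


def dead_neuron_fraction(activations):
    """Fraction of ReLU units that output zero for every input in the batch.

    `activations` has shape (batch, units) and is taken after a ReLU.
    """
    dead = np.all(activations <= 0.0, axis=0)
    return float(dead.mean())


def effective_rank(features, delta=0.01):
    """srank: fewest singular values whose sum reaches (1 - delta) of the total.

    A value far below the feature dimension indicates representation collapse.
    """
    sv = np.linalg.svd(features, compute_uv=False)  # returned in descending order
    cumulative = np.cumsum(sv) / np.sum(sv)
    return int(np.searchsorted(cumulative, 1.0 - delta) + 1)


rng = np.random.default_rng(0)
acts = np.maximum(rng.normal(size=(64, 10)), 0.0)
acts[:, :3] = 0.0  # force three of ten units to be dead
healthy = rng.normal(size=(64, 16))
collapsed = rng.normal(size=(64, 2)) @ rng.normal(size=(2, 16))  # rank-2 features
print(dead_neuron_fraction(acts), effective_rank(healthy), effective_rank(collapsed))
```

Tracking these two numbers alongside per-layer gradient norms during training is what makes it possible to relate them to one another.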

But in RL, performance collapses due to non-stationarity!

We simulate this by shuffling labels periodically. The result? Gradients degrade, and bigger networks collapse! 🚫
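
Here is a minimal sketch of this kind of non-stationary supervised task. The inputs stay fixed, but every `shuffle_every` steps the labels are permuted, so the network has to keep re-fitting a new mapping. `ShuffledLabelTask` and its parameters are hypothetical. The thread does not specify the dataset, batch size, or shuffle schedule.

```python
import numpy as np


class ShuffledLabelTask:
    """Fixed inputs whose labels are re-permuted every `shuffle_every` batches (hypothetical helper)."""

    def __init__(self, inputs, labels, shuffle_every, seed=0):
        self.inputs = inputs
        self.labels = labels.copy()
        self.shuffle_every = shuffle_every
        self.rng = np.random.default_rng(seed)
        self.step_count = 0

    def next_batch(self, batch_size):
        # Change the input-to-label mapping at the start of each new phase.
        if self.step_count > 0 and self.step_count % self.shuffle_every == 0:
            self.labels = self.rng.permutation(self.labels)  # same label set, new mapping
        self.step_count += 1
        idx = self.rng.choice(len(self.inputs), size=batch_size, replace=False)
        return self.inputs[idx], self.labels[idx]


rng = np.random.default_rng(1)
inputs = rng.normal(size=(100, 4))
labels = np.arange(100) % 10
task = ShuffledLabelTask(inputs, labels, shuffle_every=5)

before = task.labels.copy()
for _ in range(5):
    task.next_batch(32)
unchanged_in_phase = np.array_equal(before, task.labels)  # first phase: no shuffle yet
task.next_batch(32)  # sixth batch starts a new phase
changed_after_phase = not np.array_equal(before, task.labels)
print(unchanged_in_phase, changed_after_phase)
```

Because only the labels move, any drop in fit after a shuffle reflects lost plasticity rather than a harder input distribution.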

Why? Increasing depth and width leads to severe vanishing gradients, causing unstable learning ⚠️

We diagnose this issue across several algorithms: DQN, Rainbow, PQN, PPO, SAC, and DDPG.
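
One way to watch for vanishing gradients is to log the gradient norm of every layer. The sketch below does this with hand-written backprop for a plain ReLU MLP with no skip connections. It uses an MSE loss on random targets. The function name, loss and initialization are assumptions for illustration. It measures a single point in training. The degradation described above would show up when these norms are logged over time while the targets keep changing.

```python
import numpy as np


def layer_gradient_norms(depth, width, rng, batch=64, in_dim=8):
    """Frobenius norm of dLoss/dW for each layer of a plain ReLU MLP (hypothetical helper)."""
    x = rng.normal(size=(batch, in_dim))
    y = rng.normal(size=(batch, 1))
    dims = [in_dim] + [width] * depth + [1]
    weights = [rng.normal(size=(a, b)) * np.sqrt(2.0 / a) for a, b in zip(dims[:-1], dims[1:])]

    # Forward pass, keeping each layer's input and pre-activation for backprop.
    inputs, pre_acts = [], []
    h = x
    for i, w in enumerate(weights):
        inputs.append(h)
        z = h @ w
        pre_acts.append(z)
        h = z if i == len(weights) - 1 else np.maximum(z, 0.0)  # linear output layer

    # Backward pass for loss = 0.5 * mean over the batch of (h - y)^2.
    grad = (h - y) / batch
    norms = []
    for i in reversed(range(len(weights))):
        if i != len(weights) - 1:
            grad = grad * (pre_acts[i] > 0)  # ReLU derivative
        norms.append(np.linalg.norm(inputs[i].T @ grad))
        grad = grad @ weights[i].T
    return norms[::-1]  # ordered from first layer to last


rng = np.random.default_rng(0)
for depth in (2, 8, 16):
    norms = layer_gradient_norms(depth, width=64, rng=rng)
    print(f"depth={depth}: first/last layer grad-norm ratio = {norms[0] / norms[-1]:.3g}")
```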

We propose gradient interventions that enable stable, scalable learning, unlocking significant performance gains across agents and environments!

Details below 👇
