🔹Evals - So users can build custom scoring functions

🔹Agents - So users can freely fetch and manipulate data, invoke callbacks, and more

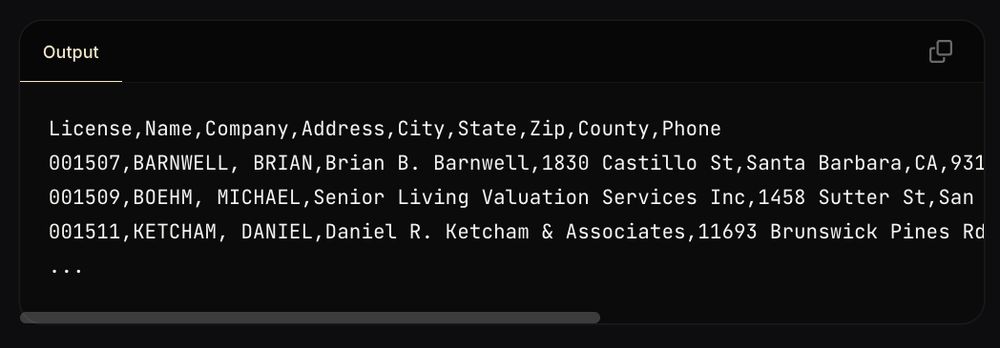

1️⃣ We fed sample HTML from the site to an LLM

2️⃣ We had the LLM write targeted extraction code

3️⃣ Riza executed that code securely

4️⃣ We got back clean, structured CSV data
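For a feel of step 2️⃣, here's a minimal sketch of the kind of extraction code an LLM might write. The sample HTML, the column names, and the `TableParser` / `html_table_to_csv` helpers are all made up for illustration; the real site's markup isn't shown here.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical sample markup (invented rows, not real appraiser data).
SAMPLE_HTML = """
<table>
  <tr><th>Name</th><th>License</th><th>City</th></tr>
  <tr><td>Jane Doe</td><td>A-1001</td><td>Oakland</td></tr>
  <tr><td>John Roe</td><td>A-1002</td><td>Fresno, CA</td></tr>
</table>
"""


class TableParser(HTMLParser):
    """Collects the text of every <th>/<td> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row currently being read
        self._cell = None  # text fragments of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


def html_table_to_csv(html: str) -> str:
    """Parse every table row in `html` and return it as CSV text."""
    parser = TableParser()
    parser.feed(html)
    parser.close()
    out = io.StringIO()
    csv.writer(out, lineterminator="\n").writerows(parser.rows)
    return out.getvalue()


if __name__ == "__main__":
    print(html_table_to_csv(SAMPLE_HTML), end="")
```

The `csv` module handles quoting, so a cell like `Fresno, CA` stays in one column.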

13,000 appraisers are shown in batches of 300, with no bulk download option:

LLMs can help you extract it, fast.

Let's see an example:

Here's a chart Anthropic's Claude generated using Riza that shows trends in San Francisco city employee salaries.

And you can repeat this on any other dataset.

Here's how:

Finally, you're ready to run the eval:

In our run, #Llama3 70b achieved a pass@1 of ~0.44, meaning it solved about 44% of HumanEval problems on the first try.
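If you're wondering where a number like that comes from, here's a minimal sketch of the standard unbiased pass@k estimate, 1 - C(n-c, k) / C(n, k), for n samples per problem of which c pass. The per-problem counts below (72 of 164 solved, one sample each) are assumed for illustration and are not taken from our actual run.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples drawn, c of them correct."""
    if n - c < k:
        # Fewer than k failing samples: any k draws must include a pass.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results, k: int) -> float:
    """Average pass@k over (n, c) pairs, one pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Assumed counts for illustration: 164 problems, 1 sample each, 72 solved.
results = [(1, 1)] * 72 + [(1, 0)] * 92

if __name__ == "__main__":
    print(round(mean_pass_at_k(results, 1), 2))  # prints 0.44
```

With one sample per problem, pass@1 is simply the fraction of problems solved.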

To avoid false failures due to timeouts, raise the timeouts in HumanEval's `evaluate_functional_correctness.py` file to 35s:

Modify the `execution.py` file in HumanEval. Instead of running the unsafe `exec()`, call Riza's Code Interpreter API.

Just import riza, and add 2 lines of code:
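Here's a rough sketch of the shape of that change, not the actual patch. The test script is assembled HumanEval-style (prompt + completion + tests + a `check(...)` call) and handed to a pluggable `run` callable instead of `exec()`. `RunResult`, `build_check_program`, and `local_stand_in_run` are hypothetical names; the stand-in uses a local subprocess only so the sketch runs on its own, and it is NOT a sandbox. In practice `run` would wrap the remote API call.

```python
import subprocess
import sys
from typing import Callable, NamedTuple


class RunResult(NamedTuple):
    exit_code: int
    stderr: str


def build_check_program(problem: dict, completion: str) -> str:
    """Join prompt, completion, and tests into one script, HumanEval-style."""
    return (
        problem["prompt"]
        + completion
        + "\n\n"
        + problem["test"]
        + "\n\n"
        + f"check({problem['entry_point']})\n"
    )


def check_correctness(
    problem: dict, completion: str, run: Callable[[str], RunResult]
) -> bool:
    """A completion passes if the assembled script exits cleanly."""
    return run(build_check_program(problem, completion)).exit_code == 0


def local_stand_in_run(code: str) -> RunResult:
    """Hypothetical stand-in for a remote sandbox call.

    NOT isolated: only use with trusted demo code like the toy problem below.
    """
    proc = subprocess.run(
        [sys.executable, "-c", code], capture_output=True, text=True, timeout=35
    )
    return RunResult(proc.returncode, proc.stderr)


# Toy problem in the HumanEval layout (made up, not from the benchmark).
PROBLEM = {
    "prompt": 'def add(a, b):\n    """Return a + b."""\n',
    "test": "def check(candidate):\n    assert candidate(2, 3) == 5\n",
    "entry_point": "add",
}

if __name__ == "__main__":
    print(check_correctness(PROBLEM, "    return a + b\n", local_stand_in_run))
    print(check_correctness(PROBLEM, "    return a - b\n", local_stand_in_run))
```

Keeping the runner behind a single callable means the rest of the eval harness doesn't care where the code actually executes.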

❌ Executing LLM-generated code on your machine is dangerous. HumanEval itself comes with a big warning.

❌ The code might delete files, steal secrets, or do other bad things.

And you don't want to have to manually review all 164 functions.

To run HumanEval:

1️⃣ First, get the LLM to generate code that completes 164 functions like this.

2️⃣ Then run the generated code and check whether it passes the tests.
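Step 1️⃣ can be sketched like this: loop over the problems, ask the model for a completion, and write one JSON line per sample with `task_id` and `completion` fields, which is the samples format HumanEval's evaluator reads. `generate_completion` is a hypothetical stand-in for your LLM call, and the `Demo/0` problem is made up.

```python
import io
import json


def generate_completion(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call; returns a fixed function body."""
    return "    return a + b\n"


def write_samples(problems: dict, out) -> int:
    """Write one JSONL record per problem to `out`; return how many were written."""
    count = 0
    for task_id, problem in problems.items():
        record = {
            "task_id": task_id,
            # Completion only: the function body, without the prompt.
            "completion": generate_completion(problem["prompt"]),
        }
        out.write(json.dumps(record) + "\n")
        count += 1
    return count


# Made-up problem in the same shape as a HumanEval entry.
PROBLEMS = {
    "Demo/0": {"prompt": 'def add(a, b):\n    """Return a + b."""\n'},
}

if __name__ == "__main__":
    buffer = io.StringIO()
    write_samples(PROBLEMS, buffer)
    print(buffer.getvalue(), end="")
```

Swap the `StringIO` buffer for a real file handle to produce a samples file on disk.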

Codegen is a hot topic. Today, many LLMs can generate functions and even apps.

But how do you know which model writes the best code?

Enter #HumanEval.

Our SDK is available in JavaScript, Python, and Go.

Here’s the same example, using our JavaScript SDK:

{"name": "Andrew"}

And you’ll get back a structured object:

"output": {
  "greeting": "Hello, Andrew"
}
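To make the input/output shape concrete, here's a small local sketch of a function that turns that input into that output. The `greet` and `run_with_json` names are assumptions for illustration, not the API's required entry point, and nothing here calls the service.

```python
import json


def greet(payload: dict) -> dict:
    """Take a structured input and return a structured output."""
    return {"greeting": f"Hello, {payload['name']}"}


def run_with_json(raw_input: str) -> str:
    """Decode JSON input, call the function, and wrap the result as JSON."""
    return json.dumps({"output": greet(json.loads(raw_input))})


if __name__ == "__main__":
    print(run_with_json('{"name": "Andrew"}'))
    # prints {"output": {"greeting": "Hello, Andrew"}}
```

Both sides stay plain JSON, so no stdout parsing is needed to get the result back.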

It's now easier to execute LLM-generated code with structured inputs and outputs.

Here's how:
