PMM @tailscale.com, 📍 NOLA

I agree about talking to folks, but it’s a helpful complement.

PostHog is up there on that list for me too. If you get it set up right, it’s essentially Gong but for PLG / self-serve rather than sales.

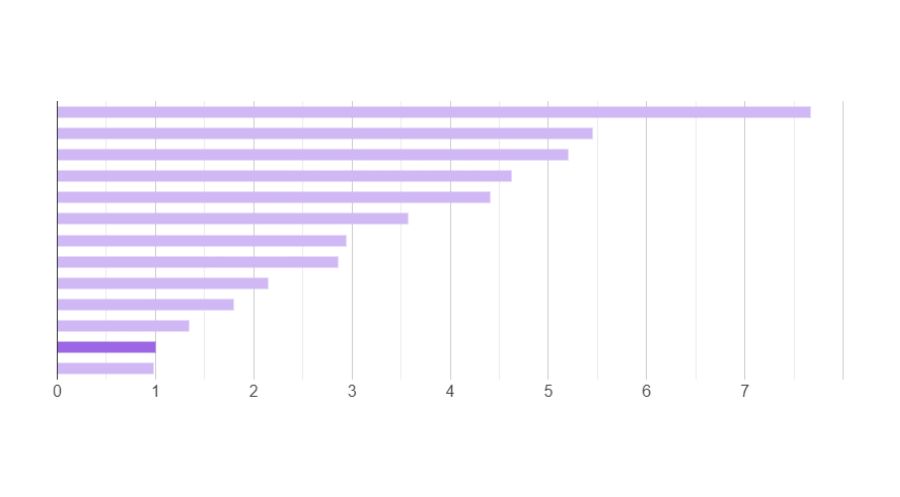

arxiv.org/pdf/2309.10668

In this world it seems like you might want a ton of small LLMs with really tight training sets for a given context.

Things always get very odd to me when you need to use a model at temperature 0.

LLMs and compression are interesting nonetheless. Still a bit of a head-scratcher.

They say the training text is mostly English and code, so it seems like either there are still a bunch of Chinese characters being used as high-context tokens, or something happened with the tokenizer?

Lambda does its best for NVIDIA GPUs, but you have to look at specific models / precisions.

lambdalabs.com/gpu-benchmarks

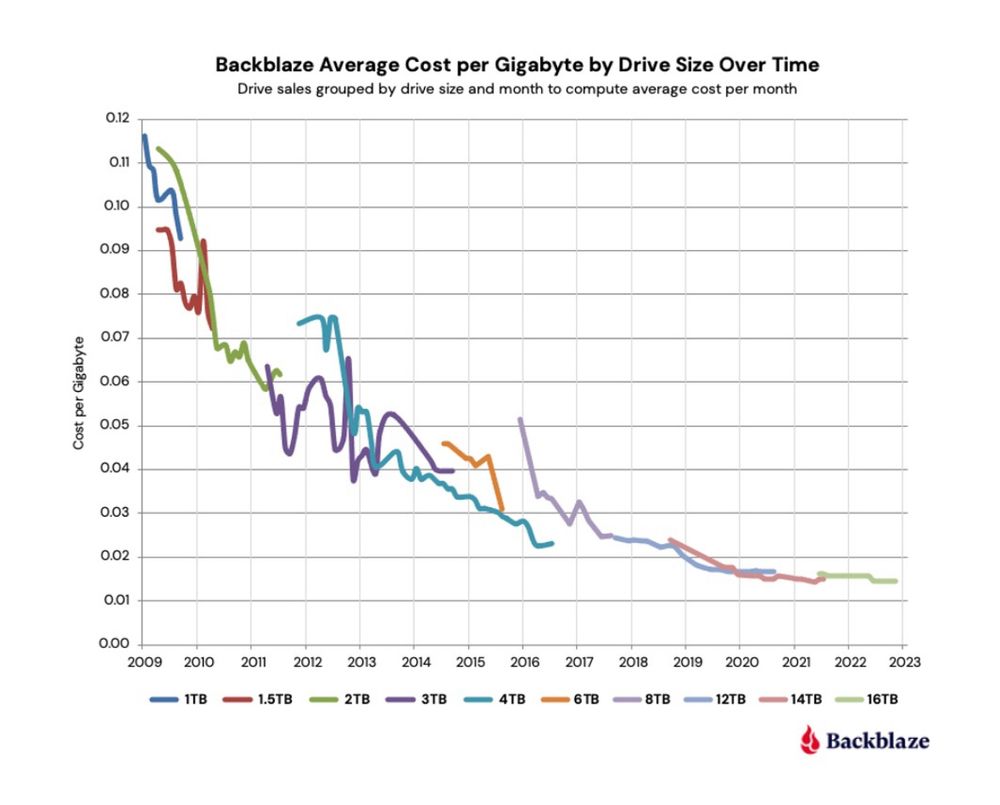

www.backblaze.com/blog/hard-dr...
