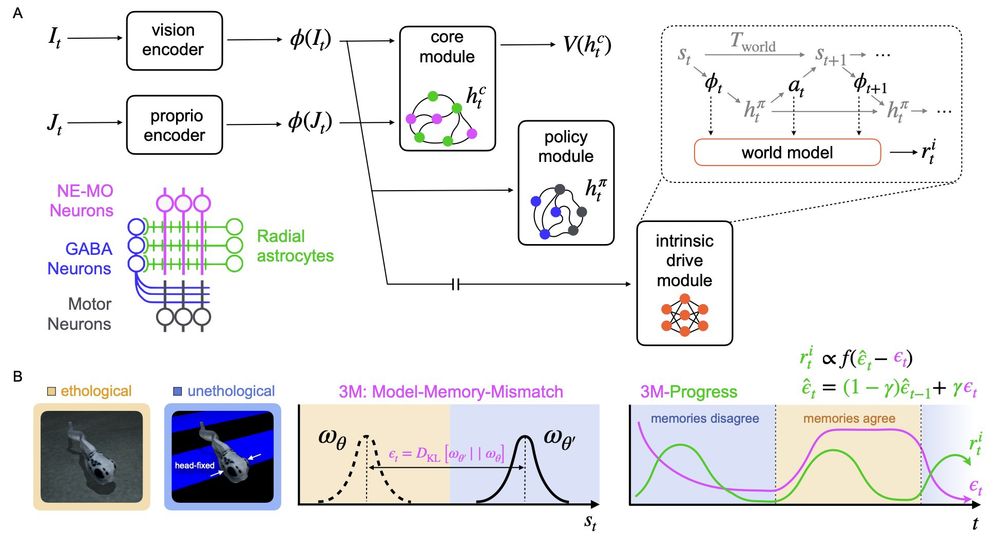

Our work shows this hinges on 1) a predictive world model and 2) memory primitives that ground these predictions in ethologically relevant contexts.

Thanks to my collaborators Alyn Kirsch and Felix Pei, and to Xaq Pitkow for his continued support!

Paper link: arxiv.org/abs/2506.00138