This points to a major flaw in the dataset, given that MIMIC is one of the most significant medical datasets for T2I generation. 💔

In other words, steps taken to protect patient information are in fact posing a threat to it.

In the dataset, sensitive patient information is hidden, i.e. de-identified, by replacing it with three underscores (“___”).
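To make the replacement concrete, here is a minimal sketch of placeholder-style de-identification. The regex patterns, the `deidentify` helper and the example report are all hypothetical, chosen only for illustration; they are not the pipeline actually used to de-identify MIMIC.

```python
import re

# Hypothetical patterns, for illustration only (not MIMIC's actual de-identification rules).
PATTERNS = [
    re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b"),  # dates such as 03/14/2150
    re.compile(r"\bDr\.\s+[A-Z][a-z]+\b"),       # clinician names such as "Dr. Smith"
    re.compile(r"\b\d{3}-\d{3}-\d{4}\b"),        # phone numbers such as 555-123-4567
]
PLACEHOLDER = "___"  # the three-underscore marker used in the dataset


def deidentify(report: str) -> str:
    """Replace every match of each sensitive-info pattern with the placeholder."""
    for pattern in PATTERNS:
        report = pattern.sub(PLACEHOLDER, report)
    return report


example = "Compared to the prior study on 03/14/2150, reviewed with Dr. Smith."
print(deidentify(example))
# Compared to the prior study on ___, reviewed with ___.
```

Every sensitive span, whatever it originally said, ends up as the same literal string "___" in the text the model is trained on.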

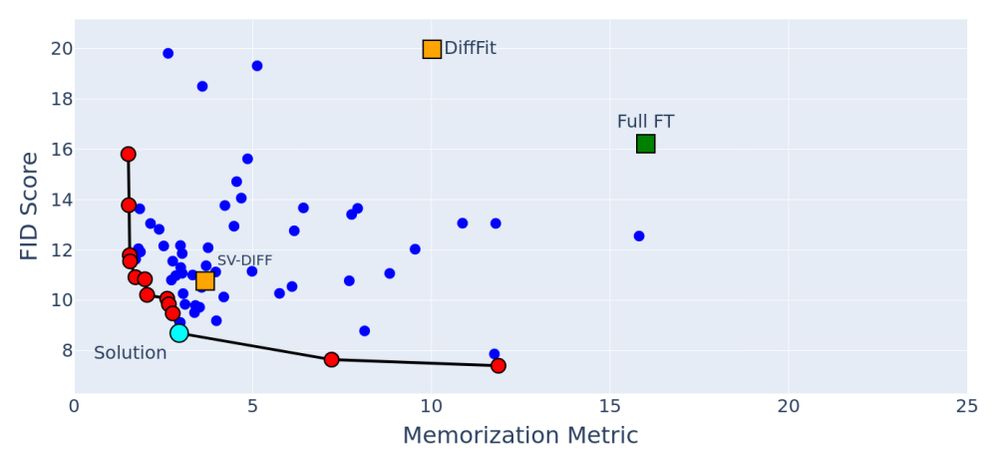

(1) Improve image generation quality

(2) Reduce memorization!

MemControl finds the optimal model capacity to use during fine-tuning: not more, not less!

Each marker in the figure is a diffusion model fine-tuned on the same data but with a different parameter subset.

Full FT (green) leads to high memorization!

Q. How do we fine-tune with fewer parameters? 🤔

A. Parameter-Efficient Fine-Tuning (PEFT) ✨
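For a sense of how much smaller the trainable set can get, here is a minimal sketch using LoRA, one common PEFT technique, chosen purely as an illustration (this thread does not say which PEFT method MemControl uses). LoRA freezes a d × k weight W and trains only a rank-r update B·A. The layer size and rank below are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def full_ft_params(d: int, k: int) -> int:
    """Trainable parameters when the whole d x k weight is updated (full fine-tuning)."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for a rank-r LoRA update B @ A, with B: d x r and A: r x k."""
    return r * (d + k)


def matmul(x: Matrix, y: Matrix) -> Matrix:
    """Plain-Python matrix product x @ y."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def lora_merged_weight(w: Matrix, b: Matrix, a: Matrix, scale: float = 1.0) -> Matrix:
    """Effective weight after fine-tuning: frozen W plus the scaled low-rank update B @ A."""
    delta = matmul(b, a)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


d, k, r = 320, 768, 4  # hypothetical layer size and LoRA rank
print(full_ft_params(d, k))  # 245760
print(lora_params(d, k, r))  # 4352, i.e. under 2% of the full layer
```

The rank r (and which layers get an update at all) acts as a knob on how much capacity fine-tuning is allowed to use.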

Artifact replication is shown in red boxes.