Research Scientist at Nvidia

Lab: http://pearls.ucsd.edu

Personal: prithvirajva.com

Some "relaxation" while I put out Prof fires for a smol bit then new adventures!

Every single (Bay) party. No I do not want to consult. I just wanna hang out.

Only 5 high schoolers in all of India do better than an LLM on the single most important exam of their lives, the one to get into the IITs

The legacy edu selection systems are now worse than useless

We then go beyond this with interactive RL-style evals to see how well models interact with a changing env

We do a thorough analysis of many types of architectures x training methods on two new evals

∞-THOR is an infinite len sim framework + guide on (new) architectures/training methods for VLA models

Is there a "RLSys" version of this on scaling RL+LLM training? If not + there's OSS community interest, I'll prob write one?

Things I hear a lot: "no one thought next token pred would work, or RL on language, etc". But that's just not true, there were def ppl working on them (they just weren't taken seriously by some communities)

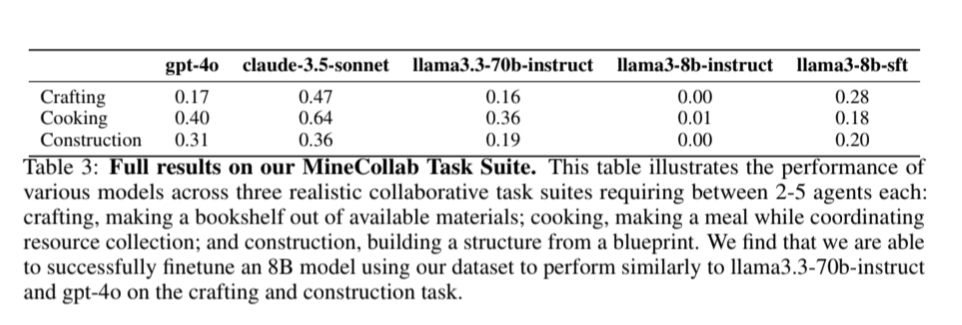

Despite this, we find that no existing agent is able to collaborate effectively right now; communication and coordination are still out of reach!

In particular, in our benchmark MineCollab, we test multi-agent collaboration in cooking, crafting, and construction

github.com/kolbytn/mind...

⛏️ Here are MINDcraft and MineCollab, a simulator and benchmark purpose-built to enable research in this area!

An RL algorithm inspired by trad retrieval that trains agents to more effectively use lists of documents in context for better multi-hop {QA, agentic tasks, and more}!

SOTA LLM agents are ok at synthetically (procedurally) generated tasks, but get at most *13%* on human-made ones

👨💻github.com/microsoft/tale-suite

A benchmark of a few hundred text envs, from science experiments and embodied cooking to solving murder mysteries. We test over 30 of the best LLM agents and pinpoint failure modes + how to improve

👨💻pip install tale-suite
