Engineering inference @ Menlo

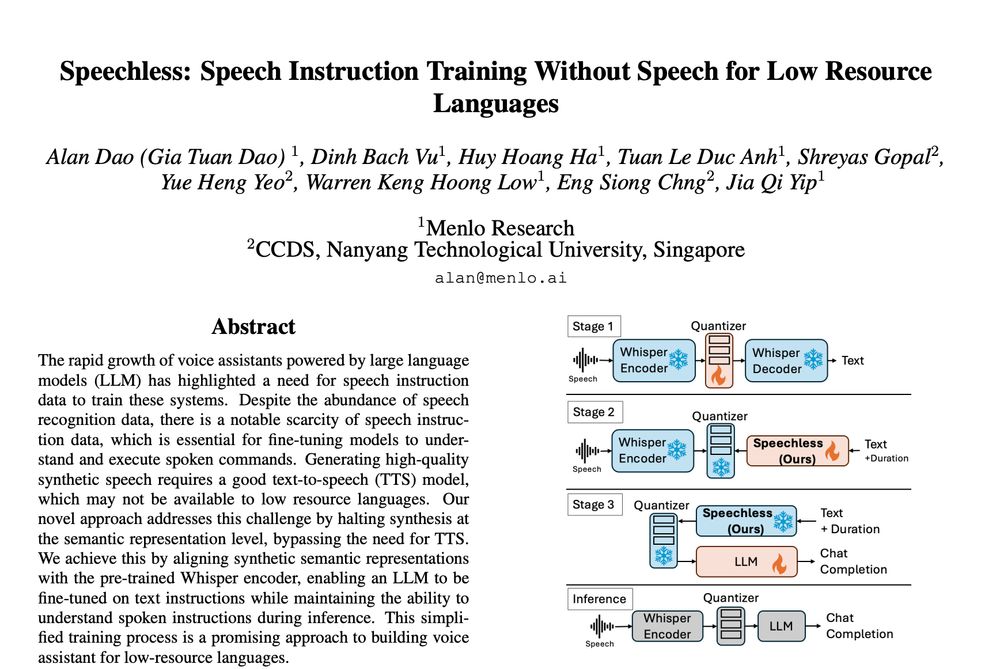

We're releasing new models, datasets, and a method for training speech instruction models without using any audio data. No waveforms, no TTS.

Links below.

a model that enables LLMs to solve mazes and think visually. By combining synthetic reasoning data, fine-tuning, and GRPO, the research team at Menlo Research has successfully trained a model that can navigate spatial reasoning tasks. #ML #RL

www.youtube.com/watch?v=dUS9...

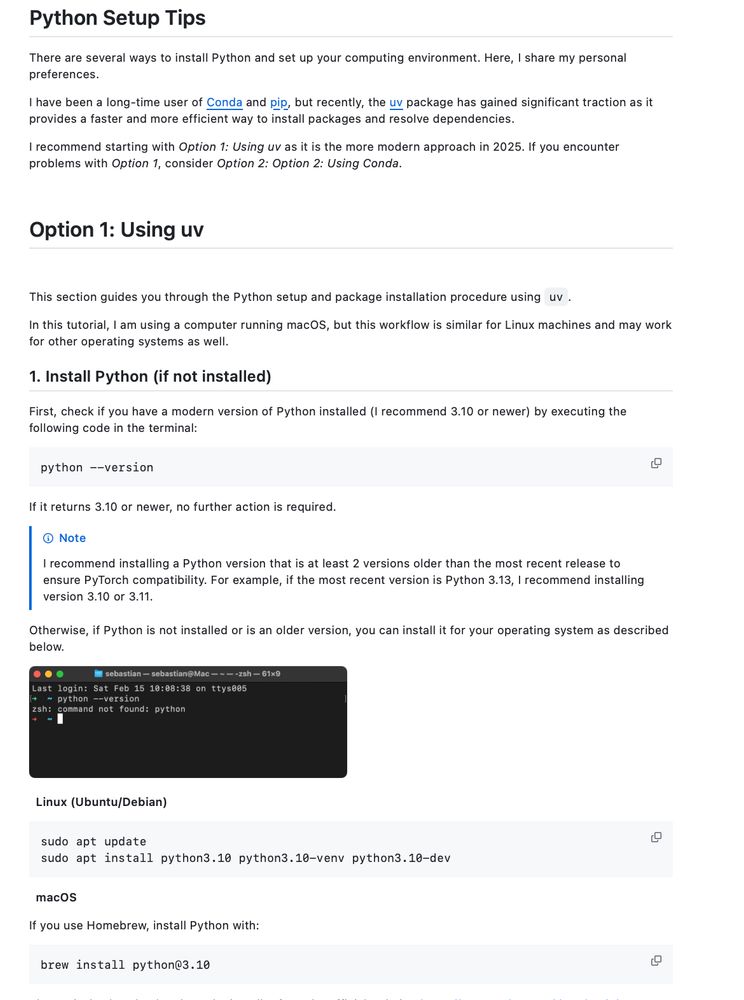

Here's my go-to recommendation for uv + venv in Python projects for faster installs, better dependency management: github.com/rasbt/LLMs-f...

(Any additional suggestions?)

Pranav Nair: Combining losses for different Matryoshka-nested groups of bits in each weight within a neural network leads to an accuracy improvement for models (esp. 2-bit reps).

Paper: "Matryoshka Quantization" at arxiv.org/abs/2502.06786
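To make the "nested groups of bits" idea concrete, here is a minimal Python sketch. It assumes unsigned 8-bit weight codes, a plain truncating slice of the most significant bits, and an equally weighted reconstruction loss on the weights themselves. The helper names (`slice_msb`, `nested_views`, `combined_loss`) are hypothetical, and the paper's actual slicing rule, loss, and weighting may differ.

```python
def slice_msb(code: int, bits: int, total_bits: int = 8) -> int:
    """Keep the `bits` most significant bits of an unsigned `total_bits`-bit code.

    The result stays on the original scale, so it can be compared
    directly with the full-width code.
    """
    if not 0 <= code < (1 << total_bits):
        raise ValueError("code out of range")
    if not 1 <= bits <= total_bits:
        raise ValueError("bits out of range")
    shift = total_bits - bits
    return (code >> shift) << shift


def nested_views(code: int, widths=(8, 4, 2)) -> dict:
    """Return the 8-, 4-, and 2-bit views nested inside a single 8-bit code."""
    return {w: slice_msb(code, w) for w in widths}


def combined_loss(codes, targets, widths=(8, 4, 2)) -> float:
    """Sum of mean squared errors over every nested width (equal weights assumed)."""
    total = 0.0
    for w in widths:
        errs = [(slice_msb(c, w) - t) ** 2 for c, t in zip(codes, targets)]
        total += sum(errs) / len(errs)
    return total
```

For example, `nested_views(0b10110110)` returns the same weight at three precisions, and `combined_loss` penalizes all three at once rather than only the full-width version.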

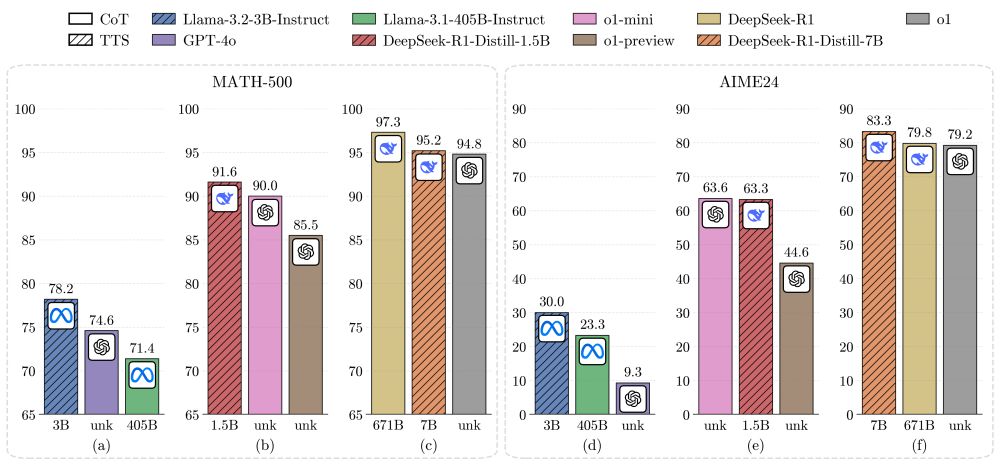

"A 1B LLM can exceed a 405B LLM on MATH-500. Moreover, on both MATH-500 and AIME24, a 0.5B LLM outperforms GPT-4o, a 3B LLM surpasses a 405B LLM, and a 7B LLM beats o1 and DeepSeek-R1, while with higher inference efficiency."

www.youtube.com/watch?v=P_fH...

It showed the tool helping a cheesemonger in Wisconsin write a product description by informing him Gouda accounts for '50 to 60 percent of global cheese consumption'.

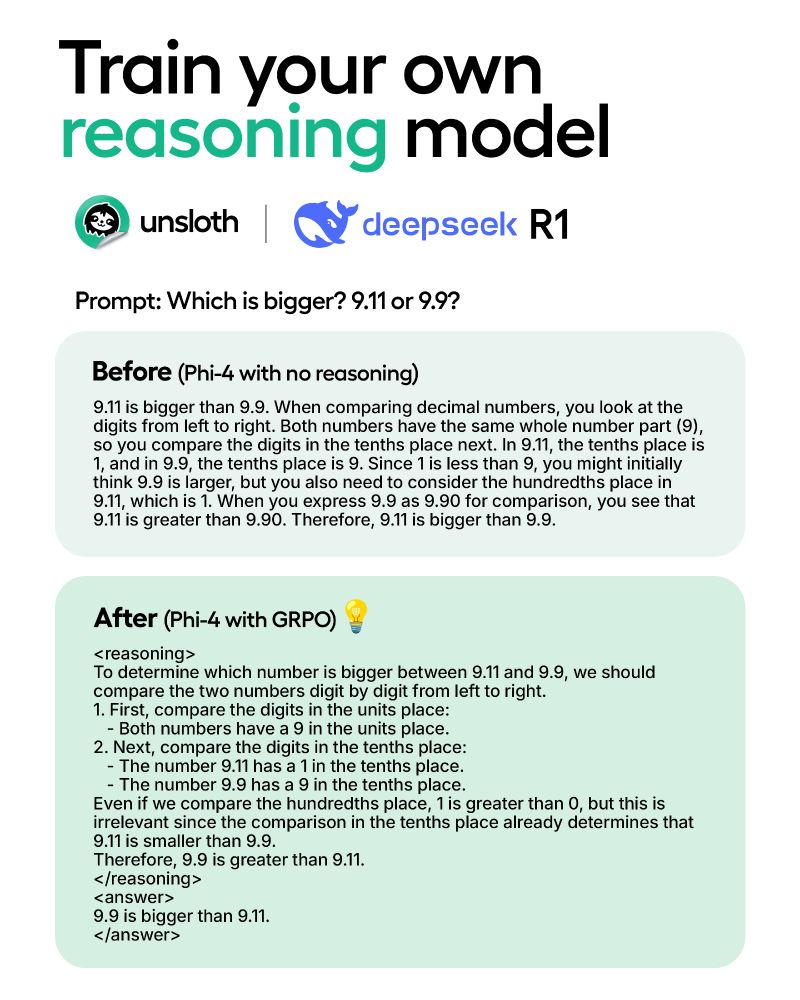

Experience the "Aha" moment with just 7GB VRAM.

Unsloth reduces GRPO training memory use by 80%.

15GB VRAM can transform Llama-3.1 (8B) & Phi-4 (14B) into reasoning models.

Blog: unsloth.ai/blog/r1-reas...

A parallelism-aware architecture modification that makes 70B Llama with tensor parallelism ~30% faster!

Paper: arxiv.org/abs/2501.06589

Aya 23 pairs a highly performant pre-trained model with the recent Aya dataset, making multilingual generative AI breakthroughs accessible to the research community. 🌍

arxiv.org/abs/2405.15032

We've summarized everything we did & saw in this first week since DS came to light

Results/code/dataset/experiment => if we missed one, share & we'll update

Link: huggingface.co/blog/open-r1...

- RL generalizes in rule-based envs, esp. when trained with an outcome-based reward

- SFT tends to memorize the training data and struggles to generalize OOD
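As a rough illustration of what an outcome-based reward in a rule-based environment can look like, here is a minimal Python sketch. It scores only the final answer and ignores the reasoning that led to it. The `<answer>` tag format and the function name `outcome_reward` are assumptions made for this example, not details from the work being summarized.

```python
import re

# Hypothetical output format: the final answer is wrapped in <answer> tags.
ANSWER_RE = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)


def outcome_reward(completion: str, expected: str) -> float:
    """Return 1.0 if the last tagged answer matches `expected`, else 0.0.

    Only the outcome is checked; intermediate steps earn no credit.
    """
    matches = ANSWER_RE.findall(completion)
    if not matches:
        return 0.0  # no parsable answer counts as a failure
    return 1.0 if matches[-1].strip() == expected.strip() else 0.0
```

Because the check is a fixed rule rather than a learned model, the reward depends only on whether the final answer is right.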

It is running smoothly on Qwen-1.5B w/ longer max_completion_length + higher num_generations, but haven't gotten LoRA or grad checkpointing working on multi-gpu w/ deepspeed yet for it.

gist.github.com/willccbb/467...
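For context on why a higher `num_generations` can matter: GRPO-style training samples a group of completions per prompt and scores each one relative to the rest of its group. The sketch below assumes `num_generations` means the group size (as in TRL's `GRPOConfig`) and uses the population standard deviation. The function name is hypothetical, and this is neither the gist's code nor TRL's implementation.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-4):
    """Normalize rewards within one prompt's group of sampled completions.

    `rewards` holds one scalar per completion; len(rewards) plays the role
    of num_generations. `eps` avoids division by zero when all rewards tie.
    """
    if not rewards:
        return []
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std is an assumption of this sketch
    return [(r - mu) / (sigma + eps) for r in rewards]
```

If every completion in a group earns the same reward, all advantages are zero and that prompt gives no learning signal. Larger groups make such ties less likely.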

The test for whether that’s true is whether OpenAI is relieved or not at the advent of DeepSeek.

Paraphrasing him, where he jokingly said: "pack it up boys, it's over"

Source: github.com/ggerganov/ll...

PPO, GRPO, PRIME: doesn’t matter what RL you use, the key is that it’s RL

experiment logs: wandb.ai/jiayipan/Tin...

x: x.com/jiayi_pirate...

notable: they claim they developed in parallel and that most of their experiments were performed *prior to* the release of R1 and they came to the same conclusions

hkust-nlp.notion.site/simplerl-rea...

📄 Paper: arxiv.org/abs/2501.13011

💡 Introductory explainer: deepmindsafetyresearch.medium.com/mona-a-meth...

⚙️ Technical safety post: www.alignmentforum.org/posts/zWySW...
