https://privatellm.ai

Glad you’re enjoying Private LLM. The boost you’re seeing is because we’re not an MLX/llama.cpp wrapper like LM Studio or Ollama (slowllama?).

We quantize each model (OmniQuant/GPTQ) for Apple Silicon, so even low-RAM iPhones and Macs run fast and reason better.

* 7B (iOS + macOS) – 8GB RAM or more

* 32B (macOS only) – 32GB RAM minimum

Handles bug fixing and code refactoring tasks. Trained on real GitHub issues via reinforcement learning.

Wilderness survival assistant, offline. Knows how to build shelters, find water, navigate terrain, etc.

Runs on any iOS/Mac device with 8GB+ RAM — even off-grid.

Biomedical assistant for med students, researchers, and clinicians. Answers board-exam-style questions, explains research findings, and supports clinical reasoning, all privately.

Runs on 8GB+ RAM.

Post-trained to eliminate refusal behavior on politically sensitive topics — while preserving full reasoning ability.

Built to refuse censorship: open dialogue, independent thought, and the right to answer freely.

macOS only. Needs 48GB+ RAM.

Uncensored, 4-bit OmniQuant-quantized fine-tunes of Gemma 3 1B. For users who want unrestricted conversations, roleplay, and truth-seeking without moral filters. Fast and small. iOS and macOS.

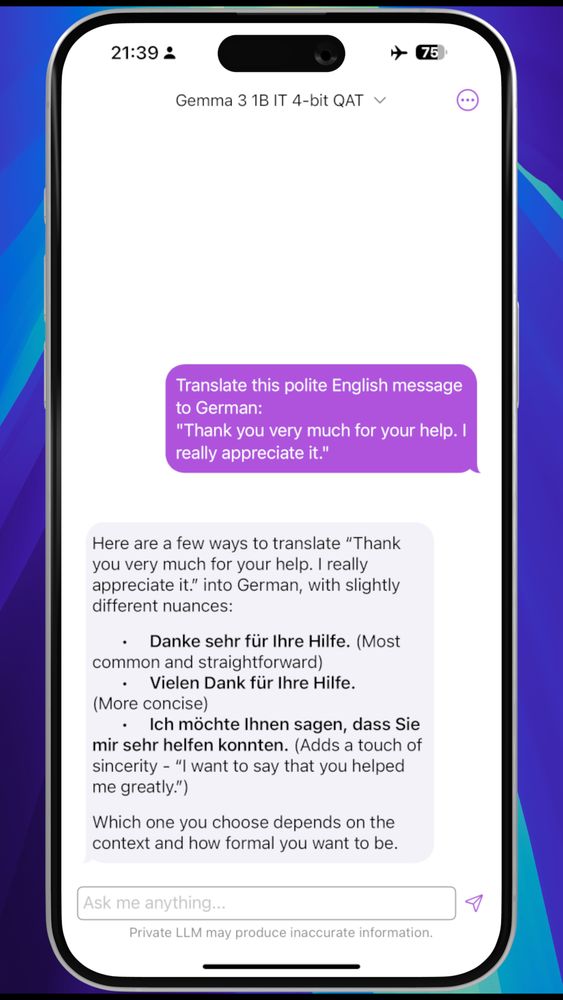

Multilingual. Full 32K context on iPhones with ≥ 6GB RAM.

Ideal for writing, Q&A, summarization — in 140+ languages.

Small enough to run on any supported iOS or Mac device.

These are currently the best uncensored LLMs that can fit in your pocket, no holds barred!

- Dolphin 3.0 Qwen 2.5 0.5B, 1.5B, 3B - Compatible with nearly all modern iPhones (iPhone 12 or newer) and Macs

- Dolphin 3.0 Llama 3.2 1B - Perfect for iPhones/iPads with 4GB+ RAM or any Apple Silicon Mac