Find more interesting details on our website & paper here:

🌐: programmingwithpixels.com

with

@wellecks.bsky.social

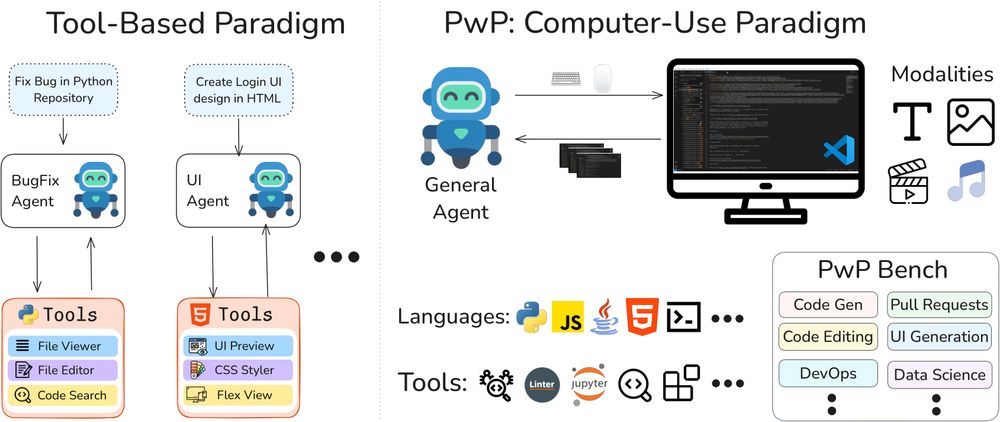

Tiny icons or complex menus can confuse the agent, and multi-step operations (e.g. a debugger workflow) remain challenging.

🧵

Results? It competes with or even surpasses tool-based agents—without any domain-specific hand-engineering.

PwP-Bench systematically tests a broad dev skillset within a single, realistic IDE setup.

🧵

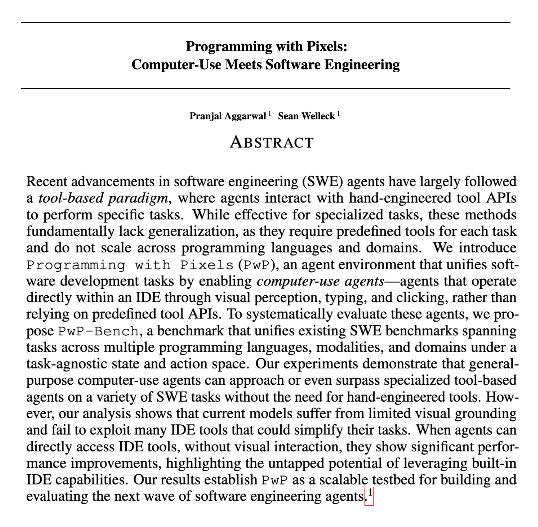

Agents see the IDE (pixel screenshots) and act by typing & clicking, so no special integrations are needed. They leverage all developer tools, just like an autonomous programmer at a keyboard!
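
A minimal sketch of that see-and-act loop, assuming a hypothetical `FakeIDE` stand-in and a scripted policy in place of a real multimodal model. None of these names come from the Programming with Pixels codebase; they only illustrate the screenshot-in, keyboard/mouse-out interface described here.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Action:
    """One primitive UI action (hypothetical schema)."""
    kind: str          # "click", "type", or "done"
    x: int = 0
    y: int = 0
    text: str = ""


@dataclass
class FakeIDE:
    """Hypothetical stand-in for a screen-controlled IDE; it only records actions."""
    log: List[Action] = field(default_factory=list)

    def screenshot(self) -> bytes:
        # A real environment would return the pixels of the IDE window.
        return f"frame-{len(self.log)}".encode()

    def apply(self, action: Action) -> None:
        self.log.append(action)


Policy = Callable[[bytes, str], Action]


def run_episode(env: FakeIDE, policy: Policy, task: str, max_steps: int = 20) -> int:
    """Observe a screenshot, choose one action, repeat until the policy says done."""
    for step in range(max_steps):
        action = policy(env.screenshot(), task)
        if action.kind == "done":
            return step
        env.apply(action)
    return max_steps


def scripted_policy(frame: bytes, task: str) -> Action:
    # Placeholder for a model call; keyed on the fake frame identifier.
    script = {
        b"frame-0": Action("click", x=40, y=120),
        b"frame-1": Action("type", text="print('hello')"),
    }
    return script.get(frame, Action("done"))
```

Swapping `scripted_policy` for a model that reads real screenshots would leave `run_episode` unchanged, which is the appeal of a single generic interface.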

🧵

✅ Seeing VS Code’s UI

✅ Typing, clicking & basic file operations

✅ Using built-in tools (debuggers, refactoring, etc.)

A simple, general-purpose agent should leverage any SWE tool, without extra engineering.

🧵

That’s limiting – they can’t easily adapt to new tasks or fully leverage complex IDEs like human developers do. In other words, their “toolbox” is too narrow.

🧵

Introducing Programming with Pixels: an SWE environment where agents control VSCode via screen perception, typing & clicking to tackle diverse tasks.

programmingwithpixels.com

🧵

Even better, AlphaVerus can improve GPT-4o or any other model without any fine-tuning!!

🧵

Our critique module solves this problem! And yes, our critique module self-improves too!

🧵

Correct translations and refinements improve future models!

Challenge? Reward Hacking!

🧵

Challenge: Data is scarce & proofs are complex!

Instead, what if they can prove code correctness?

Presenting AlphaVerus: A self-reinforcing method that automatically learns to generate correct code using inference-time search and verifier feedback.

🌐 : alphaverus.github.io
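
A rough sketch of inference-time search guided by verifier feedback, under loud assumptions: `toy_generate` and `toy_verify` are hypothetical stand-ins for a language model and a formal verifier, and the loop is a generic generate-check-refine pattern, not AlphaVerus's actual algorithm.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical interfaces: a verifier returns (ok, error message),
# a generator proposes candidates given the spec and the latest feedback.
Verifier = Callable[[str], Tuple[bool, str]]
Generator = Callable[[str, str], List[str]]


def search_with_verifier(
    spec: str, generate: Generator, verify: Verifier, max_rounds: int = 3
) -> Optional[str]:
    """Propose candidates, keep the first one that verifies, else refine on errors."""
    feedback = ""
    for _ in range(max_rounds):
        for candidate in generate(spec, feedback):
            ok, message = verify(candidate)
            if ok:
                return candidate
            feedback = message  # the latest error steers the next round
    return None


def toy_generate(spec: str, feedback: str) -> List[str]:
    # Stand-in for a model: the first guess is wrong, the refined guess is right.
    return ["x + 1"] if not feedback else ["x + 2"]


def toy_verify(candidate: str) -> Tuple[bool, str]:
    # Stand-in for a verifier: checks a single concrete case, x = 1.
    value = eval(candidate, {}, {"x": 1})
    if value == 3:
        return True, ""
    return False, f"expected 3, got {value}"
```

Here `search_with_verifier("add two", toy_generate, toy_verify)` rejects the first guess, feeds the error back, and accepts the refined candidate in the second round.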

🧵
