Pranav Goel

@pranavgoel.bsky.social

Researcher: Computational Social Science, Text as Data

On the job market in Fall 2025!

Currently a Postdoctoral Research Associate at Network Science Institute, Northeastern University

Website: pranav-goel.github.io/

June 11, 2025 at 3:39 PM

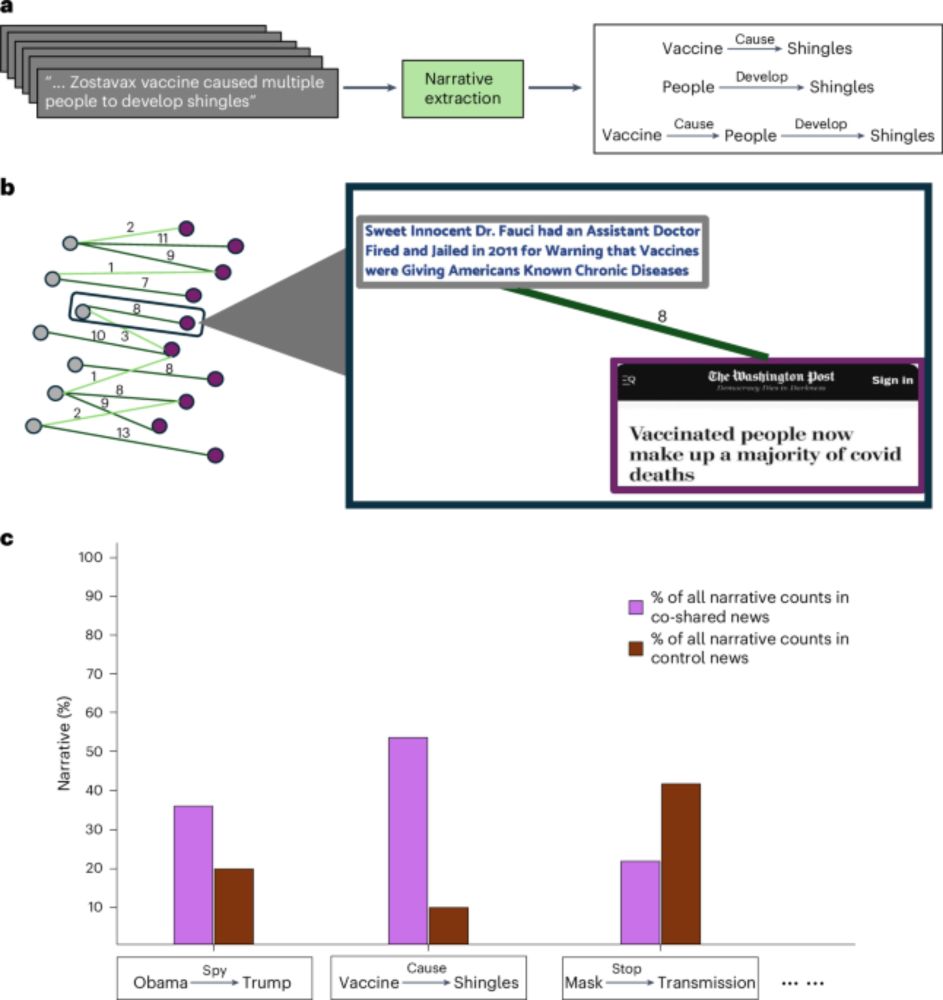

For journalists and especially headline writers: even if a discrete piece of information is true, you've got to think carefully about whether the way you're presenting it is useful for promoting narratives that aren't.

Big picture: misleading claims are both *more prevalent* and *harder to moderate* than implied in current misinformation research. It's not as simple as fact-checking false claims or downranking/blocking unreliable domains. The extent to which information (mis)informs depends on how it is used!

If you want to advance misleading narratives — such as COVID-19 vaccine skepticism — supporting information from reliable sources is more useful than similar information from unreliable sources, if you have it.

This calls for a reconsideration of what misinformation is, how widespread it is, and the extent to which it can be moderated. Our core claim is that users are *using* information to promote their identities and advance their interests, not merely consuming information for its truth value.

We find that mainstream stories with high scores on this measure are significantly more likely to contain narratives present in misinformation content. This suggests that reliable information — which has a much wider audience — can be repurposed by users promoting potentially misleading narratives.

We do this by looking at co-sharing behavior on Twitter/X. We first identify users who frequently share information from unreliable sources, and then examine the information from reliable sources that those same users also share at disproportionate rates.
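A toy sketch of the co-sharing idea, for illustration only. This is not the paper's actual measure: the data, the `min_unreliable_shares` threshold, and the ratio-to-base-rate score are all assumptions made up for this example. It flags users who share unreliable sources often, then scores each reliable-source story by how over-represented those users are among its sharers.

```python
from collections import defaultdict

# Hypothetical share log: (user, url, source_is_reliable). All values are invented.
shares = [
    ("u1", "unreliable.example/a", False),
    ("u1", "unreliable.example/b", False),
    ("u1", "reliable.example/x", True),
    ("u2", "unreliable.example/a", False),
    ("u2", "unreliable.example/c", False),
    ("u2", "reliable.example/x", True),
    ("u3", "reliable.example/x", True),
    ("u3", "reliable.example/y", True),
    ("u4", "reliable.example/y", True),
    ("u5", "reliable.example/y", True),
]


def co_sharing_scores(shares, min_unreliable_shares=2):
    """Score each reliable-source URL by how disproportionately it is
    shared by users who frequently share unreliable sources.

    The threshold and the scoring formula are illustrative assumptions.
    """
    unreliable_counts = defaultdict(int)  # user -> number of unreliable shares
    sharers = defaultdict(set)            # reliable url -> users who shared it
    all_users = set()

    for user, url, reliable in shares:
        all_users.add(user)
        if reliable:
            sharers[url].add(user)
        else:
            unreliable_counts[user] += 1

    # Step 1: users who frequently share from unreliable sources.
    flagged = {u for u, n in unreliable_counts.items() if n >= min_unreliable_shares}
    base_rate = len(flagged) / len(all_users) if all_users else 0.0

    # Step 2: for each reliable story, compare the flagged fraction of its
    # sharers against the flagged fraction of all users.
    scores = {}
    for url, users in sharers.items():
        flagged_fraction = len(users & flagged) / len(users)
        scores[url] = flagged_fraction / base_rate if base_rate else 0.0
    return scores


scores = co_sharing_scores(shares)
```

A score above 1 means a story's sharers include more frequent sharers of unreliable sources than the overall user base would predict. In the toy data, `reliable.example/x` scores above 1 and `reliable.example/y` scores 0.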

Our paper uses this dynamic — users strategically repurposing true information from reliable sources to advance misleading narratives — to move beyond conceptualizing misinformation as source reliability and measuring it by just counting sharing of / exposure to unreliable sources.

Take, for example, this headline from the Washington Post. The source is reliable and the information is, strictly speaking, true. But the people most excited to share this story wanted to advance a misleading claim: that the COVID-19 vaccine was ineffective at best.

But users who want to advance misleading claims likely *prefer* to use reliable sources when they can. They know others see reliable sources as more credible!

When thinking about online misinformation, we'd really like to identify/measure misleading claims; unreliable sources are only a convenient proxy.

In our new paper (w/ @jongreen.bsky.social, @davidlazer.bsky.social, & Philip Resnik), now up in Nature Human Behaviour (nature.com/articles/s41562-025-02223-4), we argue that this tension really speaks to a broader misconceptualization of what misinformation is and how it works.

Using co-sharing to identify use of mainstream news for promoting potentially misleading narratives - Nature Human Behaviour

Goel et al. examine why some factually correct news articles are often shared by users who also shared fake news articles on social media.

nature.com
