📝 Blog: au-clan.github.io/2025-06-19-f...

💻 Code: github.com/au-clan/FoA

cc: @akhilarora.bsky.social @larshklein.bsky.social @caglarai.bsky.social @icepfl.bsky.social @csaudk.bsky.social

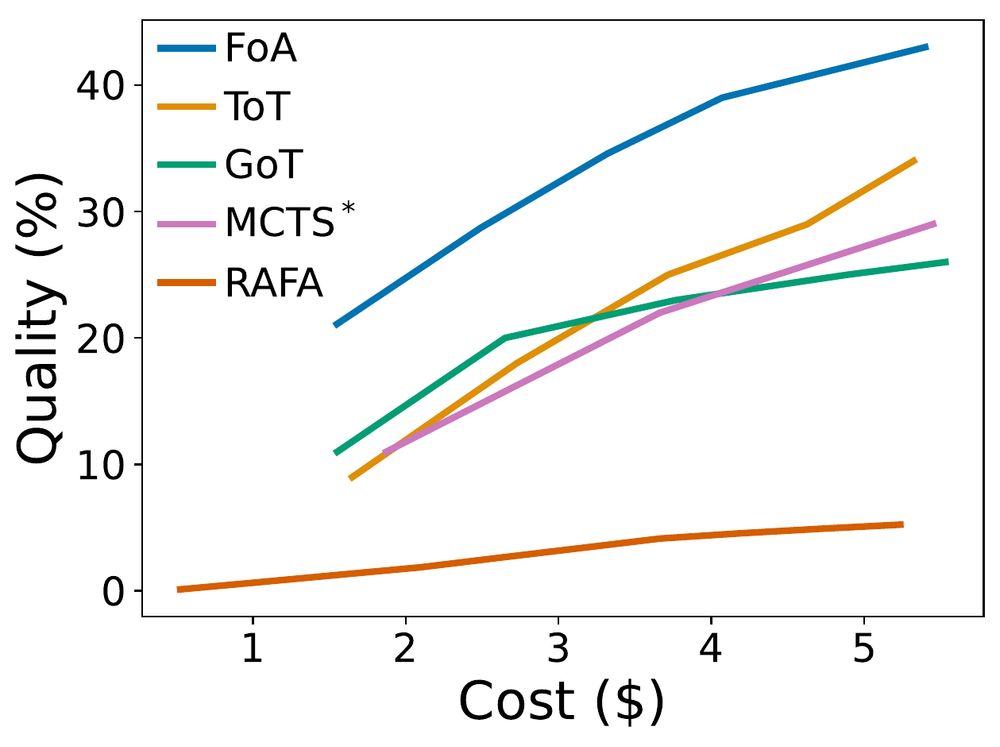

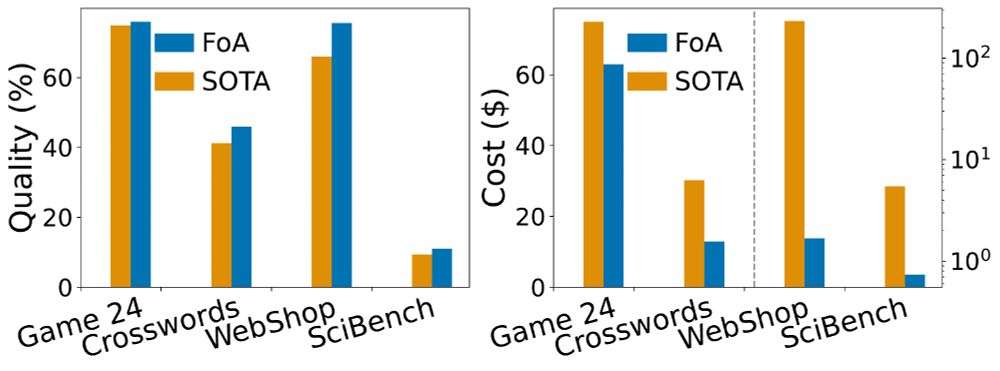

💸 Better cost-quality trade-off: Up to 70% improvement at a fraction of the compute

⚙️ Plug-and-play: Works with any prompting strategy

📏 Tunable & predictable: Control compute precisely

😁 More details 👇