TL;DR: we find that certain attention heads perform various distinct operations on the input prompt for QA!

arxiv.org/abs/2505.15807

1/

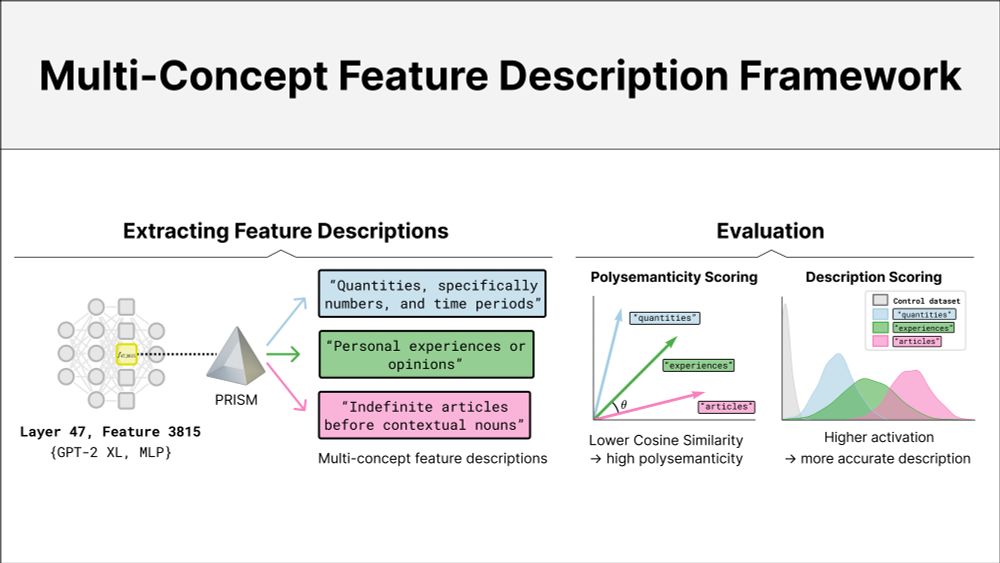

We introduce PRISM, a framework for extracting multi-concept feature descriptions to better understand polysemanticity.

📄 Capturing Polysemanticity with PRISM: A Multi-Concept Feature Description Framework

arxiv.org/abs/2506.15538

🧵 (1/7)

If you're interested in LLM post-training techniques and in how to make LLMs better "language users", read this thread introducing the "LM Playpen".

Go check this out! arxiv.org/abs/2505.15807

Thanks to my amazing co-authors @pkhdipraja.bsky.social, @reduanachtibat.bsky.social, Thomas Wiegand and Wojciech Samek!

These are fairly independent research positions that will allow the candidate to build their own profile. Deadline: June 2nd.

Details: tinyurl.com/pd-potsdam-2...

#NLProc #AI 🤖🧠

Nominations and self-nominations go here 👇

docs.google.com/forms/d/e/1F...