www.mimuw.edu.pl/~pmilos

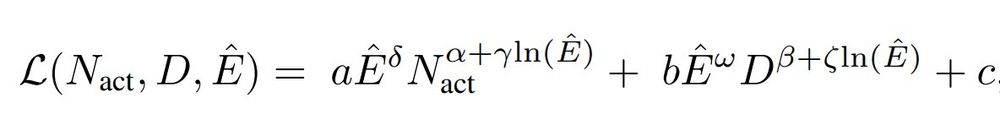

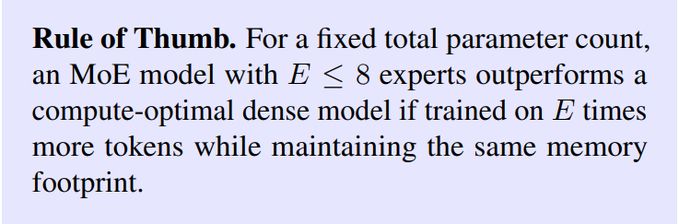

We verify this empirically, and along the way, we develop joint scaling laws for both MoE and dense models.

(for consideration by our folks in Poland)

However, I recommend chatting with my co-authors and students :) @bartoszpiotrowski.bsky.social @albertqjiang.bsky.social @michalnauman @mateuszostaszewski.bsky.social

2 papers on the main track and spotlight

BRO: Bigger, Regularized, Optimistic! 🧵

Excellent news from NeurIPS. Two papers in, including a spotlight.

1. Repurposing Language Models into Embedding Models: Finding the Compute-Optimal Recipe

2. Bigger, Regularized, Optimistic: scaling for compute and sample-efficient continuous control
