Building attention-killer AI (RWKV) from scratch @ http://wiki.rwkv.com

Also built uilicious & GPU.js (http://gpu.rocks)

Gives more context than I expected. 👍

the dataset space

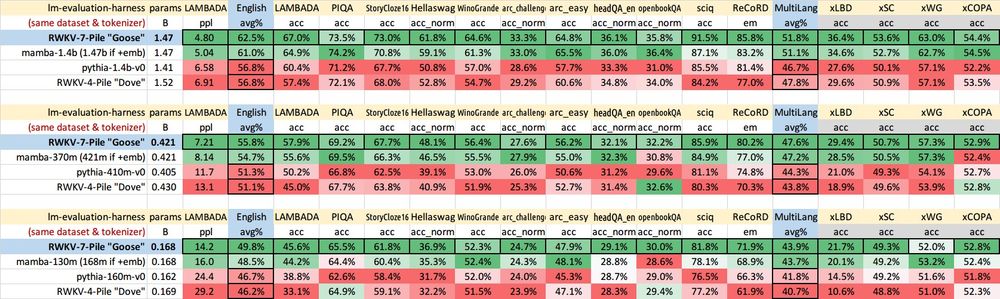

We plan to do more training on this new line of QRWKV and LLaMA-RWKV models over longer context lengths, so that they can be true transformer killers.

If you're @ NeurIPS, you can find me with an RWKV7 Goose.

We are scheduled to do conversion runs for 32B and 70B class models as well.

x.com/BlinkDL_AI/s...

For that, refer to our other models going live today, including a 37B MoE model and an updated 7B.

substack.recursal.ai/p/qrwkv6-and...

- take Qwen 32B
- freeze the feedforward layers
- replace the QKV attention layers with RWKV6 layers
- train the RWKV layers
- unfreeze the feedforward layers & train all layers
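The staged recipe above can be sketched as a toy Python model. This is a minimal illustration under stated assumptions, not the actual QRWKV training code: `Block`, `take_base_model`, `swap_attention_and_freeze_ffn`, `unfreeze_all` and `trainable_layers` are hypothetical names, no real weights or training are involved, and embeddings/output head are left out. It only tracks which sub-layers would be trainable at each stage; in a framework like PyTorch the same freezing would typically be done by calling `requires_grad_(False)` on the feedforward modules.

```python
from dataclasses import dataclass


@dataclass
class Block:
    """Toy stand-in for one decoder block: a token mixer plus a feedforward (FFN) layer."""
    mixer: str                  # hypothetical label: "qkv_attention" or "rwkv6"
    mixer_trainable: bool = True
    ffn_trainable: bool = True


def take_base_model(num_layers: int) -> list:
    # Hypothetical stand-in for loading a pretrained transformer such as Qwen 32B.
    return [Block(mixer="qkv_attention") for _ in range(num_layers)]


def swap_attention_and_freeze_ffn(blocks: list) -> list:
    # Freeze every FFN, replace QKV attention with an RWKV6 mixer,
    # and leave only the new RWKV layers trainable.
    for block in blocks:
        block.ffn_trainable = False
        block.mixer = "rwkv6"
        block.mixer_trainable = True
    return blocks


def unfreeze_all(blocks: list) -> list:
    # Final stage: unfreeze the feedforward layers so every layer trains together.
    for block in blocks:
        block.ffn_trainable = True
        block.mixer_trainable = True
    return blocks


def trainable_layers(blocks: list) -> list:
    # Names of the sub-layers an optimizer would update at the current stage.
    names = []
    for i, block in enumerate(blocks):
        if block.mixer_trainable:
            names.append(f"layer{i}.{block.mixer}")
        if block.ffn_trainable:
            names.append(f"layer{i}.ffn")
    return names


model = take_base_model(num_layers=2)
swap_attention_and_freeze_ffn(model)
print(trainable_layers(model))  # ['layer0.rwkv6', 'layer1.rwkv6']
# ... train the RWKV layers here (not shown) ...
unfreeze_all(model)
print(trainable_layers(model))  # ['layer0.rwkv6', 'layer0.ffn', 'layer1.rwkv6', 'layer1.ffn']
```

Freezing the feedforward layers first means the newly initialized RWKV layers adapt to the pretrained features before the whole network is allowed to move.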

You can try the models on Featherless AI today:

featherless.ai/models/recur...

Our 72B model is already "on its way", expected before the end of the month.

Once we cross that line, RWKV will be at a scale that meets most enterprise needs.

Release Details:

substack.recursal.ai/p/q-rwkv-6-3...

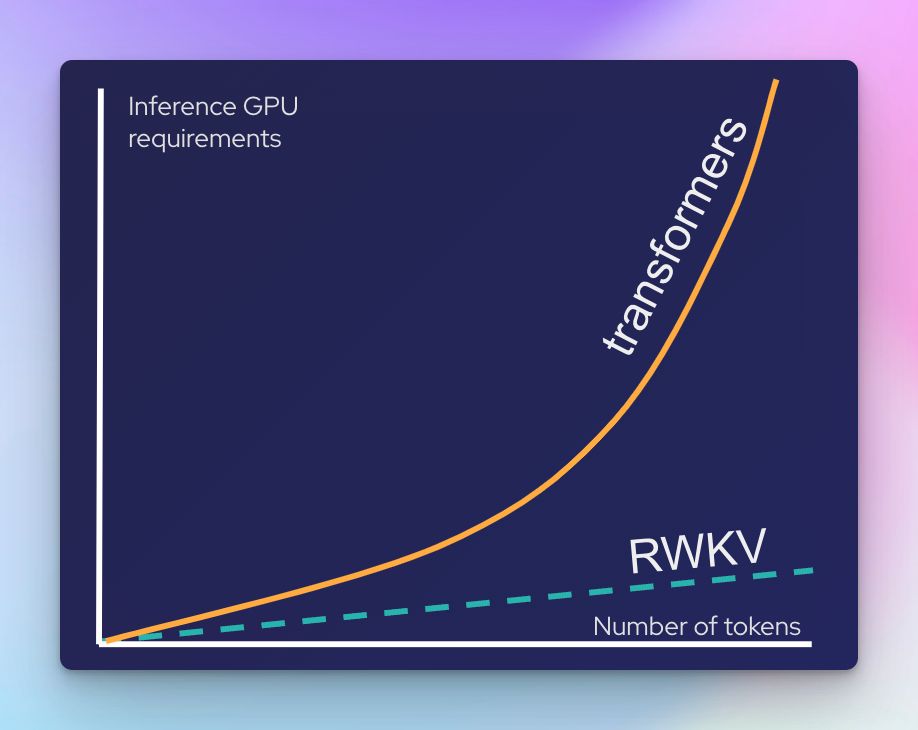

With the move to inference-time thinking (o1-style reasoning, chain-of-thought, etc.), there is an increasing need for scalable inference over longer context lengths.

The quadratic inference-cost scaling of transformer models is ill-suited to such long contexts.
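A back-of-the-envelope Python sketch of that scaling argument, under simplifying assumptions: it counts only query-key interactions for causal attention versus one fixed-size state update per token for a recurrent model like RWKV, and ignores head dimension, feedforward cost and other constant factors. The function names are illustrative, not from any library.

```python
def attention_interactions(n_tokens: int) -> int:
    # Causal attention: token t (1-based) scores against t tokens,
    # so the total is 1 + 2 + ... + n = n(n+1)/2, i.e. quadratic in n.
    return n_tokens * (n_tokens + 1) // 2


def recurrent_updates(n_tokens: int) -> int:
    # A recurrent model such as RWKV does one fixed-size state update per token,
    # so the total is linear in n.
    return n_tokens


for n in (1_000, 10_000, 100_000):
    a = attention_interactions(n)
    r = recurrent_updates(n)
    print(f"{n:,} tokens: {a:,} attention interactions vs {r:,} state updates ({a / r:,.1f}x)")
```

The ratio works out to (n+1)/2: about 500.5x at 1,000 tokens and 50,000.5x at 100,000 tokens, which is why long reasoning traces hurt transformers far more than fixed-state models. Memory follows a similar pattern: a transformer's KV cache grows with every token, while a recurrent state stays the same size.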

substack.recursal.ai/p/q-rwkv-6-3...

Considering it's 3AM where I am,

I will be napping first before adding more details and doing a more formal tweet thread.

Then the posters themselves, so that’s fair haha 😅

Poutine and beer at a bar

Discord Quebec gang: Toronto Poutine ain’t real Poutine 🤣

(Will be back in SF tomorrow)
