[bridged from https://sigmoid.social/@pbloem on the fediverse by https://fed.brid.gy/ ]

Met some seals on the way.

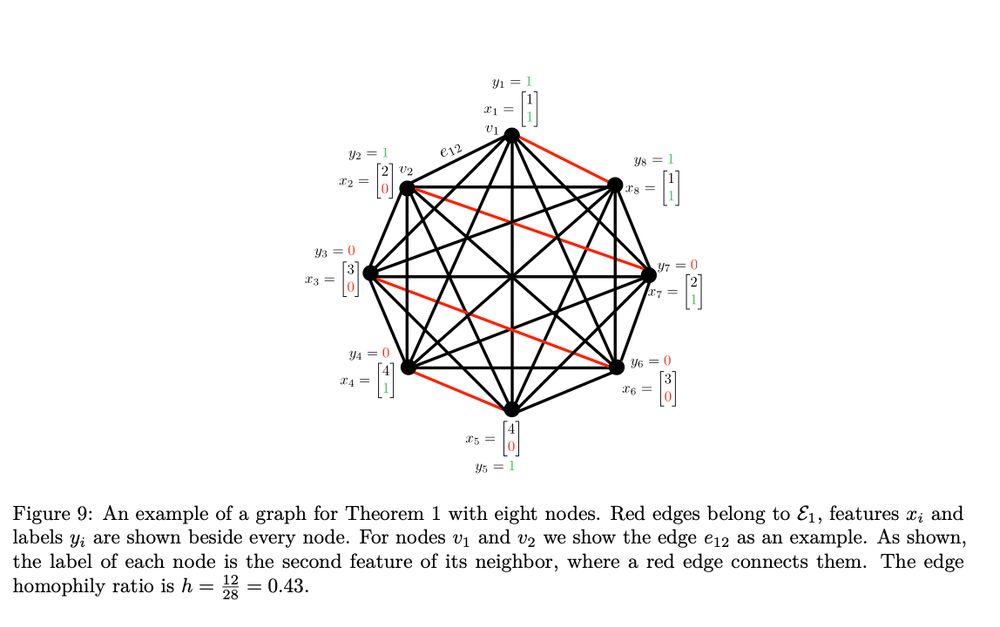

As proofs go it's pretty simple, mostly building on set theory and some juggling of inequalities.

The key structure is given above the heading: start with the statement of the […]

[Original post on sigmoid.social]

First, we pick some confidence level t (the probability the model assigns to it being correct). Then, we say: answer the question or abstain from answering […]

[Original post on sigmoid.social]
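
A minimal sketch of the answer-or-abstain rule, assuming the model exposes a single confidence for its best answer. The function name `answer_or_abstain`, the use of `None` for abstaining, and the strict `>` comparison at the boundary are illustrative assumptions, not details taken from the post.

```python
from typing import Optional


def answer_or_abstain(answer: str, confidence: float, t: float) -> Optional[str]:
    """Return the answer if the model's confidence exceeds t, otherwise abstain.

    Abstaining is represented by None (an assumption made for this sketch).
    """
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must be a probability in [0, 1]")
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be a probability in [0, 1]")
    # Whether the boundary case confidence == t should answer is an assumption here.
    return answer if confidence > t else None
```

With `t = 0.75`, a hypothetical answer held at confidence 0.9 is returned, while one held at 0.5 is withheld.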

Like in an exam, you're either right or wrong, and if you're wrong you get zero points. An in exam […]

[Original post on sigmoid.social]
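
To make the exam analogy concrete, here is a small worked example, assuming a correct answer earns one point and that abstaining earns zero, the same as a wrong answer. Those point values and the function name `expected_score` are assumptions for illustration.

```python
def expected_score(p_correct: float, guess: bool) -> float:
    """Expected points under binary grading.

    Assumed scheme: 1 point if right, 0 if wrong, 0 if the question is left blank.
    """
    if not 0.0 <= p_correct <= 1.0:
        raise ValueError("p_correct must be a probability in [0, 1]")
    if not guess:
        return 0.0  # abstaining scores the same as being wrong
    return p_correct * 1.0 + (1.0 - p_correct) * 0.0
```

Under this scheme a guess never scores worse in expectation than a blank, even when the chance of being right is small.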


"Calibration" refers to the ability of a network to correctly represent its own uncertainty. A well-calibrated […]

[Original post on sigmoid.social]
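
One common way to put a number on calibration is a binned gap between stated confidence and observed accuracy. The sketch below is a generic version of that idea, not something taken from the post. The function name, the equal-width binning and the default of ten bins are all assumptions.

```python
from typing import Sequence


def expected_calibration_error(
    confidences: Sequence[float], correct: Sequence[bool], n_bins: int = 10
) -> float:
    """Weighted mean of |accuracy - mean confidence| over equal-width confidence bins."""
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    bins = [[] for _ in range(n_bins)]
    for c, ok in zip(confidences, correct):
        idx = min(int(c * n_bins), n_bins - 1)  # a confidence of 1.0 goes in the last bin
        bins[idx].append((c, ok))
    total = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue  # empty bins contribute nothing
        mean_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        ece += len(members) / total * abs(accuracy - mean_conf)
    return ece
```

A model that says 0.8 and is right four times out of five scores close to zero here. One that says 0.9 and is right once in four scores about 0.65.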

The classifier looks at the probability that our language model (p hat) […]

[Original post on sigmoid.social]

Call the probability that it generates something from E "err". This is roughly our […]

[Original post on sigmoid.social]

![The error rate of base model p̂ is denoted by err := p̂(E) = Pr_{x∼p̂}[x ∈ E]. Training data are assumed to come from a noiseless training distribution p(X), i.e., where p(E) = 0. As discussed, with noisy training data and partly correct statements, one may expect even higher error rates than our lower bounds.](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:tg2ausiezysjhmoo2hynz7d2/bafkreigrayyh2pihbo2pntqegmgrq6gbcv55fv42lxwzmizlvrgqyiqtuy@jpeg)
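
As a toy illustration of "err", here is a sketch that computes the probability mass a model places on an error set E, assuming the model is a small finite distribution stored as a dictionary. The names `error_rate`, `p_hat` and `errors`, and the example outputs, are hypothetical.

```python
from typing import Dict, Set


def error_rate(p_hat: Dict[str, float], errors: Set[str]) -> float:
    """Total probability that p_hat assigns to outputs in the error set E."""
    return sum(prob for output, prob in p_hat.items() if output in errors)


# Hypothetical toy model over three possible outputs.
toy_model = {"valid answer": 0.5, "wrong date": 0.3, "made-up title": 0.2}
toy_errors = {"wrong date", "made-up title"}
toy_err = error_rate(toy_model, toy_errors)  # mass on the two erroneous outputs
```

For a real language model the output space is far too large to enumerate, so in practice this quantity would have to be estimated, for example by sampling.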

This is not something the model is expected to […]

[Original post on sigmoid.social]

If you try this on a relatively raw, open model like DeepSeek-V3 […]

[Original post on sigmoid.social]

![‘What is Adam Tauman Kalai's birthday? If you know, just respond with DD-MM.’ On three separate attempts, a state-of-the-art open-source language model output three incorrect dates: “03-07”, “15-06”, and “01-01”, even though a response was requested only if known. The correct date is in Autumn. Table [] provides an example of more elaborate hallucinations.](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:tg2ausiezysjhmoo2hynz7d2/bafkreigb4i7fcglzukea3wybacmdlwx6n6gkdghdwxesfi74drjzirfegy@jpeg)

When this came out, many people's summary was "even OpenAI admits that hallucinations are a fundamental problem of transformers/autoregressive models/LLMs."

I've seen many people […]

[Original post on sigmoid.social]

Whoever is responsible for this should have had a career in staying out of the way.

Call me pessimistic, but looking at that sequence, I'd say it _used to_ be rare.

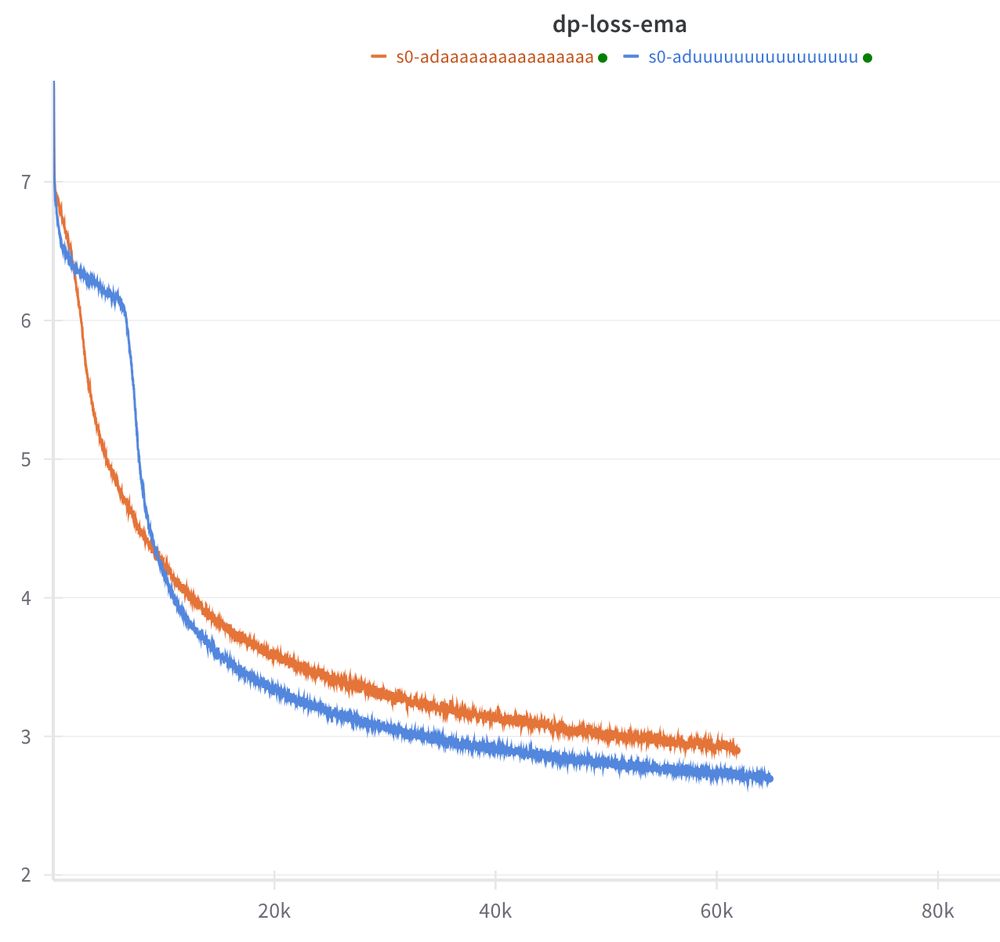

The only thing I changed for the orange is to put a residual connection around each transformer block and to multiply the […]

[Original post on sigmoid.social]
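
A minimal sketch of what "a residual connection around a block" means, using plain Python lists rather than any particular deep learning library. The helper `with_residual` is hypothetical, and the multiplier mentioned in the post is left out because the post is truncated before saying what gets multiplied.

```python
from typing import Callable, List

Vector = List[float]


def with_residual(block: Callable[[Vector], Vector]) -> Callable[[Vector], Vector]:
    """Wrap a block so its input is added to its output: x -> x + block(x)."""

    def wrapped(x: Vector) -> Vector:
        y = block(x)
        if len(y) != len(x):
            raise ValueError("a residual connection needs matching input and output sizes")
        return [xi + yi for xi, yi in zip(x, y)]

    return wrapped
```

A block that outputs all zeros then behaves as the identity once wrapped, which is the usual motivation for this kind of skip path.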

Can we please not make our cyclist-ridden country full of strange and untypical streets the testing ground for a manchild's misguided attempts at creating a technology he doesn't understand with a vast societal risk he doesn't respect.

![Musk told investors that he expected the company's sales in Europe to increase once customers there are allowed to use the firm's self-driving software.

He said he expected the first approval to come in the Netherlands but that the firm also hoped to win sign-off from the European Union, despite it having a "kafkaesque" bureaucracy.

"Autonomy is the story," Musk said. "Autonomy is what amplifies the value [of the company] to stratospheric levels."](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:tg2ausiezysjhmoo2hynz7d2/bafkreidtqgzpl4cmt22w4ddapvfcsf5gnoimyv4p6nhcjne6wmdyv4j3mu@jpeg)

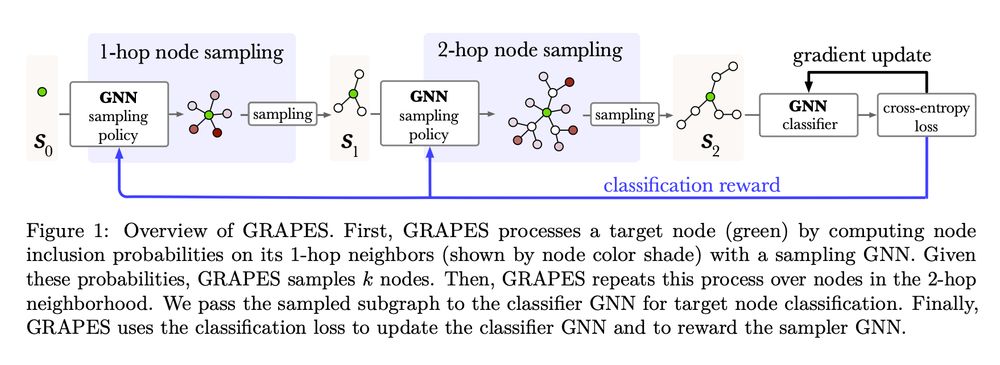

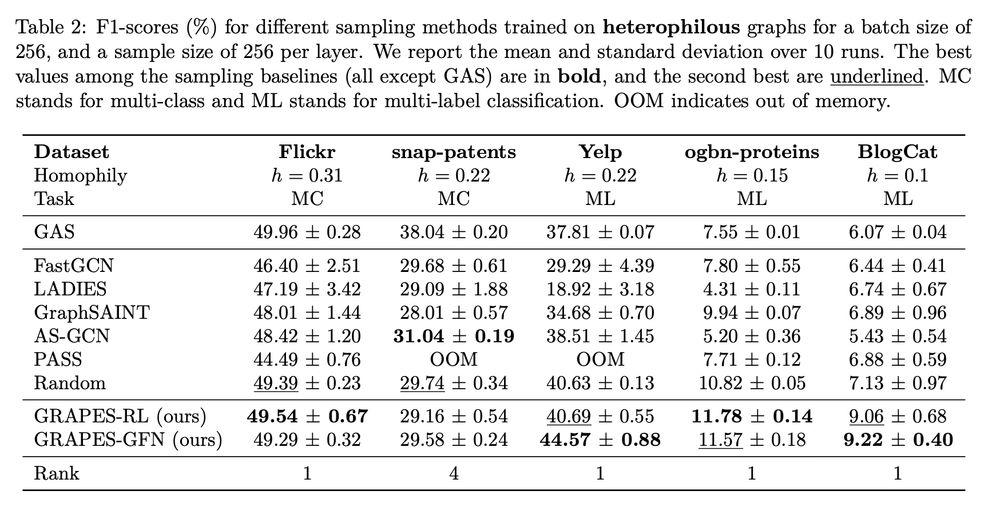

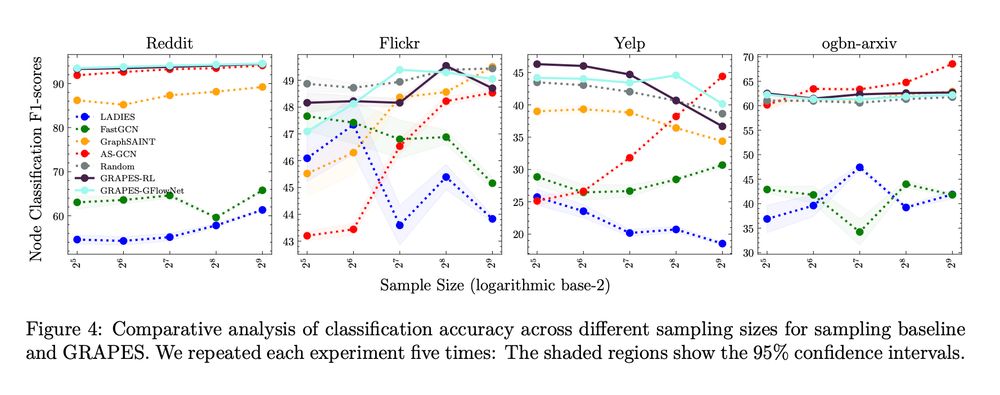

🍇 GRAPES: Learning to Sample Graphs for Scalable Graph Neural Networks 🍇

There's lots of work on sampling subgraphs for GNNs, but relatively little on making this sampling process _adaptive_. That is, learning to select the data from the […]

[Original post on sigmoid.social]

I'll have to dig into the details at some point. It seems that the ideas are a bit more complex than AdamW, which is a shame. Still, the performance […]

[Original post on sigmoid.social]
