Paul Soulos

@paulsoulos.bsky.social

Computational Cognitive Science @JhuCogsci researching neurosymbolic methods. Previously wearable engineering @fitbit and @Google.

While both robotics and language modeling can be cast as next-token prediction, the token distribution for computer agents seems closer to abstract motor programs (robotics) than to language. This puts computer use on the trajectory of robotics, which is slower than that of LLMs. 2/2

May 30, 2025 at 3:21 PM

📜 Check out our paper for all of the details and results. openreview.net/forum?id=fOQ....

Compositional Generalization Across Distributional Shifts with...

Neural networks continue to struggle with compositional generalization, and this issue is exacerbated by a lack of massive pre-training. One successful approach for developing neural systems which...

openreview.net

December 9, 2024 at 3:06 PM

📅 You can find me at the following presentations:

- Poster Session 1 East #4009 on Wednesday, December 11, from 11a-2p PST.

- System 2 Reasoning Workshop Spotlight Oral Talk on Sunday, December 15, from 9:30-10a PST.

- System 2 Reasoning Workshop poster sessions on Sunday, December 15.

December 9, 2024 at 3:06 PM

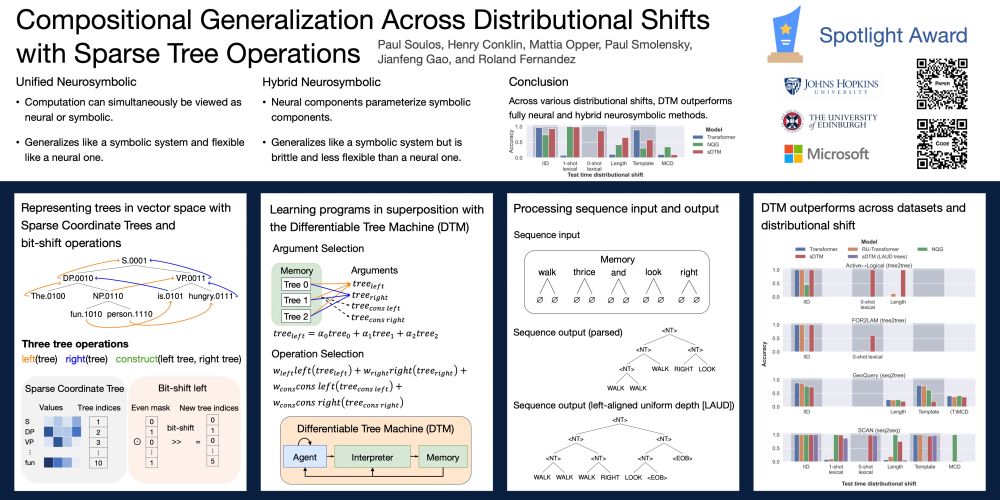

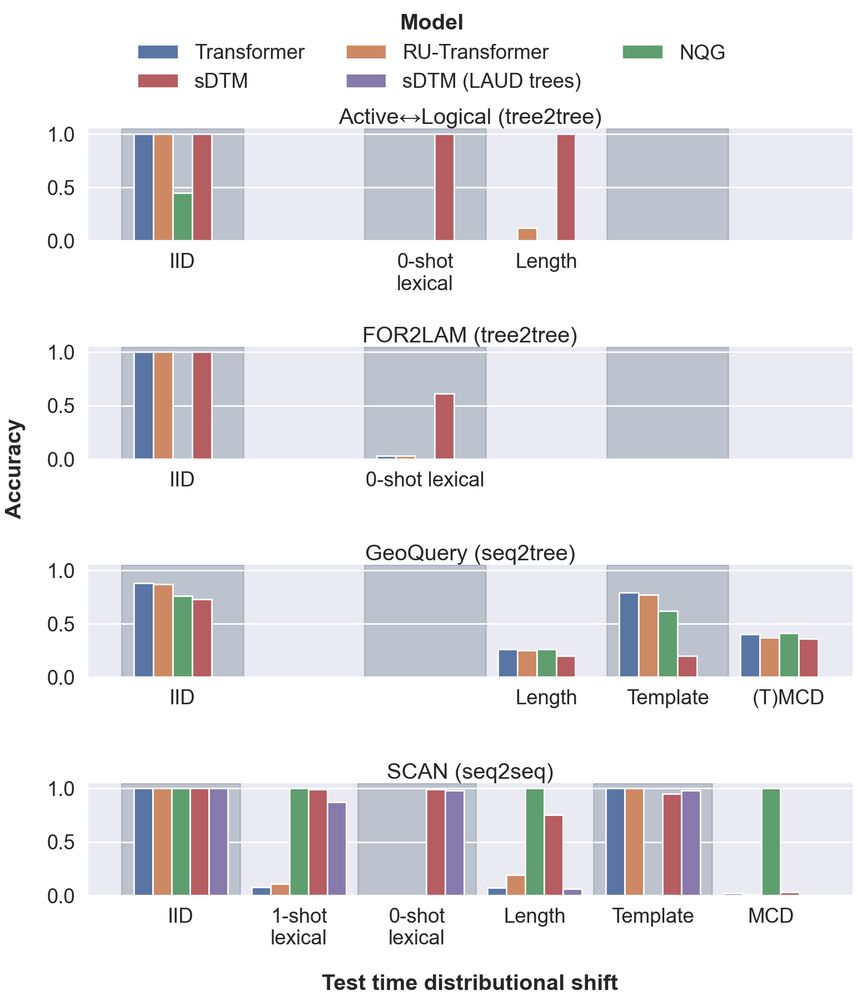

📈 DTM and sDTM operate on trees, and we introduce a very simple, dataset-independent method to embed sequence inputs and outputs as trees. Across a variety of datasets and test-time distributional shifts, sDTM outperforms fully neural and hybrid neurosymbolic models.

December 9, 2024 at 3:06 PM

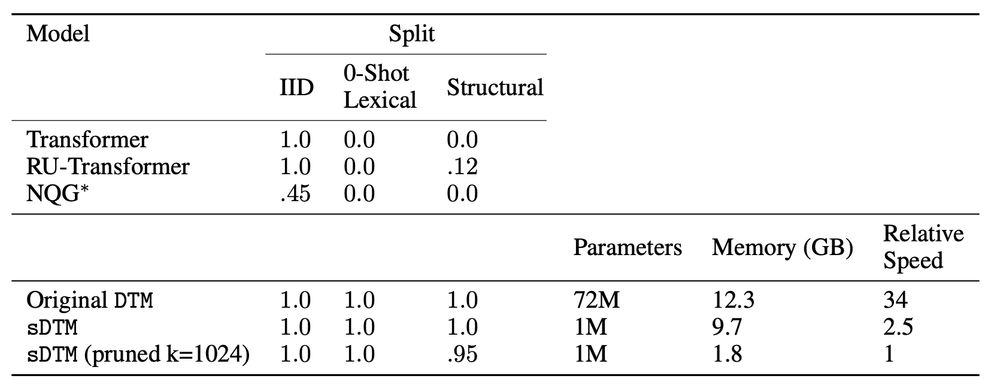

🌳 We introduce the Sparse Differentiable Tree Machine (sDTM), an extension of DTM that introduces a new way to represent trees in vector space. Sparse Coordinate Trees (SCT) reduce the parameter count and memory usage over the previous DTM by an order of magnitude and lead to a 30x speedup!

December 9, 2024 at 3:06 PM

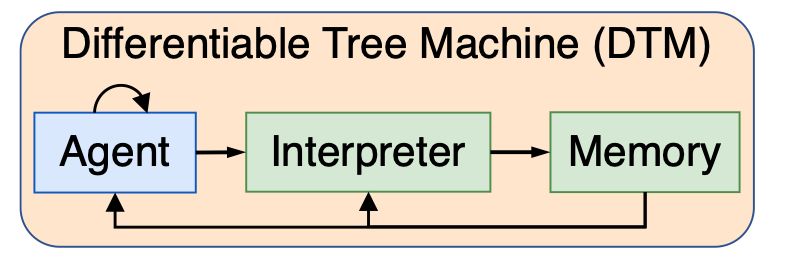

Our previous work introducing the Differentiable Tree Machine (DTM) is an example of a unified neurosymbolic system where trees are represented and operated over in vector space.

December 9, 2024 at 3:06 PM

Hybrid systems use neural networks to parameterize symbolic components and can struggle with the same pitfalls as fully symbolic systems. In Unified Neurosymbolic systems, operations can simultaneously be viewed as either neural or symbolic, and this provides a fully neural path through the network.

December 9, 2024 at 3:06 PM

🧠 Neural networks struggle with compositionality, and symbolic methods struggle with flexibility and scalability. Neurosymbolic methods promise to combine the benefits of both methods, but there is a distinction between *hybrid* neurosymbolic methods and *unified* neurosymbolic methods.

December 9, 2024 at 3:06 PM

Hi Melanie, I'll be there presenting some neurosymbolic work at the main conference and the System 2 workshop, as well as some other early work on Transformers and Computational Linguistics at the System 2 workshop!

openreview.net/forum?id=fOQ...

openreview.net/forum?id=6Pj...

Compositional Generalization Across Distributional Shifts with...

Neural networks continue to struggle with compositional generalization, and this issue is exacerbated by a lack of massive pre-training. One successful approach for developing neural systems which...

openreview.net

December 1, 2024 at 2:44 PM

Want to learn more? Speak to the wonderful Kate McCurdy at #EMNLP2024 poster session 2, poster 1053, tomorrow from 11-12:30p ET.

Check out the paper if you want the full details! aclanthology.org/2024.emnlp-m...

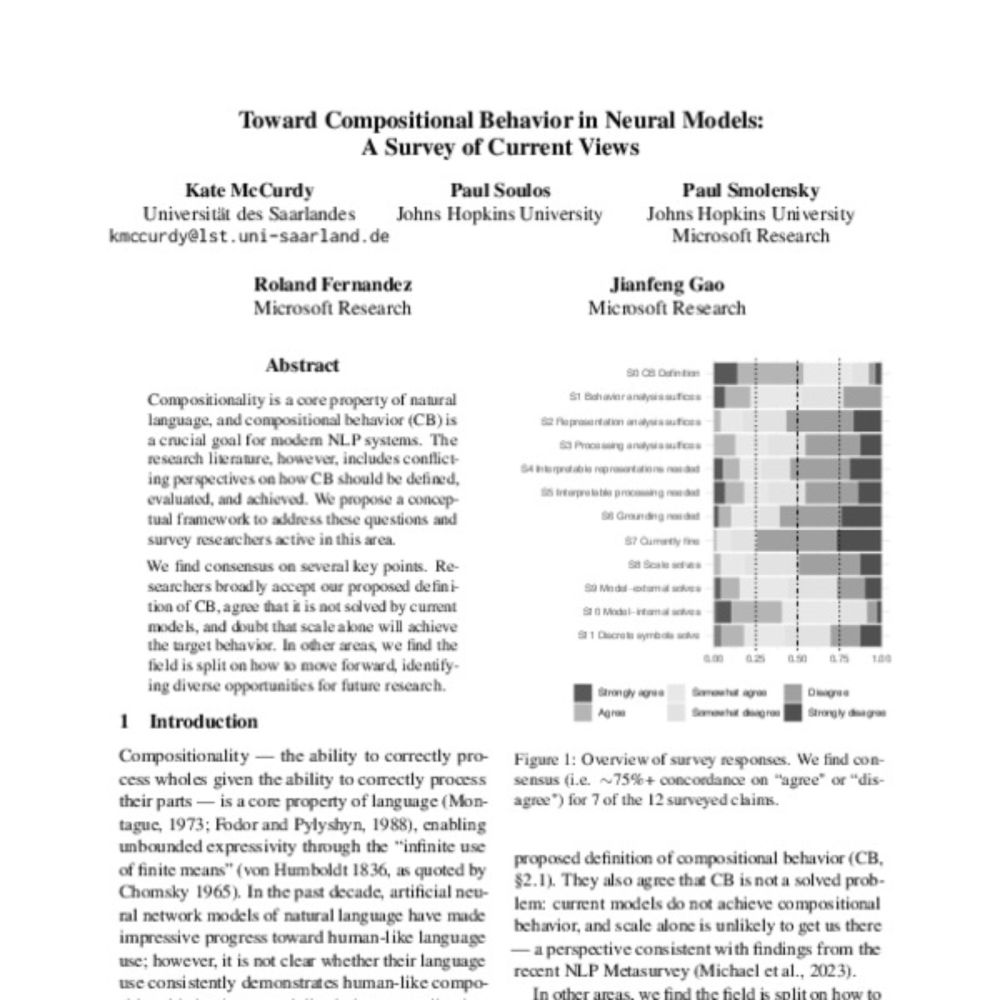

Toward Compositional Behavior in Neural Models: A Survey of Current Views

Kate McCurdy, Paul Soulos, Paul Smolensky, Roland Fernandez, Jianfeng Gao. Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing. 2024.

aclanthology.org

November 11, 2024 at 8:40 PM

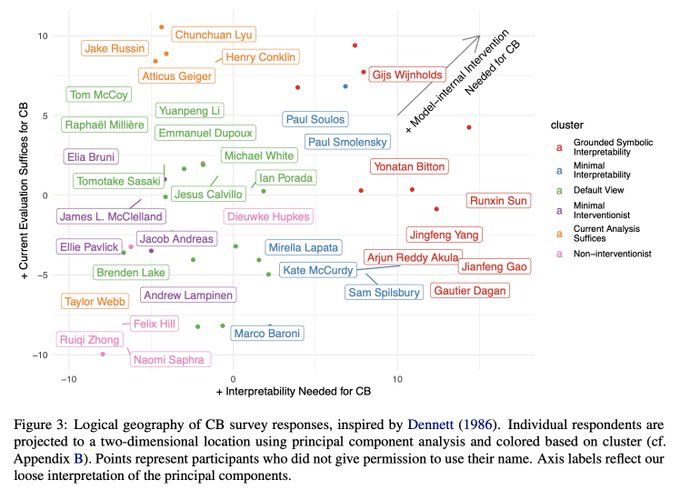

Researchers are split on HOW to achieve compositional behavior. Some propose data interventions, others argue we need entirely new model architectures, and some suggest we need to integrate symbolic paradigms.

November 11, 2024 at 8:40 PM

Key finding: ~75% of researchers agree that CURRENT neural models do NOT demonstrate true compositional behavior. Scale alone won't solve this - we need fundamental breakthroughs.

November 11, 2024 at 8:40 PM

We surveyed 79 top AI researchers about compositional behavior. Our goal? Map out the field's consensus and disagreements on how neural models process language to illuminate promising paths forward. Inspired by Dennett’s logical geography, we cluster participants by responses 🗺️

November 11, 2024 at 8:40 PM

Compositionality is fundamental to language: the ability to understand complex expressions by combining simpler parts. But do current AI models REALLY understand this? Spoiler: Most researchers say NO.

November 11, 2024 at 8:40 PM

Besides being ergonomically beneficial, a split keyboard can prevent this from happening!

September 19, 2024 at 7:46 AM
