Patrick Liu

@patrickpliu.bsky.social

Columbia Political Science | PhD Student

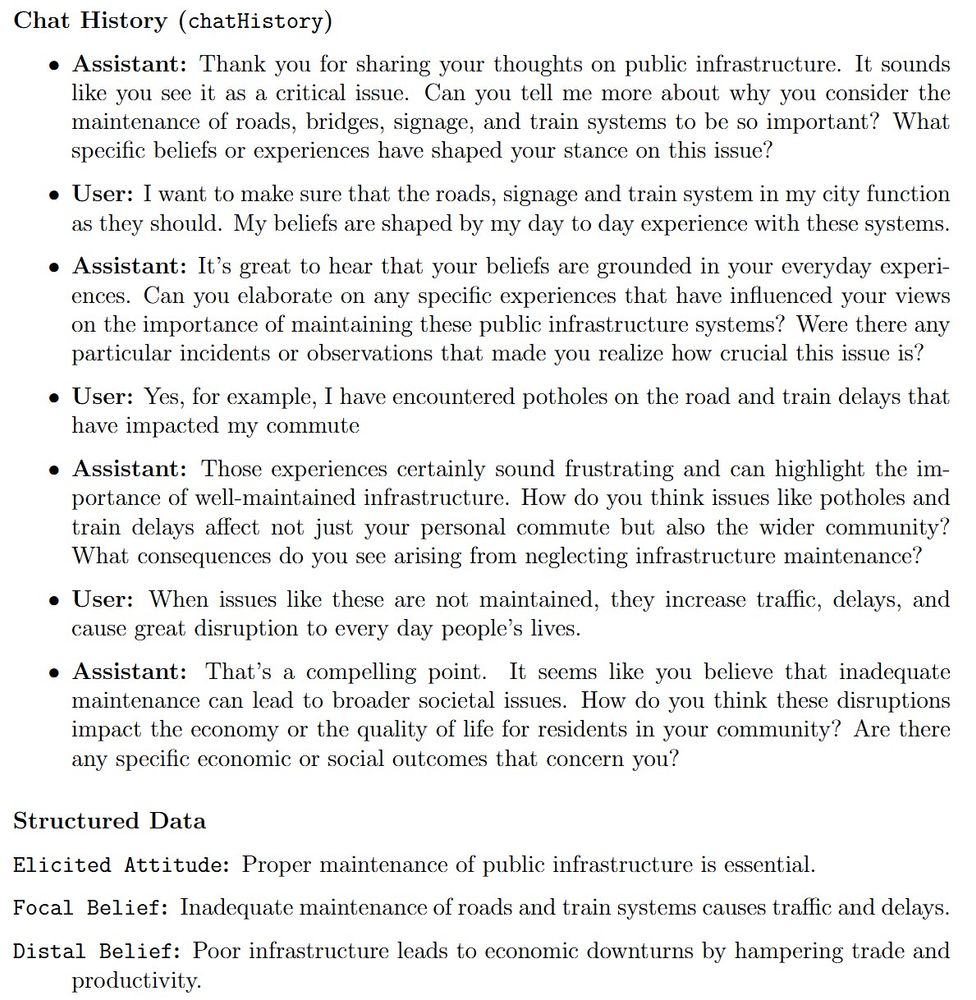

Ex: Respondent said they care about public infrastructure.

In the same wave, they held the following convo with an AI chatbot. After GPT synthesized a summary attitude, focal belief, and distal belief, they saw treatment/placebo text and answered pre- and post-treatment Qs.

April 2, 2025 at 1:04 PM

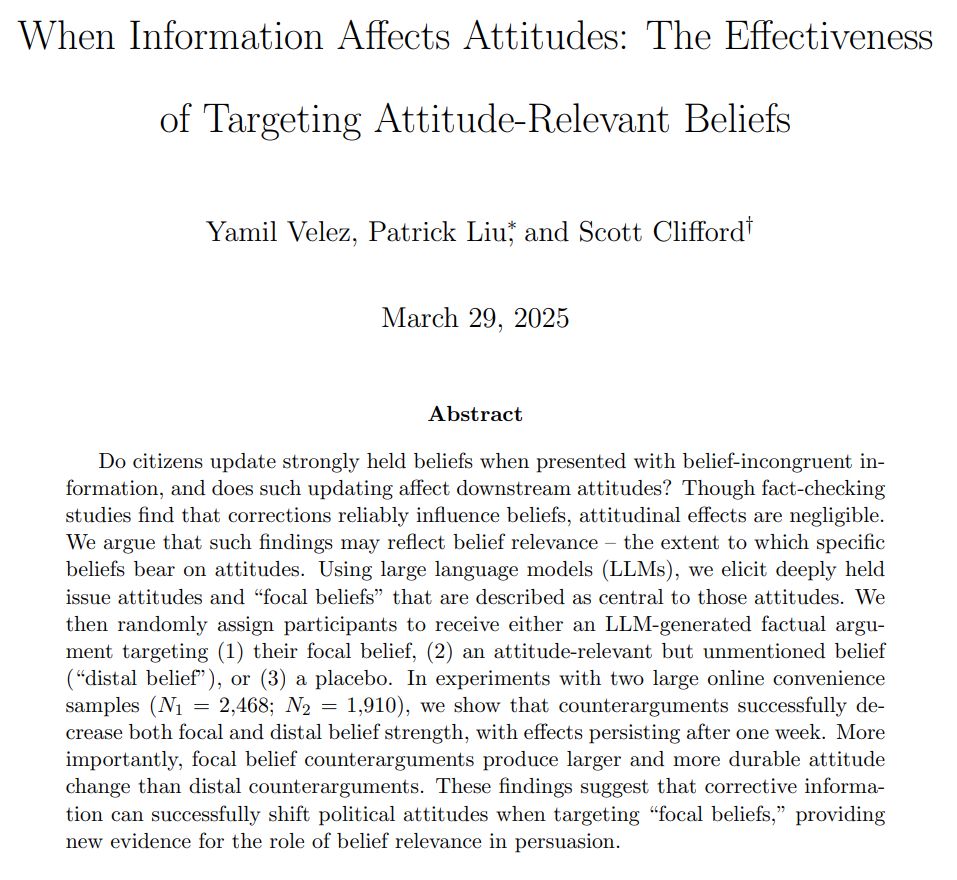

🧵 Why do facts often change beliefs but not attitudes?

In a new WP with @yamilrvelez.bsky.social and @scottclifford.bsky.social, we caution against interpreting this as rigidity or motivated reasoning. Often, the beliefs *relevant* to people’s attitudes are not what researchers expect.

April 2, 2025 at 1:04 PM