Paper pentagon? Tie a simple overhand knot in a long strip of paper, keep it flat, and cut off the ends.

Wow. Life-changing trick 😊

I need to look into this clustering method called Gaussian Mixture Models
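
The post doesn't say how the clustering would be done. As a minimal, stdlib-only sketch (assuming 1-D frame durations in ms and a fixed, hypothetical number of components `k`), expectation-maximization fits a Gaussian mixture like this. In practice a library implementation such as scikit-learn's `GaussianMixture` would be the more likely choice.

```python
import math
import random
from typing import List, Tuple


def normal_pdf(x: float, mean: float, var: float) -> float:
    """Density of a 1-D Gaussian with the given mean and variance."""
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def fit_gmm_1d(
    data: List[float], k: int = 2, iterations: int = 100, min_var: float = 1e-6
) -> List[Tuple[float, float, float]]:
    """Fit a k-component 1-D Gaussian mixture with EM.

    Returns (weight, mean, variance) per component, sorted by mean.
    """
    xs = sorted(data)
    n = len(xs)
    # Start means at evenly spaced quantiles, with a shared variance and equal weights.
    means = [xs[(2 * i + 1) * n // (2 * k)] for i in range(k)]
    overall_mean = sum(xs) / n
    overall_var = max(sum((x - overall_mean) ** 2 for x in xs) / n, min_var)
    variances = [overall_var] * k
    weights = [1.0 / k] * k

    for _ in range(iterations):
        # E-step: how much each component "explains" each frame.
        resp = []
        for x in xs:
            dens = [w * normal_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
            total = sum(dens) or 1e-300  # avoid dividing by zero on underflow
            resp.append([d / total for d in dens])
        # M-step: re-estimate each component's weight, mean and variance.
        for j in range(k):
            nj = sum(r[j] for r in resp)
            if nj == 0:
                continue
            weights[j] = nj / n
            means[j] = sum(r[j] * x for r, x in zip(resp, xs)) / nj
            variances[j] = max(
                sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, xs)) / nj, min_var
            )
    return sorted(zip(weights, means, variances), key=lambda c: c[1])


# Hypothetical frame durations: many cheap "move" frames, fewer "redraw" frames.
rng = random.Random(0)
frames = [rng.gauss(2.0, 0.3) for _ in range(300)] + [rng.gauss(8.0, 1.0) for _ in range(100)]
for weight, mean, var in fit_gmm_1d(frames, k=2):
    print(f"weight={weight:.2f} mean={mean:.2f} ms sd={math.sqrt(var):.2f} ms")
```

Each fitted component is a candidate cluster, reported as its share of frames, its mean and its standard deviation. The data is made up; choosing `k` for real traces would need a criterion such as BIC, which this sketch leaves out.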

Why? Some frames just move already-drawn content by a few px; others have to draw a whole new item.

Saying "the P50 shifted from 6 ms to 4.6 ms" hides these clusters.
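
A made-up example of how a single P50 can hide clusters: below, "move" frames take 2 ms and "redraw" frames take 6 ms both before and after, and only their proportion changes. The `percentile` helper uses linear interpolation, one common convention that isn't necessarily what Macrobenchmark uses, and the 4 ms split threshold is arbitrary.

```python
from typing import Dict, List


def percentile(values: List[float], p: float) -> float:
    """Percentile with linear interpolation between closest ranks (0 <= p <= 100)."""
    xs = sorted(values)
    rank = (len(xs) - 1) * p / 100
    lo = int(rank)
    hi = min(lo + 1, len(xs) - 1)
    return xs[lo] + (xs[hi] - xs[lo]) * (rank - lo)


def cluster_summary(values: List[float], threshold_ms: float) -> Dict[str, float]:
    """P50 plus a naive fast/slow split around a hypothetical threshold."""
    fast = [v for v in values if v < threshold_ms]
    slow = [v for v in values if v >= threshold_ms]
    return {
        "p50": percentile(values, 50),
        "fast_share": len(fast) / len(values),
        "fast_mean": sum(fast) / len(fast) if fast else float("nan"),
        "slow_mean": sum(slow) / len(slow) if slow else float("nan"),
    }


# Hypothetical data: identical clusters before and after, only the mix changes.
before = [2.0] * 40 + [6.0] * 60
after = [2.0] * 60 + [6.0] * 40

print(cluster_summary(before, threshold_ms=4.0))
print(cluster_summary(after, threshold_ms=4.0))
```

Here P50 drops from 6 ms to 2 ms although neither kind of frame got any faster: the per-cluster means stay at 2 ms and 6 ms.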

First, maybe your test sucks.

Here, both "before" & "after" show a slow 1st frame (starting the activity) and a slow 40th frame (loading images).

That test isn't correctly focused on scrolling frames; it also includes the initial loading frames.

cc @rahulrav.com

- I'm guessing you picked 100 bins as the default, with (max-min) / bin_count as the bucket width. That's not always a good choice. Try other rules, e.g. en.wikipedia.org/wiki/Freedma... ?

- The drag & drop area should be the entire screen (if you miss the rectangle, Chrome just opens your JSON file).

- I loaded a file and it showed a nice chart, but then the bottom of the page seems to be trying to load something else (I see a loader) and nothing ever loads.
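
On the bin-width point: one well-known alternative to a fixed bin count is the Freedman-Diaconis rule, which sets the bin width to 2 * IQR / n^(1/3), so a few very slow frames stretching (max-min) don't dictate the width. A stdlib-only sketch; the function names are hypothetical and not taken from the tool being reviewed.

```python
import math
from typing import List


def quantile(xs_sorted: List[float], q: float) -> float:
    """Linear-interpolation quantile of already-sorted data, 0 <= q <= 1."""
    pos = (len(xs_sorted) - 1) * q
    lo = int(pos)
    hi = min(lo + 1, len(xs_sorted) - 1)
    return xs_sorted[lo] + (xs_sorted[hi] - xs_sorted[lo]) * (pos - lo)


def freedman_diaconis_bins(values: List[float]) -> int:
    """Bin count from the Freedman-Diaconis rule: width = 2 * IQR / n^(1/3)."""
    xs = sorted(values)
    n = len(xs)
    iqr = quantile(xs, 0.75) - quantile(xs, 0.25)
    span = xs[-1] - xs[0]
    if iqr == 0 or span == 0:
        return 1  # Degenerate data: fall back to a single bin.
    width = 2 * iqr / n ** (1 / 3)
    return max(1, math.ceil(span / width))


def histogram(values: List[float], bins: int) -> List[int]:
    """Counts per equal-width bin between min and max (max lands in the last bin)."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins or 1.0
    counts = [0] * bins
    for v in values:
        counts[min(int((v - lo) / width), bins - 1)] += 1
    return counts


# Hypothetical frame durations (ms).
frames = [1.9, 2.0, 2.1, 2.2, 2.0, 6.5, 7.0, 2.1, 1.8, 12.0]
bins = freedman_diaconis_bins(frames)
print(bins, histogram(frames, bins))
```

For comparison, NumPy exposes the same rule as `np.histogram_bin_edges(data, bins="fd")`.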

I showed how frame metrics & percentiles are computed in Macrobenchmark, then went on to explain how to draw a distribution, compute confidence intervals, and do bootstrapping. That was a little ambitious, but tons of fun.

#AndroidFun
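
The talk's code isn't included here, so this is only a generic sketch with hypothetical names and data, not Macrobenchmark's API: a percentile bootstrap resamples the frames with replacement, recomputes P50 each time, and reads the confidence interval off the spread of those estimates.

```python
import random
from typing import List, Tuple


def percentile(values: List[float], p: float) -> float:
    """Percentile with linear interpolation between closest ranks (0 <= p <= 100)."""
    xs = sorted(values)
    rank = (len(xs) - 1) * p / 100
    lo = int(rank)
    hi = min(lo + 1, len(xs) - 1)
    return xs[lo] + (xs[hi] - xs[lo]) * (rank - lo)


def bootstrap_ci(
    frames_ms: List[float],
    p: float = 50,
    resamples: int = 2000,
    confidence: float = 0.95,
    seed: int = 42,
) -> Tuple[float, float]:
    """Percentile-bootstrap confidence interval for the p-th percentile.

    Resample the frames with replacement, recompute the percentile each time,
    and keep the middle `confidence` share of those estimates.
    """
    rng = random.Random(seed)
    n = len(frames_ms)
    estimates = sorted(
        percentile(rng.choices(frames_ms, k=n), p) for _ in range(resamples)
    )
    alpha = (1 - confidence) / 2
    return percentile(estimates, 100 * alpha), percentile(estimates, 100 * (1 - alpha))


# Hypothetical frame durations (ms); real ones would come from a trace.
data_rng = random.Random(0)
frames = [data_rng.gauss(5.0, 1.0) for _ in range(500)]
low, high = bootstrap_ci(frames, p=50)
print(f"P50 = {percentile(frames, 50):.2f} ms, 95% CI [{low:.2f}, {high:.2f}] ms")
```

The interval shows how far P50 could move from sampling noise alone. It treats frames as independent draws, which consecutive frames in a real trace often aren't.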

I'll be talking about Benchmarking with confidence... wish me luck, live demos incoming!

www.meetup.com/new-york-and...

#AndroidDev

Tuesday, October 21 (in 2 weeks!) at 7 pm, the Paris Android User Group is hosting a meetup at Radio France. Apparently the venue is magnificent!

I'll be there in the second half to talk about trustworthy benchmarks.

www.meetup.com/android-pari...

#AndroidDev #paris

- Last week: Block offsite in California with 8,000 employees... Sleeping from midnight (party!) to 4 am (jet lag!) every night, plus 2 eng talks to give

- then Oktoberfest in Munich this weekend

- then Droidcon Berlin next week, with 5 min in the keynote + a 40 min talk

#AndroidDev

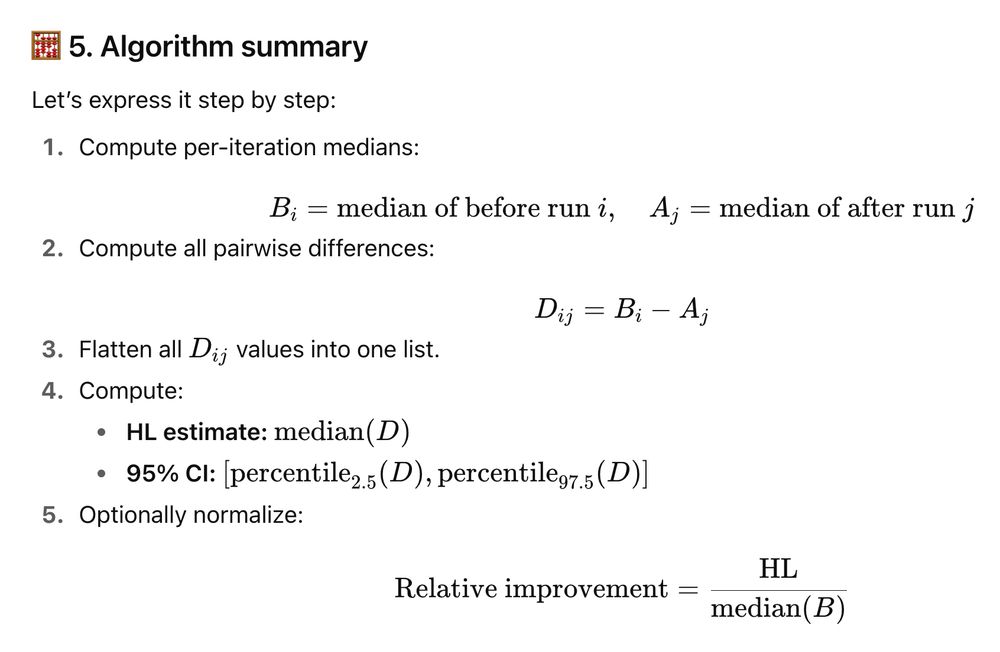

Let's say we run the benchmark again with no change and get a slightly different aggregate, e.g. "before" P50 -5 instead of -4.2. Suddenly the improvement vs "after" looks less good!

What now? Which one do you pick?

This is why we need stats.
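
One way to avoid picking a run is to ask whether the difference survives resampling. A minimal sketch with hypothetical data and names, using a percentile bootstrap on the difference of medians (one of several reasonable approaches): if the 95% interval for median(after) - median(before) excludes 0, the change is unlikely to be run-to-run noise alone.

```python
import random
from typing import List, Tuple


def median(values: List[float]) -> float:
    """Median of a non-empty list (average of the two middle values if even)."""
    xs = sorted(values)
    mid = len(xs) // 2
    return xs[mid] if len(xs) % 2 else (xs[mid - 1] + xs[mid]) / 2


def bootstrap_diff_ci(
    before: List[float],
    after: List[float],
    resamples: int = 2000,
    confidence: float = 0.95,
    seed: int = 7,
) -> Tuple[float, float]:
    """Percentile-bootstrap CI for median(after) - median(before).

    Each resample draws `before` and `after` independently with replacement,
    so noise from both runs ends up in the interval.
    """
    rng = random.Random(seed)
    diffs = sorted(
        median(rng.choices(after, k=len(after)))
        - median(rng.choices(before, k=len(before)))
        for _ in range(resamples)
    )
    lo_idx = int(resamples * (1 - confidence) / 2)
    hi_idx = int(resamples * (1 + confidence) / 2) - 1
    return diffs[lo_idx], diffs[hi_idx]


# Hypothetical frame durations (ms) from two benchmark runs.
data_rng = random.Random(1)
before = [data_rng.gauss(5.0, 1.0) for _ in range(300)]
after = [data_rng.gauss(4.0, 1.0) for _ in range(300)]

low, high = bootstrap_diff_ci(before, after)
if high < 0:
    print(f"after looks faster: P50 diff in [{low:.2f}, {high:.2f}] ms")
elif low > 0:
    print(f"after looks slower: P50 diff in [{low:.2f}, {high:.2f}] ms")
else:
    print("can't tell: the interval includes 0")
```

The sketch treats frames as independent; when frames within a run are strongly correlated, comparing per-run P50s across several runs is a common alternative.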

AOSP should document APIs that make binder calls + have custom trace sections for all binder calls.
