Want to learn more?

📜Paper arxiv.org/abs/2410.24153

💾Code github.com/bhoov/distri...

👨‍🏫NeurIPS Page: neurips.cc/virtual/2024...

🎥SlidesLive (use Chrome) recorder-v3.slideslive.com#/share?share...

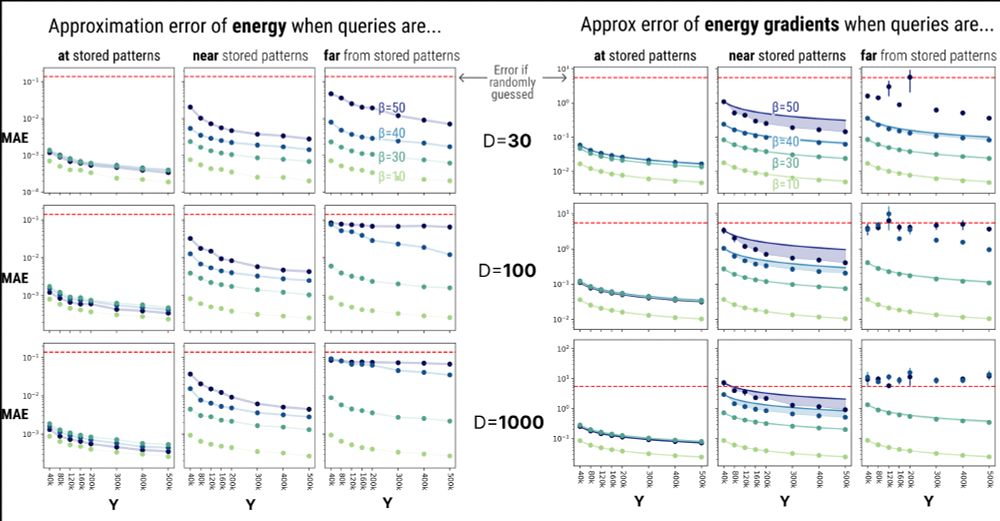

1️⃣Far from memories

2️⃣“Spiky” (i.e., low temperature/high beta)

We need more random features Y to reconstruct highly occluded/correlated data!
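One way to see why both cases are hard: a random-feature estimate of a kernel has an absolute error of roughly 1/sqrt(Y) no matter how small the true kernel value is. Far from a memory, or at high beta, the true value is tiny, so the relative error grows. The sketch below is only an illustration under assumptions that are not from the thread: an RBF kernel exp(-beta/2 · ||x − y||²), random Fourier features, and made-up points `near` and `far`.

```python
import numpy as np

def rff(x, W, b):
    """Random Fourier features: phi(x) = sqrt(2/Y) * cos(W x + b)."""
    return np.sqrt(2.0 / W.shape[0]) * np.cos(W @ x + b)

def mean_relative_error(x, y, beta, Y, trials=20, seed=0):
    """Average |approx - exact| / exact over fresh draws of the random features."""
    rng = np.random.default_rng(seed)
    exact = np.exp(-0.5 * beta * np.sum((x - y) ** 2))
    errs = []
    for _ in range(trials):
        # Frequencies drawn from N(0, beta * I) match the kernel exp(-beta/2 * ||x - y||^2).
        W = rng.normal(scale=np.sqrt(beta), size=(Y, x.size))
        b = rng.uniform(0.0, 2.0 * np.pi, size=Y)
        approx = rff(x, W, b) @ rff(y, W, b)
        errs.append(abs(approx - exact) / exact)
    return float(np.mean(errs))

D, beta = 8, 1.0                          # illustrative values
x = 0.5 * np.ones(D)                      # stand-in for a stored memory
direction = np.ones(D) / np.sqrt(D)       # unit vector
near = x + 0.1 * direction                # query close to the memory
far = x + 3.0 * direction                 # query far from the memory

for Y in (200, 2000, 20000):
    print(Y, mean_relative_error(x, near, beta, Y), mean_relative_error(x, far, beta, Y))
```

Raising `beta` at a fixed distance shrinks the exact kernel value in the same way, which is the "spiky" case.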

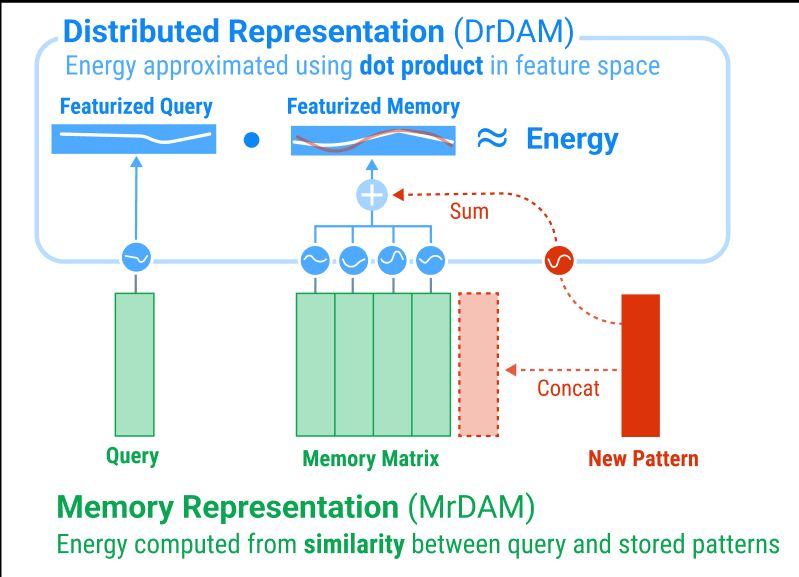

1️⃣A similarity func between stored patterns & noisy input

2️⃣A rapidly growing separation func (e.g., exponential)

Together, they reveal kernels (e.g., RBF) that can be approximated via the kernel trick & random features (Rahimi & Recht, 2007).
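A minimal sketch of the random-features idea for the RBF case, assuming the kernel is written as k(x, y) = exp(-beta/2 · ||x − y||²). The names `rff_features`, `W`, `b` and all sizes are illustrative and are not taken from the paper's code.

```python
import numpy as np

def rff_features(x, W, b):
    """Map x (shape (D,)) to Y random Fourier features, phi(x) = sqrt(2/Y) * cos(W x + b)."""
    Y = W.shape[0]
    return np.sqrt(2.0 / Y) * np.cos(W @ x + b)

rng = np.random.default_rng(0)
D, Y, beta = 16, 20000, 0.5                        # illustrative sizes

# Frequencies from N(0, beta * I) and phases from U[0, 2*pi] give
# E[phi(x) . phi(y)] = exp(-beta/2 * ||x - y||^2).
W = rng.normal(scale=np.sqrt(beta), size=(Y, D))
b = rng.uniform(0.0, 2.0 * np.pi, size=Y)

x = 0.3 * rng.normal(size=D)                       # "stored pattern"
y = x + 0.1 * rng.normal(size=D)                   # "noisy input"

exact = np.exp(-0.5 * beta * np.sum((x - y) ** 2))
approx = rff_features(x, W, b) @ rff_features(y, W, b)
print(exact, approx)
```

The inner product of two feature vectors stands in for the kernel, so the kernel never has to be evaluated on the pair (x, y) directly.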

In traditional Memory representations of DenseAMs (MrDAM), one row in the weight matrix stores one pattern. In our new Distributed representation (DrDAM), patterns are entangled via superposition, “distributed” across all dims of a featurized memory vector.
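The contrast can be sketched as follows, assuming a log-sum-exp energy over an RBF kernel and random Fourier features. The function names, the sizes, and the `eps` clamp are illustrative choices, not the paper's implementation.

```python
import numpy as np

def phi(X, W, b):
    """Random Fourier features for each row of X: (n, D) -> (n, Y)."""
    Y = W.shape[0]
    return np.sqrt(2.0 / Y) * np.cos(X @ W.T + b)

def mrdam_energy(x, memories, beta):
    """MrDAM-style energy: needs every stored pattern (one row each)."""
    sq_dists = np.sum((memories - x) ** 2, axis=1)
    return -np.log(np.sum(np.exp(-0.5 * beta * sq_dists))) / beta

def drdam_energy(x, T, W, b, beta, eps=1e-6):
    """DrDAM-style energy: needs only the featurized memory vector T."""
    s = phi(x[None, :], W, b)[0] @ T       # approximates the sum of kernel values
    return -np.log(max(s, eps)) / beta     # eps clamp (an assumption) keeps the log finite

rng = np.random.default_rng(0)
K, D, Y, beta = 5, 8, 20000, 1.0           # illustrative sizes
memories = rng.normal(size=(K, D))         # MrDAM storage: K rows of dimension D

W = rng.normal(scale=np.sqrt(beta), size=(Y, D))
b = rng.uniform(0.0, 2.0 * np.pi, size=Y)

# DrDAM storage: all patterns superposed into one vector of size Y.
T = phi(memories, W, b).sum(axis=0)

query = memories[0] + 0.05 * rng.normal(size=D)
e_mr = mrdam_energy(query, memories, beta)
e_dr = drdam_energy(query, T, W, b, beta)
print(e_mr, e_dr)
```

In this sketch `T` has length Y regardless of K, so adding a pattern is one vector addition, and no single entry of `T` belongs to a single pattern.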
