Thoughts & opinions are my own and do not necessarily represent my employer.

Is discrimination the right way to frame the issues of language technology? Or should we be answering deeper-rooted questions? And how does technology fit into systems of oppression?

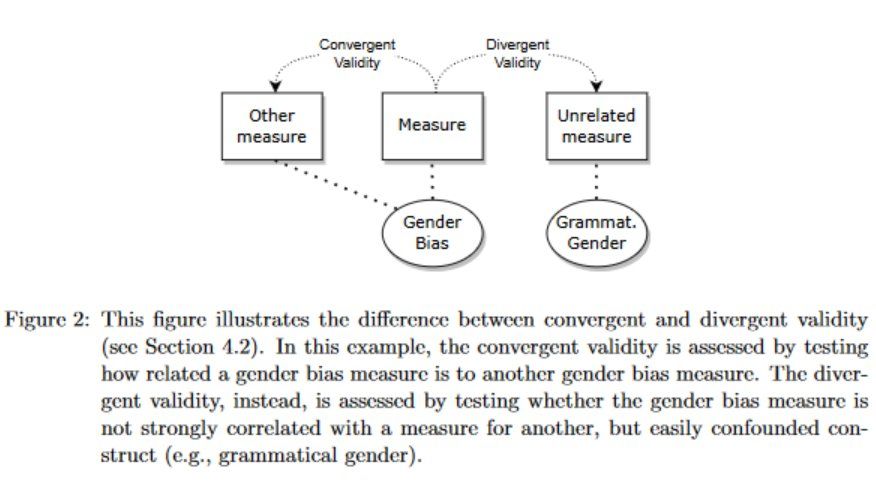

We also presented our own work on strategies for testing the validity and reliability of LM bias measures:

www.jair.org/index.php/ja...

Can we create equitable ML technologies? Can statistical models faithfully express human language? Or are tokenizers "tokenizing" people—creating a Frankenstein monster of lived experiences?

@hellinanigatu.bsky.social introduced us to the Capabilities Approach and how it can help us better understand the social impact of language technologies—with case studies of failing tech in the Majority World.

Flor Plaza discussed the importance of studying gendered emotional stereotypes in LLMs, and how much bias evaluation work benefits from collaborating with philosophers.

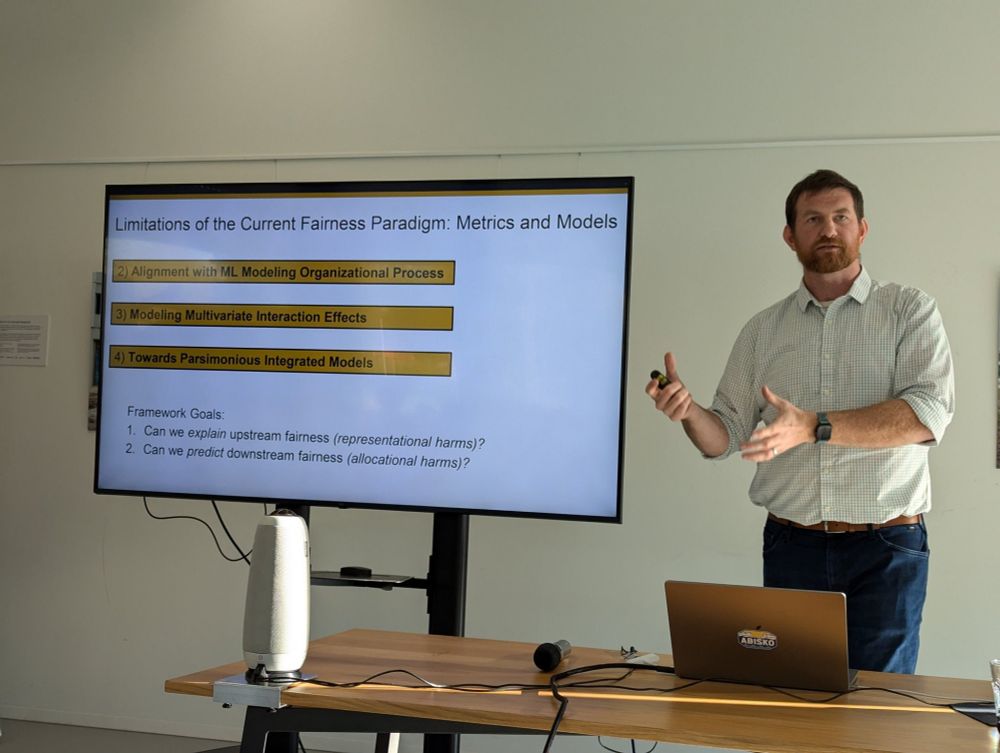

Fairness is often treated as a metric, but using integrated models instead can help explain upstream bias, predict downstream fairness, and capture intersectional bias.

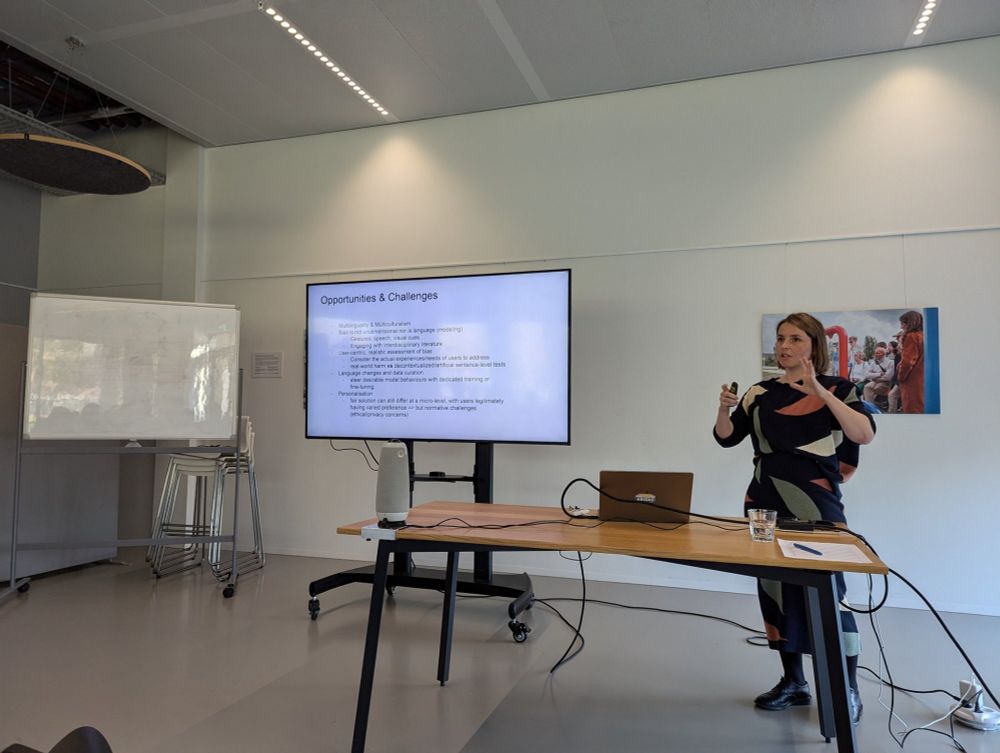

Eva Vanmassenhove: how has research on gender bias in MT developed over the years? Important issues, like non-binary gender bias, now fortunately get more attention. Yet, fundamental problems (that initially seemed trivial) remain unsolved.

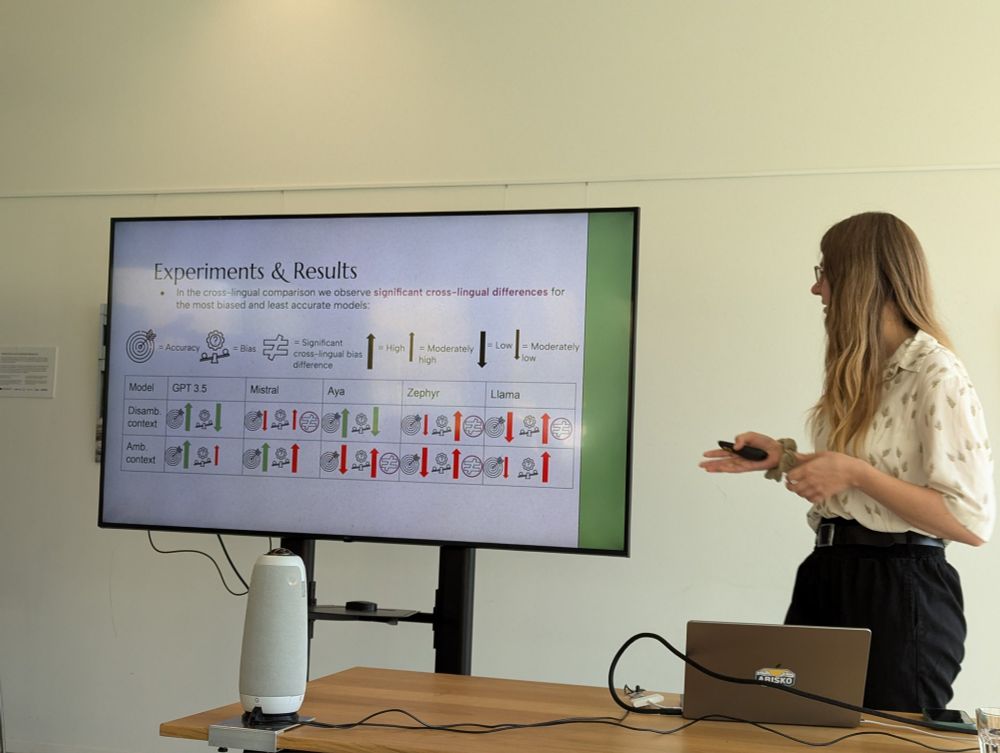

Vera Neplenbroek presented a multilingual extension of the BBQ bias benchmark to study bias across English, Dutch, Spanish, and Turkish.

"Multilingual LLMs are not necessarily multicultural!"

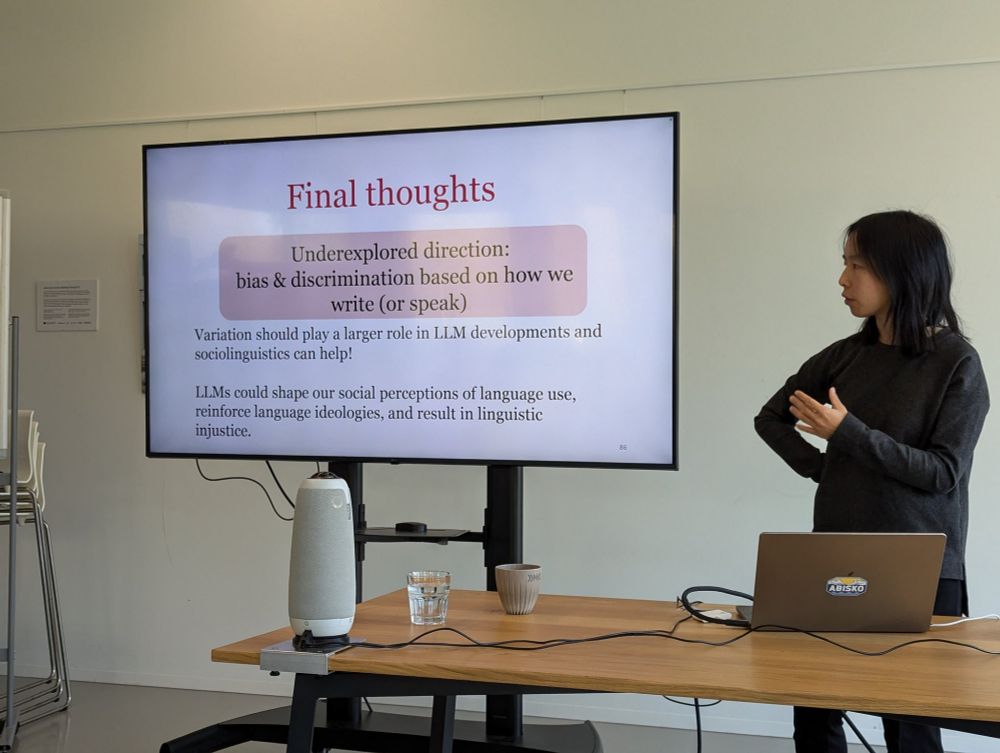

Non-standard language is often treated as noisy or incorrect data, but this ignores how language is actually used. Variation should play a larger role in LLM development, and sociolinguistics can help!

We're looking back at two inspiring days of talks, posters, and discussions—thanks to everyone who participated!

wai-amsterdam.github.io