The new agreement removes mention of developing tools “for authenticating content and tracking its provenance” as well as “labeling synthetic content,” signaling less interest in tracking misinformation and deep fakes.

www.wired.com/story/ai-saf...

However, it is a useful paper to cite, with empirical information for people using LMs for text analysis.

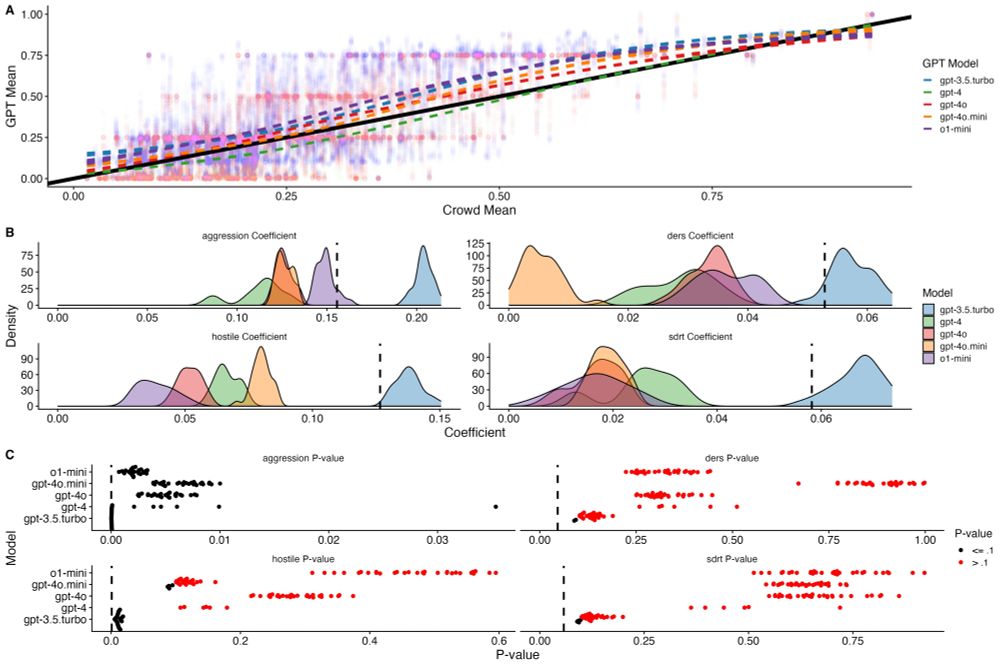

Given this, there are still research avenues for closed-source LMs where limited reproducibility is tolerable.

We show:

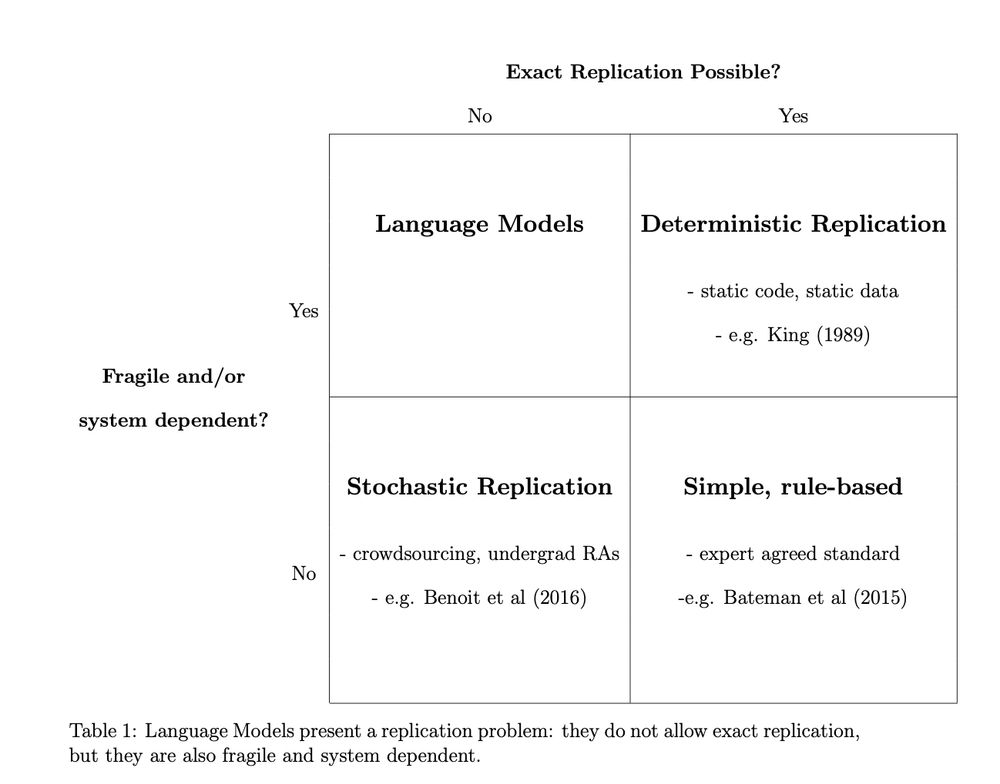

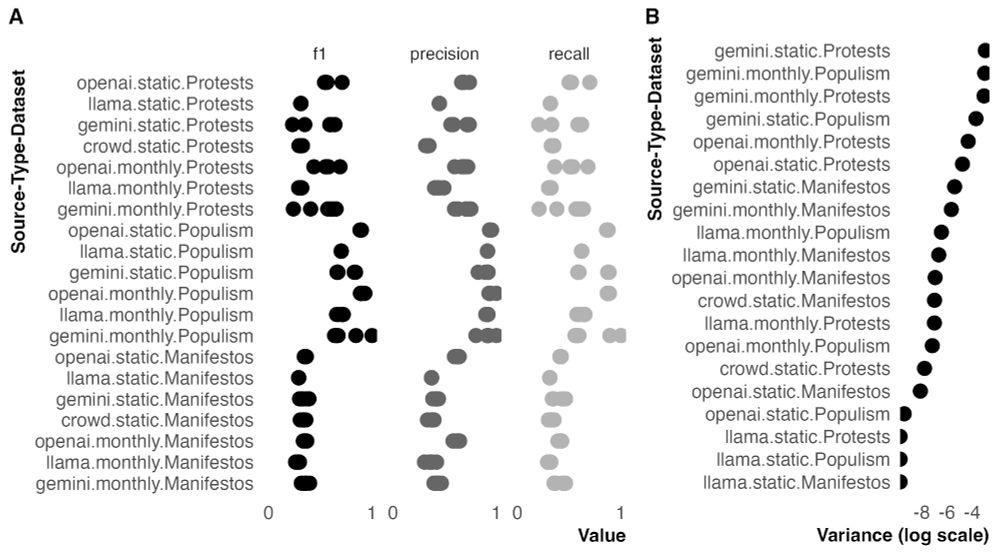

1. current applications of LMs in political science research *don't* meet basic standards of reproducibility...
