Robotics. Reinforcement learning. AI.

& collaborators: arxiv.org/abs/2502.04327

Seohong Park

uses a distillation (reflow-like) scheme to train a flow-matching actor, and it works super well!

Check it out: seohong.me/projects/fql/

github.com/oumi-ai/oumi

There are great tokenizers for text and images, but existing action tokenizers don’t work well for dexterous, high-frequency control. We’re excited to release (and open-source) FAST, an efficient tokenizer for robot actions.

Lots more on the project website: yanqval.github.io/PAE/

@CharlesXu0124, we present RLDG, which trains VLAs with RL data🧵👇

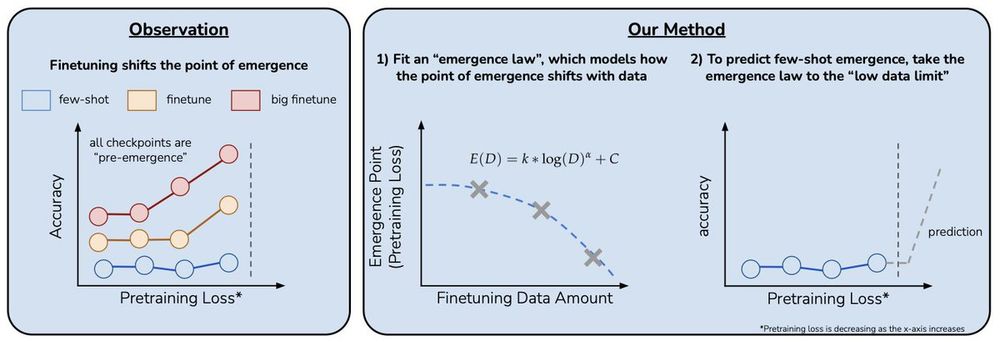

We propose a method for doing exactly this in our paper “Predicting Emergent Capabilities by Finetuning”🧵