Prev intern at NVIDIA, Sony

(9/N)

(8/N)

(6/N)

(5/N)

(4/N)

(3/N)

(2/N)

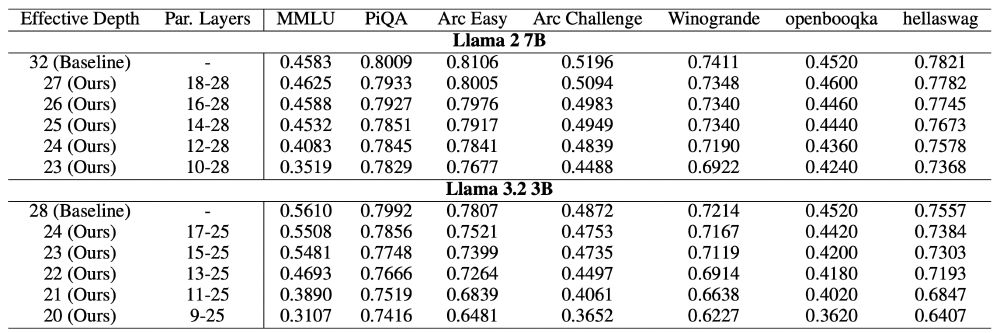

Together with @danielepal.bsky.social, @matpagliardini.bsky.social, M. Jaggi and @francois.fleuret.org, we show that LLMs have a smaller effective depth, which can be exploited to increase inference speed in multi-GPU settings!

arxiv.org/abs/2502.02790

(1/N)
