Co-Founder @synthesiaIO

Co-Founder @SpAItialAI

https://niessnerlab.org/publications.html

PercHead reconstructs realistic 3D heads from a single image and enables disentangled 3D editing via geometric controls and style inputs from images or text.

Still hard to fathom this privilege as a researcher — getting to travel to such amazing places and be part of this brilliant community - Thanks!

Internal reviews, ideas brainstorming, paper reading, and much more! Of course also many social activities -- the highlight being our kayaking trip - lots of fun :)

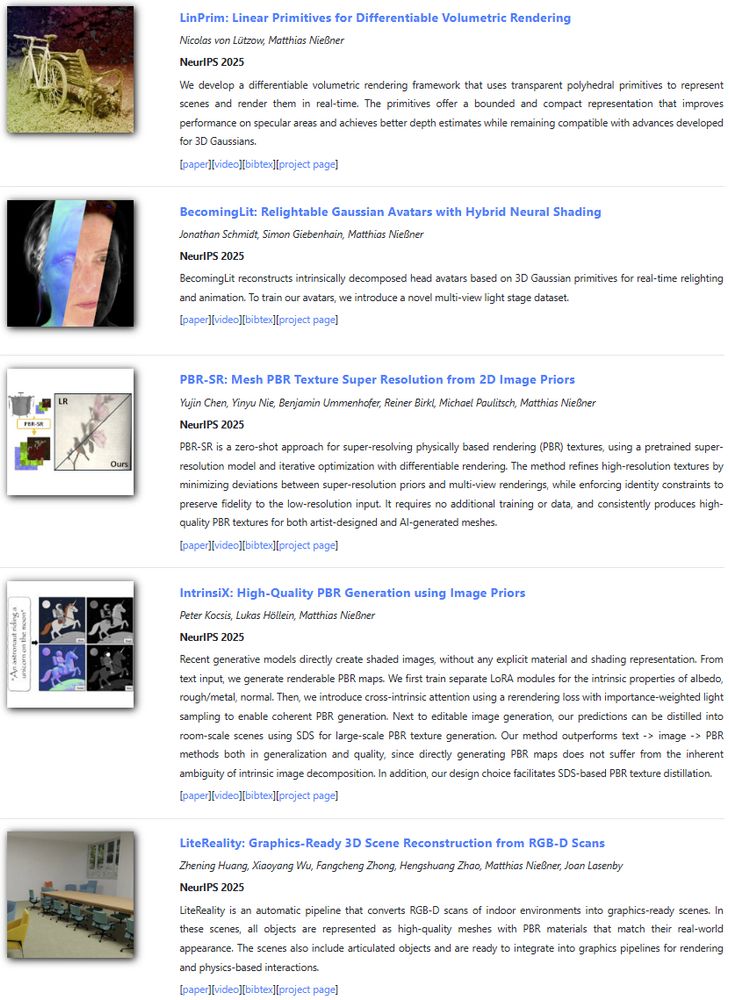

Awesome works about Gaussian Splatting Primitives, Lighting Estimation, Texturing, and much more GenAI :)

Great work by Peter Kocsis, Yujin Chen, Zhening Huang, Jiapeng Tang, Nicolas von Lützow, Jonathan Schmidt 🔥🔥🔥

𝐖𝐨𝐫𝐥𝐝𝐄𝐱𝐩𝐥𝐨𝐫𝐞𝐫 (#SIGGRAPHAsia25) creates fully-navigable scenes via autoregressive video generation.

Text input -> 3DGS scene output & interactive rendering!

🌍http://mschneider456.github.io/world-explorer/

📽️https://youtu.be/N6NJsNyiv6I

We reconstruct ultra-high fidelity photorealistic 3D avatars capable of generating realistic and high-quality animations including freckles and other fine facial details.

shivangi-aneja.github.io/projects/sca...

-> converts RGB-D scans into compact, realistic, and interactive 3D scenes — featuring high-quality meshes, PBR materials, and articulated objects.

📷https://youtu.be/ecK9m3LXg2c

🌍https://litereality.github.io

Exciting topics: lots of generative AI using transformers, diffusion, 3DGS, etc. focusing on image synthesis, geometry generation, avatars, and much more - check it out!

So proud of everyone involved - let's go🚀🚀🚀

niessnerlab.org/publications...

We have several fully-funded 𝐏𝐡𝐃 & 𝐏𝐨𝐬𝐭𝐃𝐨𝐜 𝐨𝐩𝐞𝐧𝐢𝐧𝐠𝐬 in our Visual Computing & AI Lab in Munich!

Apply here: application.vc.in.tum.de

Topics have a strong focus on Generative AI, 3DGS, NeRFs, Diffusion, LLMs, etc.

Now over 11k / year with an expectation to grow even further. This comes with a lot of implications for how to handle the reviews, presentations, etc. Kudos to the organizers for all the effort that went into it.

Looking forward to catching up with everyone -- feel free to reach out if you want to chat!

Everything is a Honky Tonk :)

Great work by Jonathan Schmidt and Simon Giebenhain.

We propose a hybrid neural shading scheme for creating intrinsically decomposed 3DGS head avatars that allow real-time relighting and animation.

🌍https://lnkd.in/evNt8bV2

📷https://lnkd.in/ekB5QeEK

Looking for a robust and accurate face tracker?

We handle challenging in-the-wild settings, such as extreme lighting conditions, fast movements, and occlusions.

👨‍💻https://lnkd.in/e3dX23WV

🌍https://lnkd.in/eQ3Zpn3J

Pixel3DMM can be run on videos and single images.

We propose a new optimization to up-sample textures of 3D assets (albedo, roughness, metallic, and normal maps) by leveraging 2D super-resolution models.

📝http://arxiv.org/abs/2506.02846

📽️https://youtu.be/eaM5S3Mt1RM

We reconstruct hair strands from colorless 3D scans by extracting orientation cues from two sources: local characteristic lines found directly on the mesh surface geometry, and shaded renderings processed with a neural 2D line detector.

-> highly accurate face reconstruction by training powerful ViTs via surface normal & UV-coordinate estimation.

These cues from our 2D foundation model constrain the 3DMM parameters, achieving great accuracy.

We use octahedra or tetrahedra as explicit volumetric building blocks for gradient-based novel view synthesis - an alternative to 3D Gaussians that offers discrete, bounded geometry.

🌍 nicolasvonluetzow.github.io/LinPrim/

Given a single input image, we predict accurate 3D head geometry, pose, and expression.

🌍 nlml.github.io/sheap/

🎥 youtu.be/vhXsZJWCBMA/

Great work by Liam Schoneveld, Davide Davoli, Jiapeng Tang

Two tasks with hidden test sets:

- Dynamic Novel View Synthesis on Heads

- Monocular FLAME-driven Head Avatar Reconstruction

Our goal is to make research on 3D head avatars more comparable and ultimately increase the realism of digital humans.

We animate 3D humanoid meshes using video diffusion priors given a text prompt.

🎥https://youtu.be/_YL1J_V3smI

🌍https://marcb.pro/atu

Realistic motion generation for 3D characters - without motion capture! 🚀

Great work by Marc Benedí, @adai.bsky.social

Users can interactively design 3D models just from a sketch-based interface - check it out!

We break down the design process into addition operations, handled by an autoregressive generator, and deletion operations, handled by a classifier.

➡️We're looking for Diffusion/3D/ML/Infra engineers and scientists in Munich & London. Get in touch for details!

#3D #GenAI #spatialintelligence #foundationmodels

Avat3r creates high-quality 3D head avatars from just a few input images in a single forward pass with a new dynamic 3DGS reconstruction model.

youtu.be/P3zNVx15gYs

tobias-kirschstein.github.io/avat3r

Great work by Tobias Kirschstein with J. Romero, A. Sevastopolsky, S. Saito.
