Max 4 or 9 pages, due 22 Aug, NeurIPS submissions welcome

We welcome any work that furthers our ability to use the internals of a model to better understand it

Details: mechinterpworkshop.com

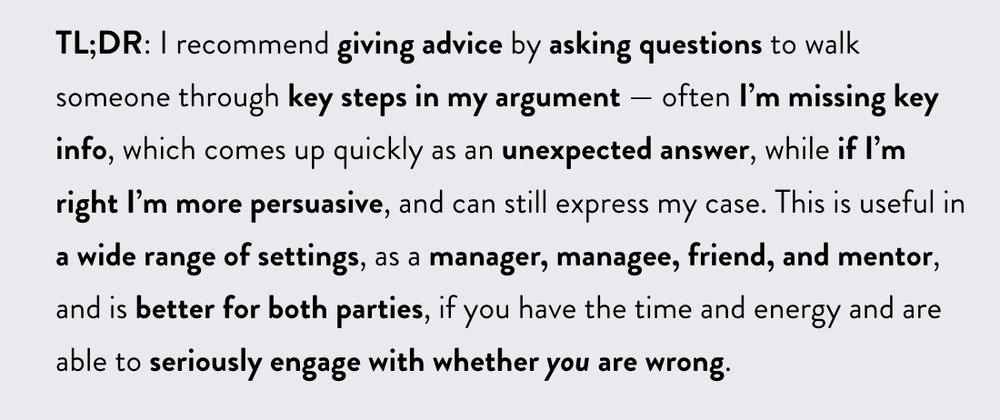

Maybe! Asking questions rather than lecturing people is hardly a novel insight

But people often assume the Qs must be neutral and open-ended. It can be very useful to be opinionated! But you need error correction mechanisms for when you're wrong.

Done right, I think it's more collaborative - combining my perspective and their context to find the best advice. Better for both parties!

The post: www.neelnanda.io/blog/51-soc...

More thoughts in 🧵

My favourite approach is Socratic persuasion: guiding them through my case via questions. If I'm wrong there's soon a surprising answer!

I can be opinionated *and* truth-seeking

This mystical notion separates new and experienced researchers. It's real and important. But what is it, and how do you learn it?

I break down taste as the mix of intuition/models behind good open-ended decisions and share tricks to speed up learning

x.com/NeelNanda5/...

blog.sentinel-team.org/p/global-ri...

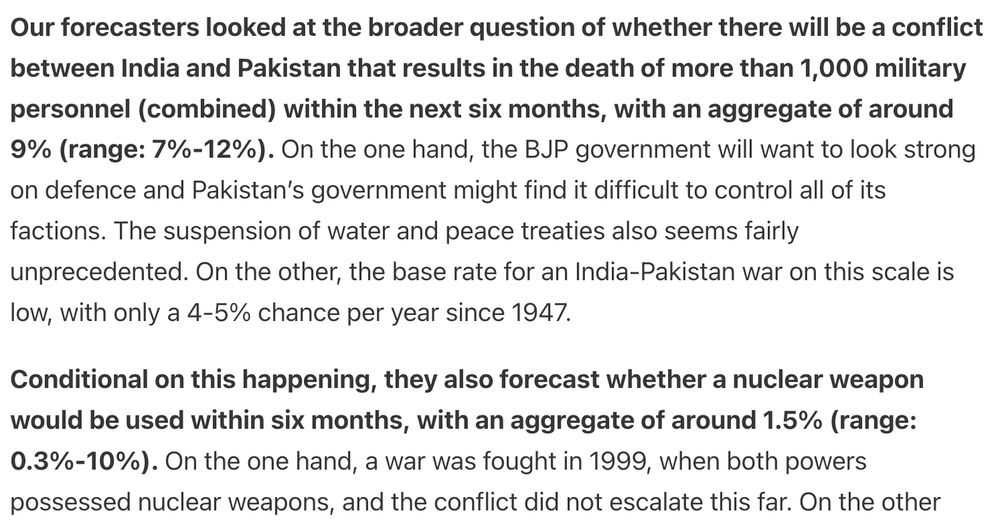

Expert forecasters filter for the events that actually matter (not just noise), and forecast how likely they are to affect e.g. war, pandemics, frontier AI, etc.

Highly recommended!

x.com/BartBussman...

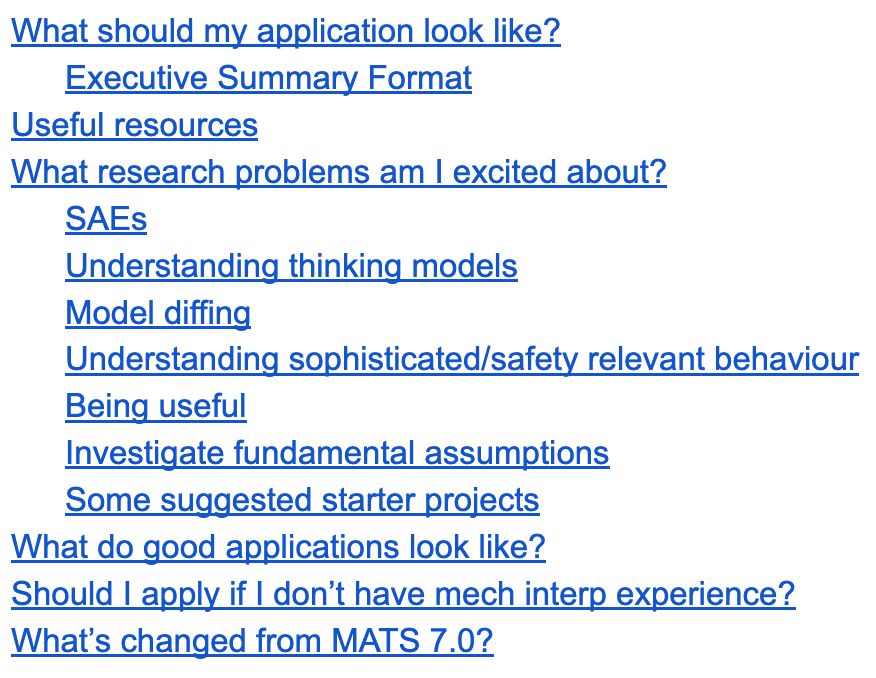

More broadly, the AGI Safety team is keen to get applications from both strong ML engineers and strong ML researchers.

Please apply!

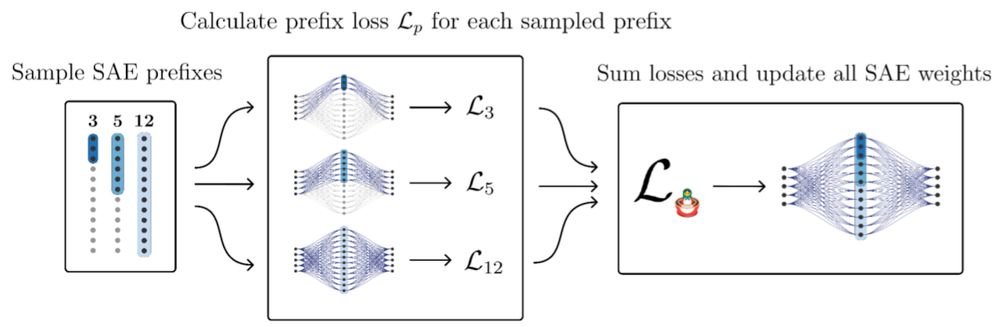

But not all hope is lost - our forthcoming paper on Matryoshka SAEs seems to substantially mitigate these issues!

x.com/NeelNanda5/...
