🏫 Assistant Professor at Illinois Tech

🛜 https://nathaniel-hudson.github.io/

chemrxiv.org/engage/api-g...

@danielgrzenda.bsky.social @nathaniel-hudson.bsky.social

https://bit.ly/46pyymr

Use the link below to check out the paper on FGCS:

www.sciencedirect.com/science/arti...

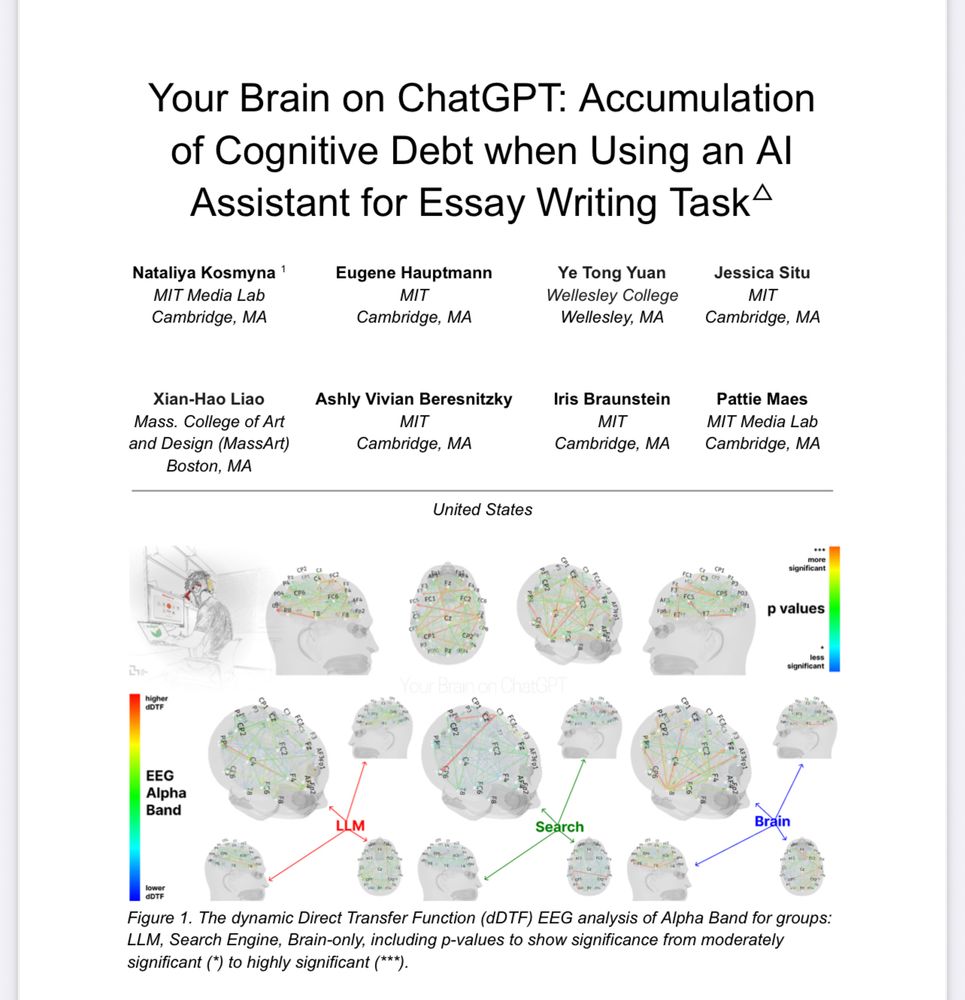

Key takeaway: the product doesn’t suffer, but the process does. And when it comes to essays, the process *is* how they learn.

arxiv.org/pdf/2506.088...

Language models (LMs) often "memorize" data, leading to privacy risks. This paper explores ways to reduce that!

Paper: arxiv.org/pdf/2410.02159

Code: github.com/msakarvadia/...

Blog: mansisak.com/memorization/
