When you can't keep multiple copies (or mmaps) of a dataset in memory, whether because of too many concurrent streams, low resource availability, or a slow CPU, dispatching is here to help.
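
A rough sketch of the idea in plain Python, assuming "dispatching" means that a single process reads the dataset, builds one global batch per step, and hands each device its own slice. The function name `dispatch_batches` and its parameters are hypothetical, and a real setup would send the slices over a communication backend rather than return them in a list.

```python
def dispatch_batches(dataset, num_processes, per_device_batch_size):
    """Yield one list of slices per step; the slice at index r goes to rank r.

    Only the caller (think: the main process) ever touches `dataset`,
    so no other process needs its own copy in memory.
    """
    global_batch_size = num_processes * per_device_batch_size
    for start in range(0, len(dataset), global_batch_size):
        global_batch = dataset[start:start + global_batch_size]
        if len(global_batch) < global_batch_size:
            break  # assumption: drop the incomplete final batch
        yield [
            global_batch[r * per_device_batch_size:(r + 1) * per_device_batch_size]
            for r in range(num_processes)
        ]


# Toy dataset of 10 items, 2 devices, 2 samples per device per step.
steps = list(dispatch_batches(list(range(10)), num_processes=2, per_device_batch_size=2))
for step, slices in enumerate(steps):
    print(step, slices)
```

The trade-off in this sketch is that the one process doing the reading becomes the bottleneck, in exchange for every other process holding nothing but its current slice.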

When performing distributed data parallelism, we split the data on every batch so that each device sees a different chunk. There are different methods for doing so. One example is sharding at the *dataset* level, shown here.

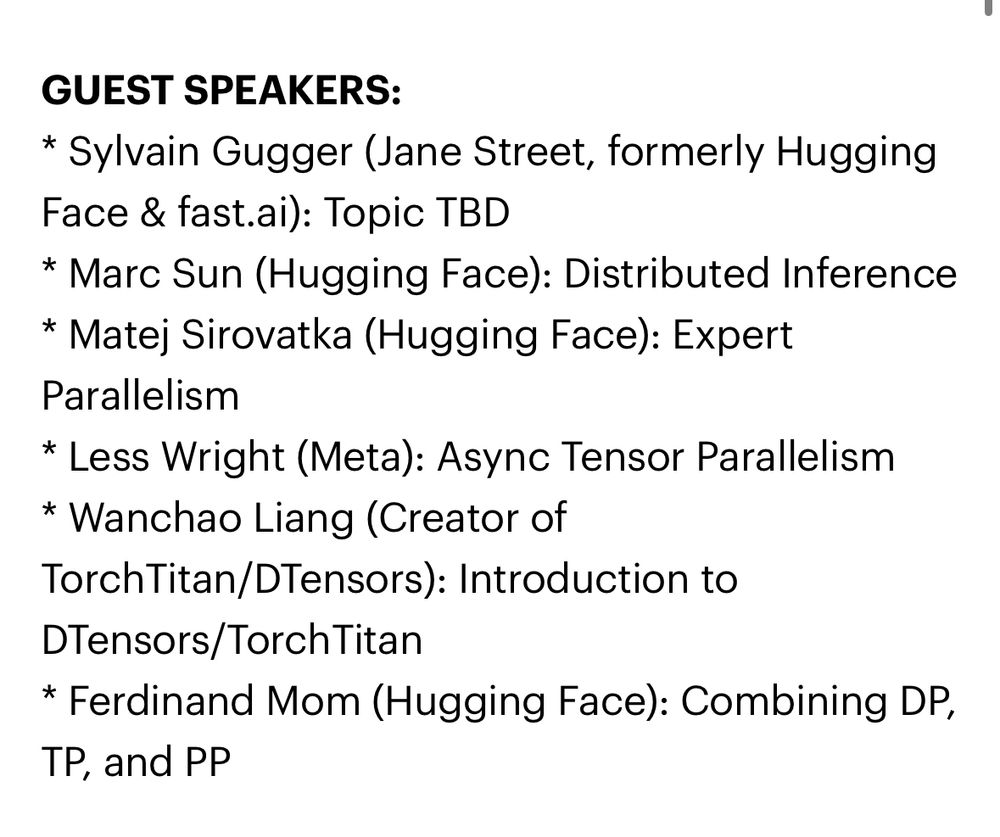
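
For a concrete feel, here is a minimal sketch of dataset-level sharding in plain Python. It assumes each process takes one contiguous slice of the dataset up front and then batches only its own slice; the layout in the image may differ (for example, strided instead of contiguous), and `shard_dataset` and `make_batches` are hypothetical helper names, not a library API.

```python
def shard_dataset(dataset, num_processes, process_index):
    """Return the contiguous slice of `dataset` owned by one process."""
    # Assumption: leftover samples that don't divide evenly are dropped.
    shard_size = len(dataset) // num_processes
    start = process_index * shard_size
    return dataset[start:start + shard_size]


def make_batches(shard, batch_size):
    """Split one process's shard into consecutive batches."""
    return [shard[i:i + batch_size] for i in range(0, len(shard), batch_size)]


dataset = list(range(16))
num_processes = 4

shards = [shard_dataset(dataset, num_processes, rank) for rank in range(num_processes)]
per_rank_batches = [make_batches(shard, batch_size=2) for shard in shards]

for rank, batches in enumerate(per_rank_batches):
    print(f"rank {rank}: {batches}")
```

Because the split happens once at the dataset level, no two ranks ever see the same sample, and on any given step each rank pulls its batch from a different region of the data.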

Back very briefly to mention I’m working on a new course, and there’s a star-studded set of guest speakers 🎉

From Scratch to Scale: Distributed Training (from the ground up).

From now until I’m done writing the course material, it’s 25% off :)

maven.com/walk-with-co...

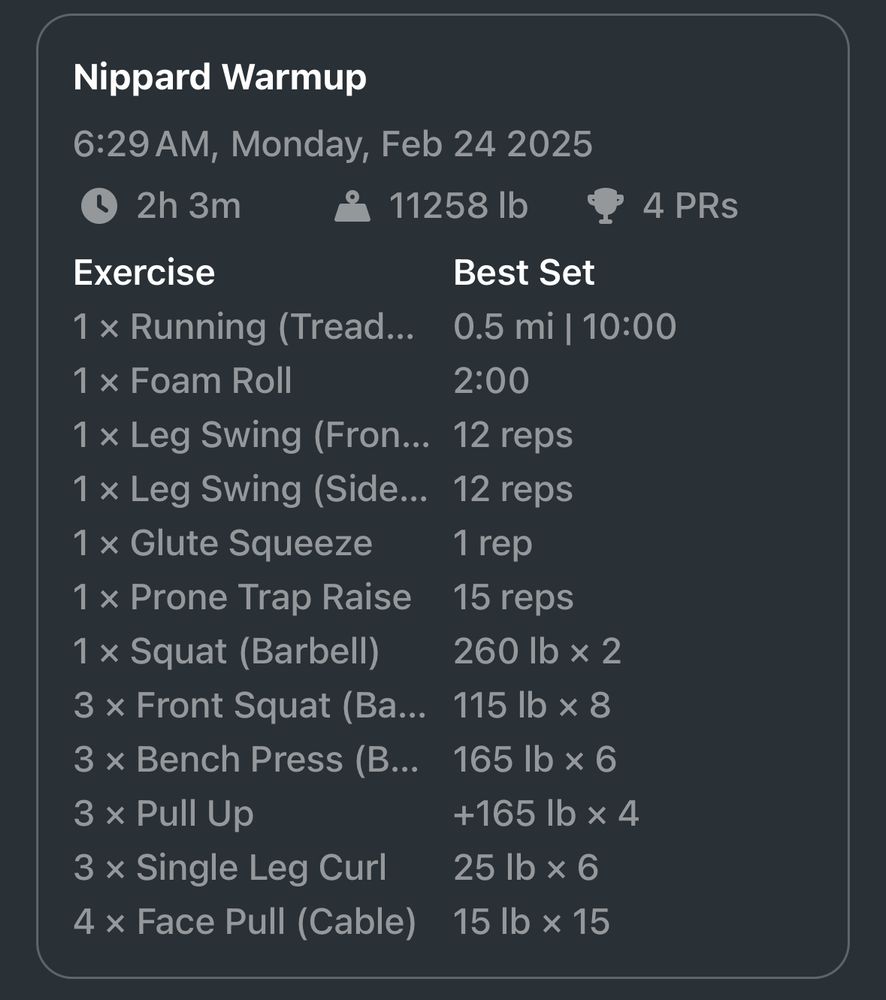

145kg squat

180kg deadlift

90kg bench

(Couldn’t find the original post that had the directions, just this saved screenshot)

Verizon:

I suppose I’m forcibly done with work for the day, too bad I had to go to the next town over to say that 😅

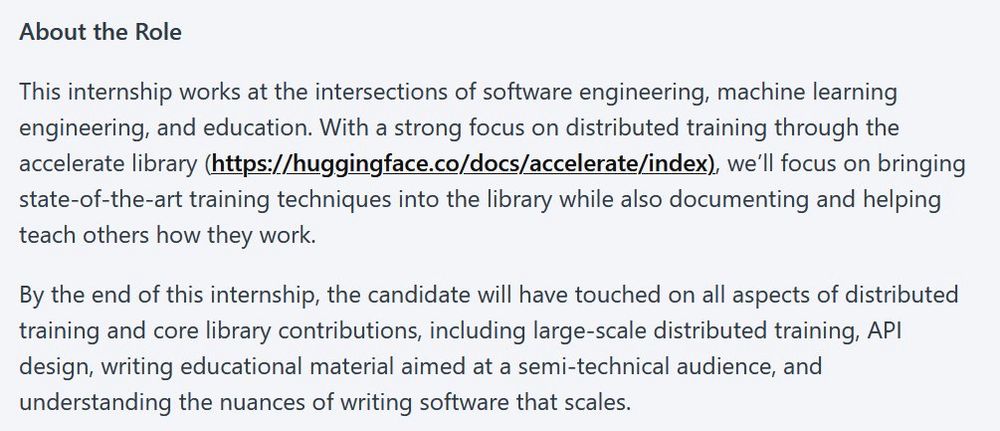

If you are:

* Driven

* Passionate about OSS

* Interested in distributed PyTorch training/FSDPv2/DeepSpeed

Come work with me!

Fully remote. Details on how to apply are in the comments.

Huh. Weird. I wonder what's u-

Oh. Cool ☺️

(Such as oooo, pretty flowers from yesterday)
