Homepage: https://mohit3011.github.io/

#ResponsibleAI #Human-CenteredAI #NLPforMentalHealth

Paper: shorturl.at/bldCb

Webpage: shorturl.at/bC1zn

Code: shorturl.at/H8xmp

Grateful for the efforts from my co-authors 🙌: Siddharth Sriraman, @verma22gaurav.bsky.social, Harneet Singh Khanuja, Jose Suarez Campayo, Zihang Li, Michael L. Birnbaum, Munmun De Choudhury

11/11

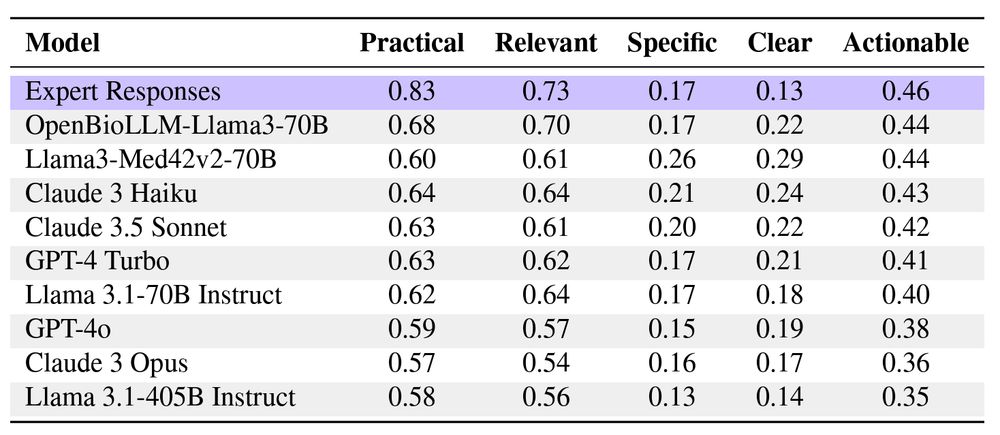

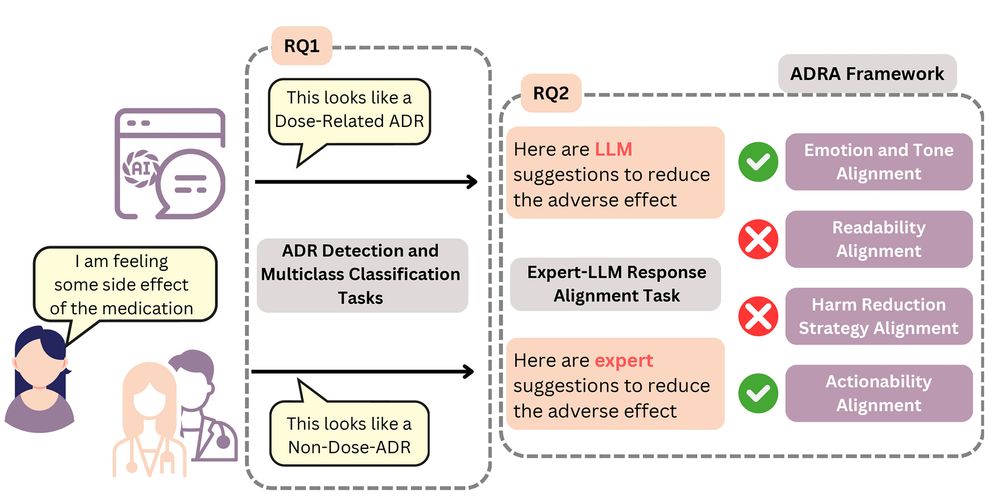

While LLMs provide less practical and relevant advice, their advice is clearer and more specific.

10/11

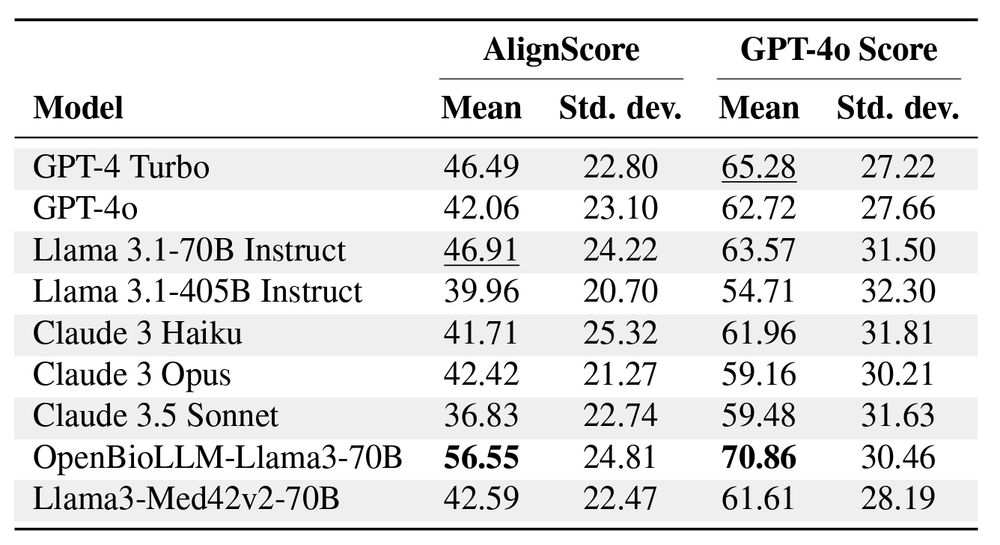

The best medical model aligned with experts ~71% of the time (GPT-4o score).

9/11

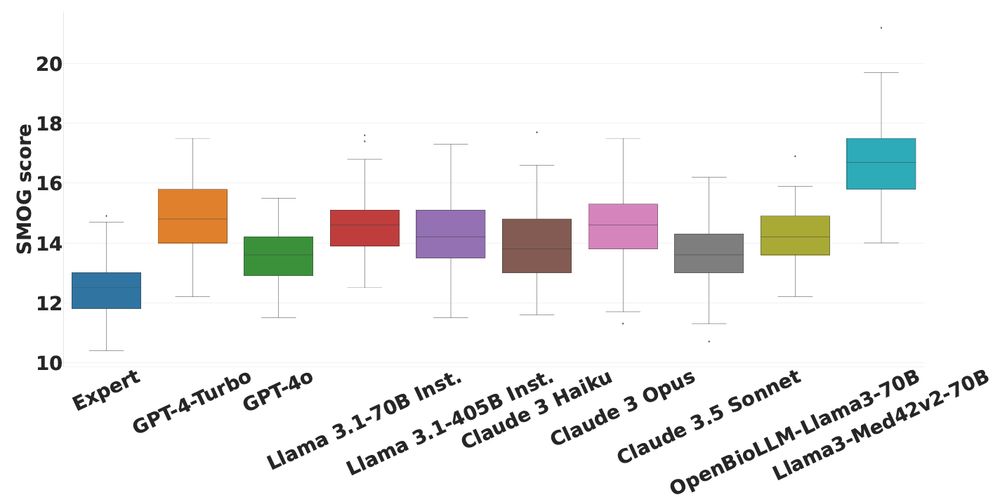

Finding #4: LLMs express similar emotions and tones, but their responses are significantly harder to read.

8/11

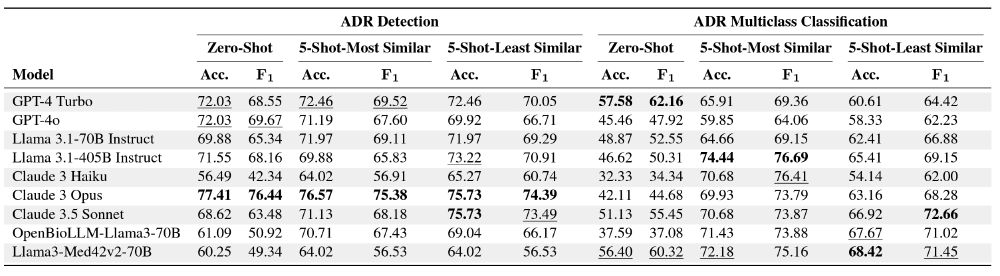

The type of examples had a more pronounced impact on the ADR multiclass classification task.

7/11

This highlights the models' lack of "lived experience."

6/11

5/11

4/11

Below are some nuanced findings 👇

3/11

2/11

It is certainly a good start, but I still feel we need more interdisciplinary reviewers (based on the reviews I have received). One issue is the requirement that reviewers have at least 3 *CL papers in the past 5 years, which many researchers might not have.

Something ACs could look into?
