This benchmark was just released. Read about it here: www.linkedin.com/posts/eliasb....

"AI development is currently overly focused on individual model capabilities, often ignoring broader emergent behavior, leading to a significant underestimation of the true capabilities and associated risks of agentic AI."

www.ibm.com/new/announce...

Enjoy!

Open source repo & benchmarks: github.com/ibm-granite/...

Link: github.com/ibm-granite/...

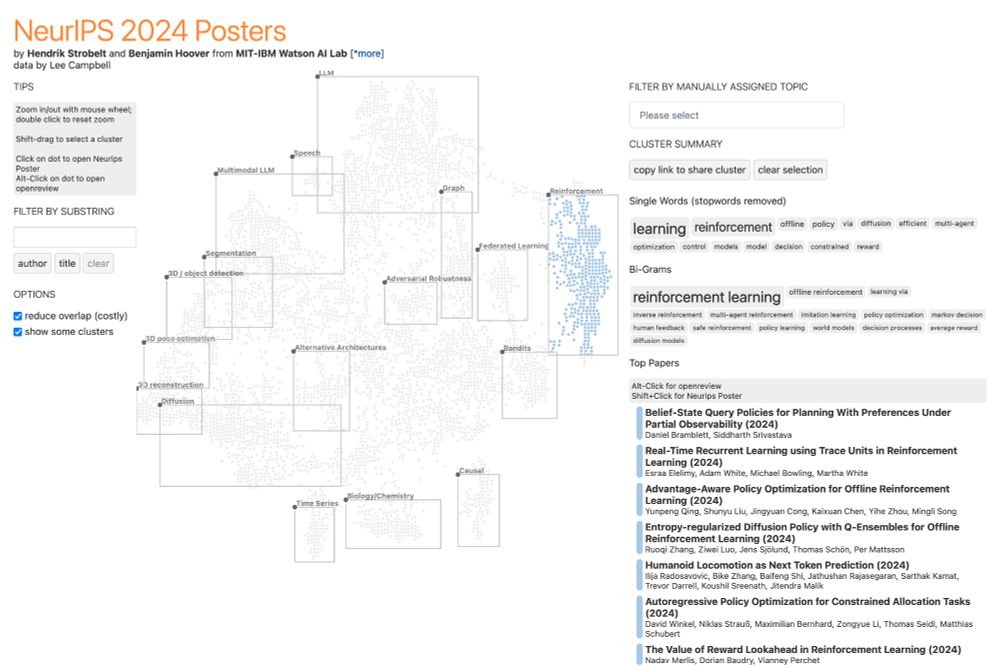

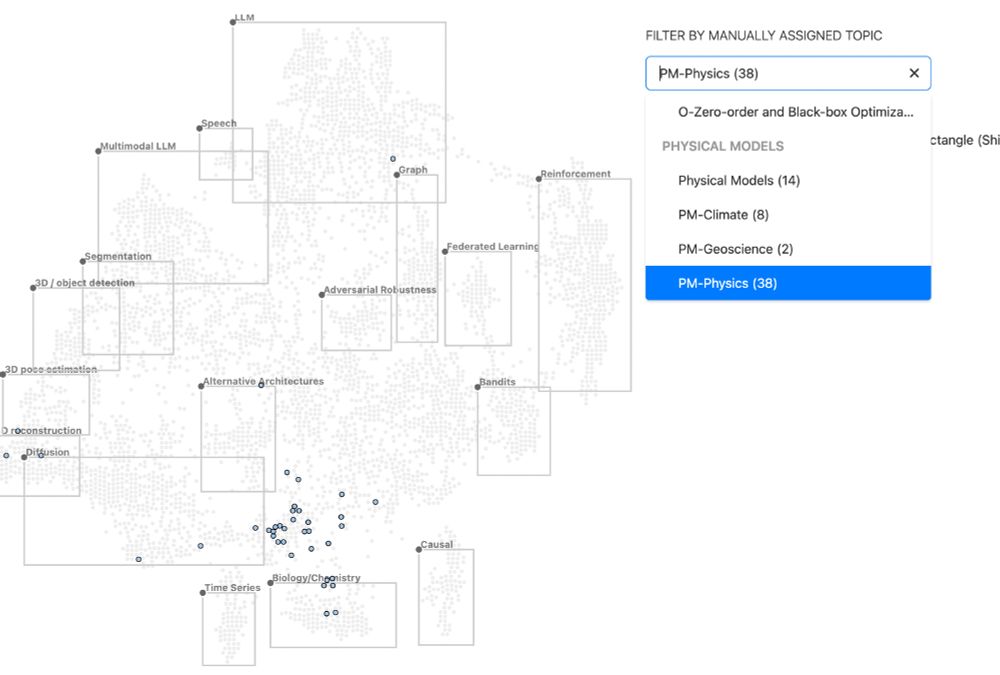

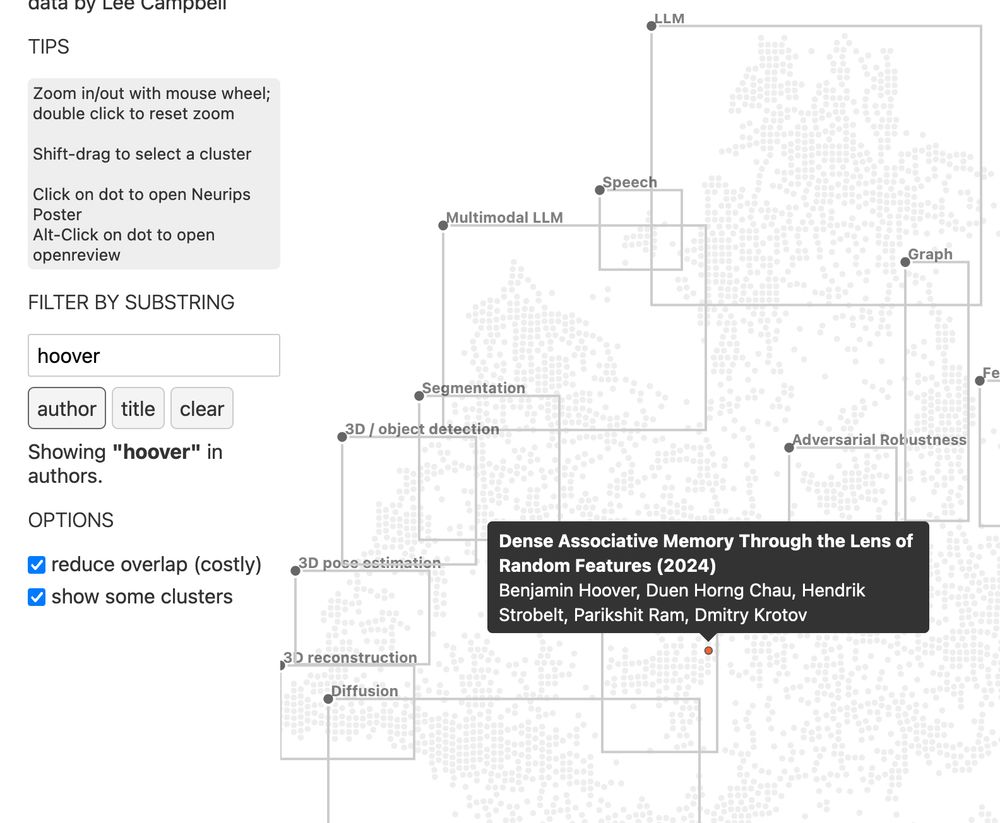

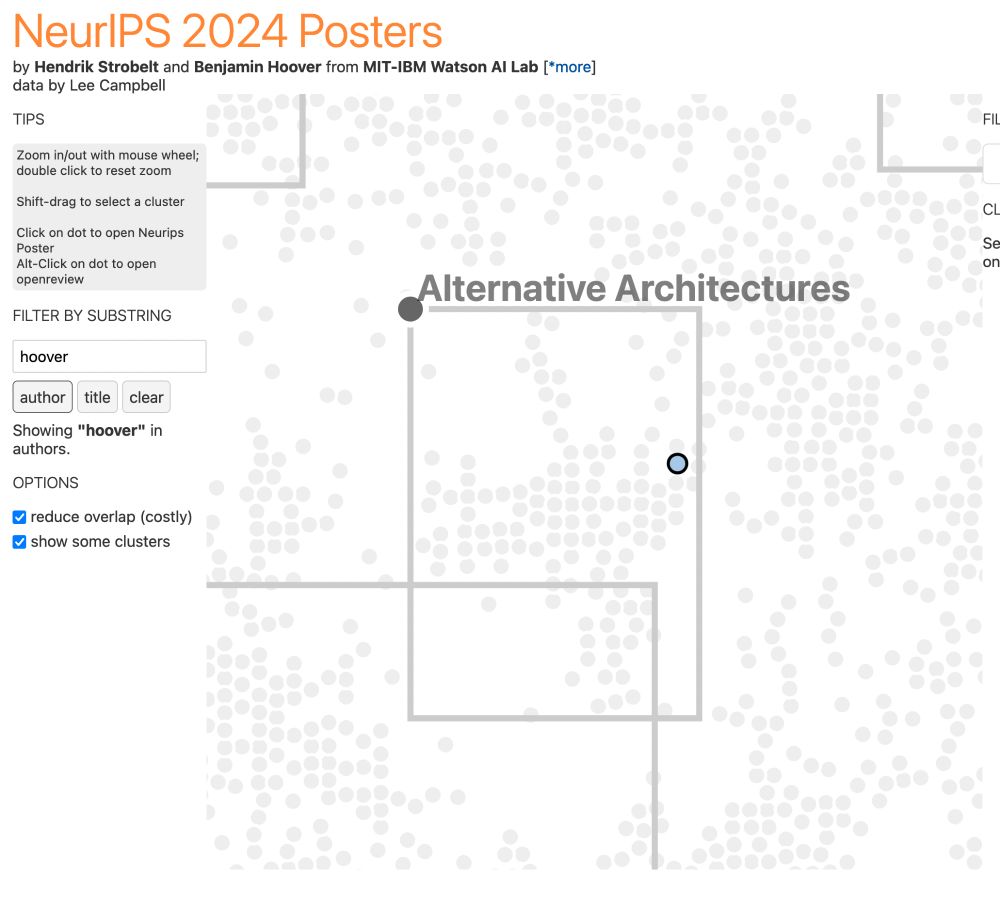

1. Start with the official NeurIPS explorer by @henstr.bsky.social and @benhoover.bsky.social. It is infoviz par excellence. neurips2024.vizhub.ai

- browse all NeurIPS papers in a visual way

- select clusters of interest and get cluster summary

- ZOOOOM in

- filter by human-assigned keywords

- filter by substring (authors, titles)

neurips2024.vizhub.ai

#neurips by IBM Research Cambridge

You can hear it here: podcasts.apple.com/us/podcast/t...
