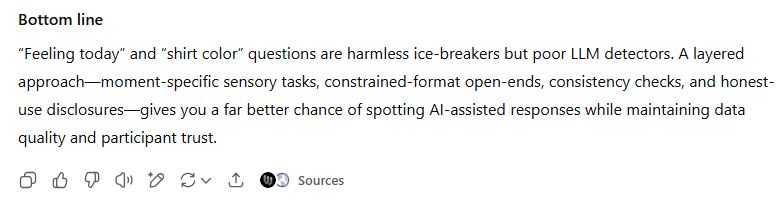

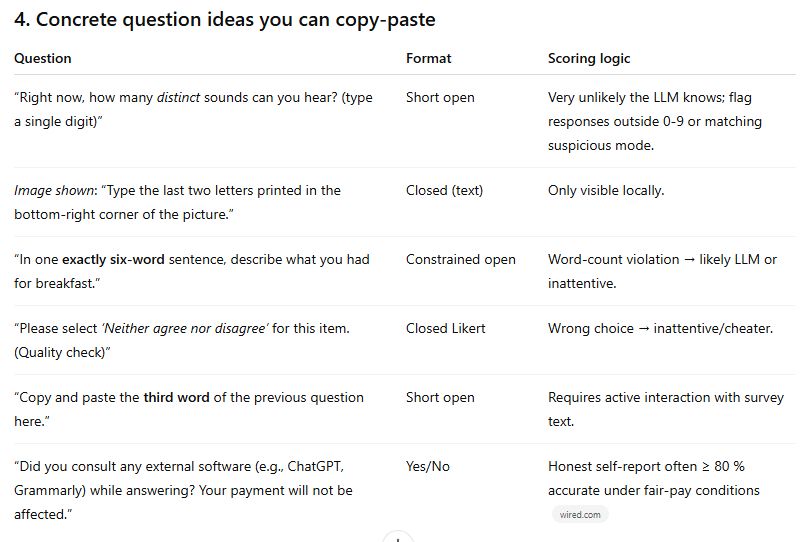

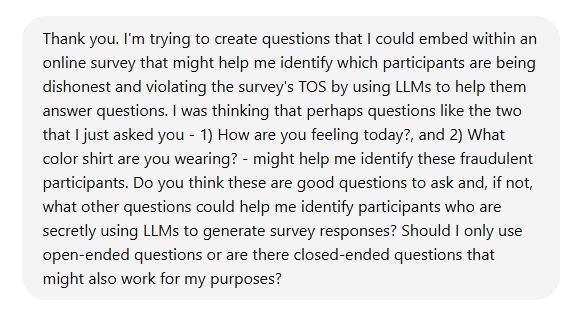

Cognitive psychologist. Cornell University postdoc & researcher at Media Ecosystem Observatory. Previously UToronto (Munk School), UMiami, & UWaterloo | Bullshitology, conspiracy beliefs, political propaganda, metacognition 🧠☘️

bullshitology.substack.com/p/down-the-c...

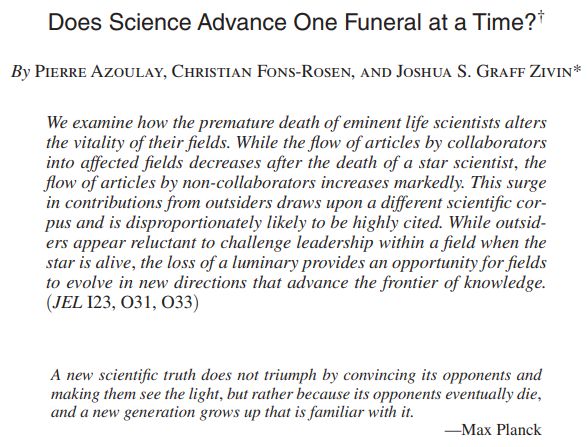

Look out for "Certainly!" It's an LLM red flag.