Postdoc at Columbia | ML PhD, Georgia Tech | https://www.mehai.dev/

We invite short papers or interactive demos on AI for neural, physiological or behavioral data.

Submit by Aug 22 👉 brainbodyfm-workshop.github.io

This is 10x more data than POYO-1.

POYO+ is queried at decoding time, so it can decode any number of tasks, and the set of tasks can change depending on the context.
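The post does not say how the queries are implemented. One common way to make a decoder queryable is Perceiver-style cross-attention, where each task owns a query vector that attends over the model's latent tokens. The pure-Python sketch below illustrates that general idea under simplifying assumptions (no learned key/value projections, no output heads); it is not POYO+'s actual architecture, and `decode`, `cross_attend`, and the task names are hypothetical.

```python
import math
from typing import Dict, List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max score for numerical stability before exponentiating.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attend(query: Vector, latents: List[Vector]) -> Vector:
    # Scaled dot-product attention of one query over the latent tokens.
    # Simplification (assumption): keys and values are the latents themselves,
    # with no learned key/value projections.
    scale = 1.0 / math.sqrt(len(query))
    weights = softmax([dot(query, z) * scale for z in latents])
    dim = len(latents[0])
    return [sum(w * z[d] for w, z in zip(weights, latents)) for d in range(dim)]


def decode(
    latents: List[Vector], task_queries: Dict[str, Vector], requested: List[str]
) -> Dict[str, Vector]:
    # Hypothetical helper: only the requested tasks are decoded, one query each.
    return {task: cross_attend(task_queries[task], latents) for task in requested}
```

For example, `decode(latents, queries, ["hand_velocity"])` returns a single readout, while passing `["hand_velocity", "gaze"]` returns two readouts from the same latents. In this sketch, changing which tasks are decoded only changes which queries are passed in.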

I had fun making this plot for the opening talk. It's exciting to see the exponential growth in the amount of pretraining data 🚀

I compiled a list of neuro-foundation models for EPhys and OPhys: github.com/mazabou/awes...

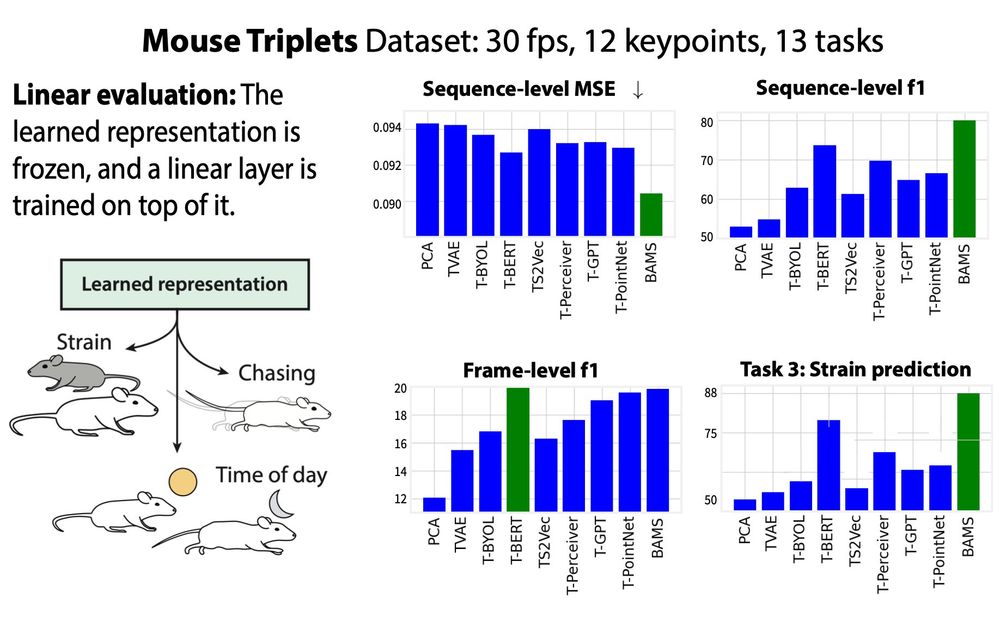

These multi-task benchmarks are designed to evaluate the representations learned by unsupervised methods, using 13 readout tasks for mice and 50 for flies.
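The post does not specify how the readouts are fit. As an illustration only, assuming each readout task is a binary label and each readout is a linear (logistic-regression) probe trained on frozen embeddings, the evaluation loop could look like the pure-Python sketch below. `train_probe`, `evaluate`, and the task names are hypothetical, and regression-style readouts would need a different loss.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def logit(w: Vector, b: float, x: Vector) -> float:
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def train_probe(
    features: List[Vector], labels: List[int], lr: float = 0.5, epochs: int = 200
) -> Tuple[Vector, float]:
    # Logistic-regression readout fit by full-batch gradient descent.
    # The features are frozen: only the probe weights w and bias b change.
    dim = len(features[0])
    n = len(features)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(features, labels):
            # Gradient of the log loss w.r.t. the logit is sigmoid(logit) - y.
            err = 1.0 / (1.0 + math.exp(-logit(w, b, x))) - y
            for d in range(dim):
                grad_w[d] += err * x[d]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def accuracy(w: Vector, b: float, features: List[Vector], labels: List[int]) -> float:
    hits = sum(int((logit(w, b, x) > 0) == (y == 1)) for x, y in zip(features, labels))
    return hits / len(labels)


def evaluate(
    train_x: List[Vector],
    train_tasks: Dict[str, List[int]],
    test_x: List[Vector],
    test_tasks: Dict[str, List[int]],
) -> Dict[str, float]:
    # One independent probe per readout task, scored on held-out embeddings.
    scores = {}
    for task, labels in train_tasks.items():
        w, b = train_probe(train_x, labels)
        scores[task] = accuracy(w, b, test_x, test_tasks[task])
    return scores
```

Training a separate probe per task keeps the readouts independent, so the same frozen embeddings can be scored against 13 tasks or 50 tasks without changing the representation model.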

Check out our #NeurIPS2023 Spotlight paper, where we present a self-supervised learning (SSL) method for learning multiscale representations of behavior! 🧠🟦

Link: multiscale-behavior.github.io

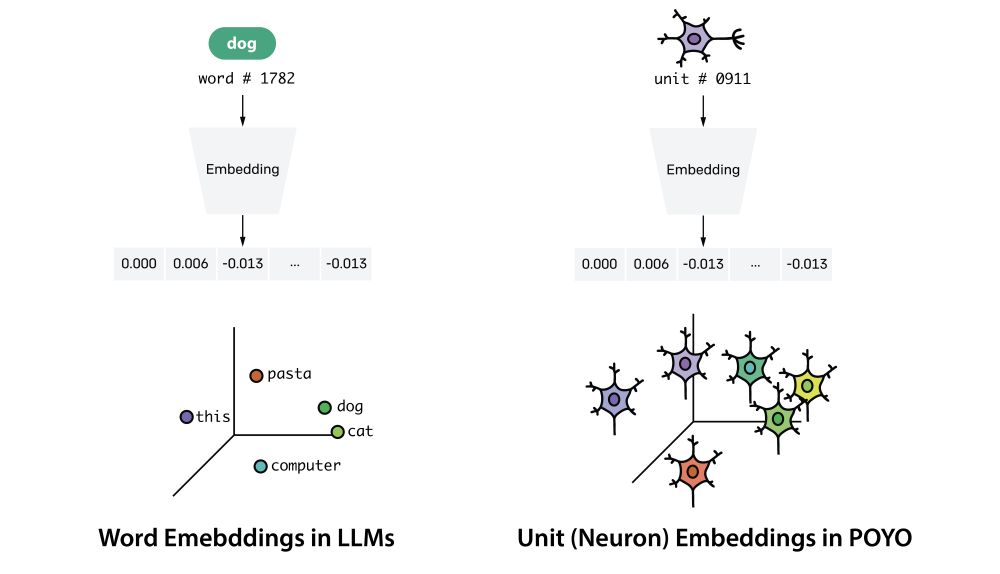

Check out our #NeurIPS2023 paper, where we show that it’s possible to transfer from a large pretrained model to achieve SOTA! 🧠🟦

Link: poyo-brain.github.io 🧵
