Paper: arxiv.org/abs/2506.09301 to appear @ #ACL2025 (Main)

This position paper argues that rather than forcing web agents to adapt to UIs designed for humans, we should develop a new interface optimized for web agents, which we call the Agentic Web Interface (AWI).

arxiv.org/abs/2506.10953

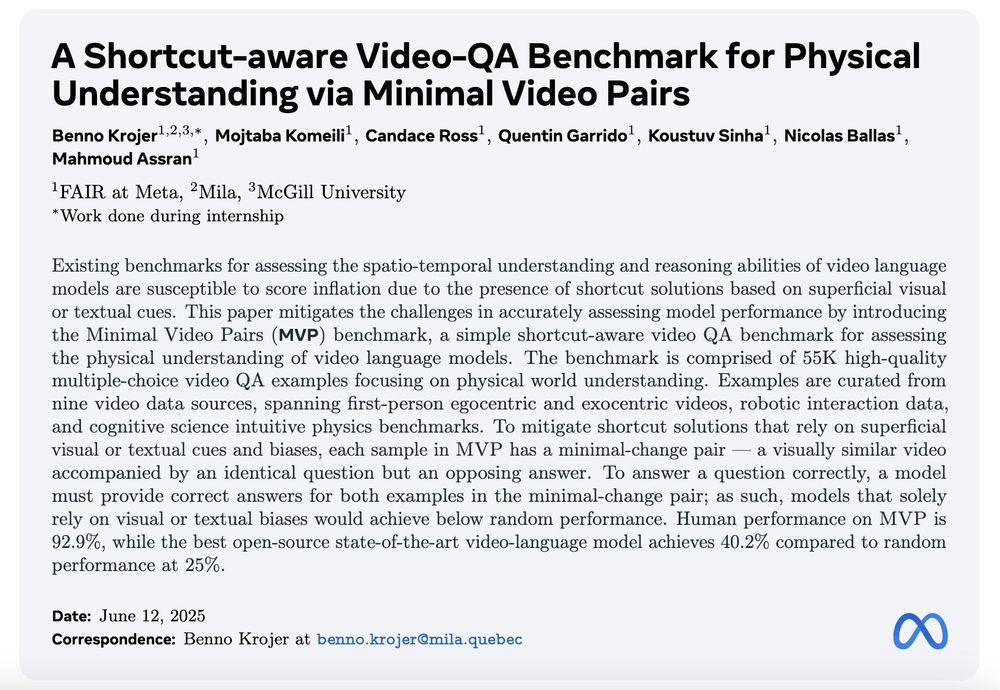

We ask 🤔

What subtle shortcuts are VideoLLMs taking on spatio-temporal questions?

And how can we instead curate shortcut-robust examples at large scale?

We release: MVPBench

Details 👇🔬

Our #ACL2025 (Main) paper shows that hallucinations under irrelevant contexts follow a systematic failure mode — revealing how LLMs generalize using abstract classes + context cues, albeit unreliably.

📎 Paper: arxiv.org/abs/2505.22630 1/n

Work w/ fantastic advisors Dima Bahdanau and @sivareddyg.bsky.social

Thread 🧵:

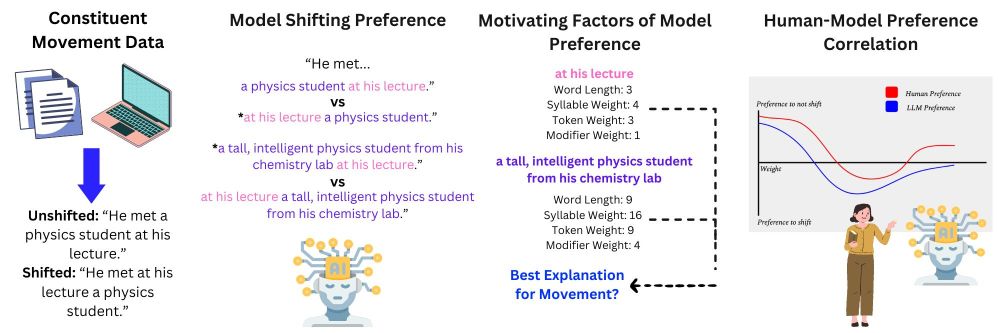

We examine constituent ordering preferences between humans and LLMs; we present two main findings… 🧵

@emnlpmeeting.bsky.social just wrapped up in Miami, and my colleagues presented six papers there:

From interpretability to bias/fairness and cultural understanding -> 🧵

Let's complete the list with three more 🧵
