Looking forward to presenting this work at #JointActionMeeting2025 in my hometown (Turin, IT)!

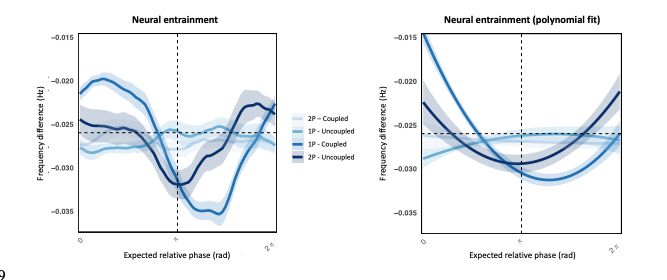

Neural entrainment tracks a partner’s rhythm more generally

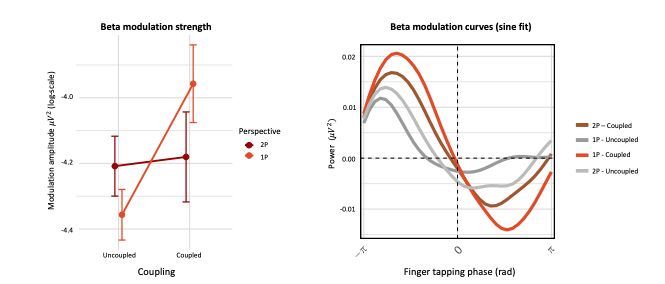

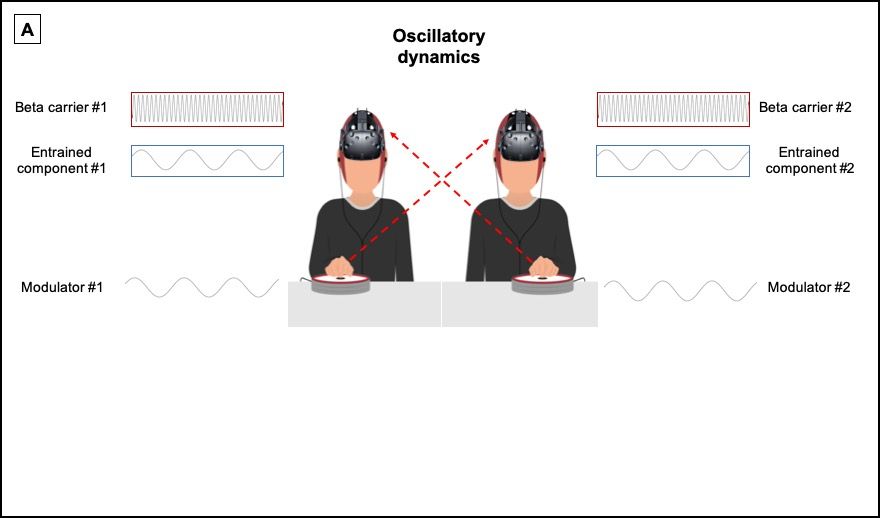

Beta modulation underlies deeper self–other integration, supporting a shared body schema for action

- Neural entrainment (low-frequency convergence across brains)

- Beta modulation (~20 Hz signals tied to perceiving partner movements)
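
To make "low-frequency convergence across brains" concrete, here is a minimal sketch of one common inter-brain synchrony measure, the phase-locking value (PLV), on simulated data. It is not necessarily the measure used in this work. The helper names (`bandpass_fft`, `analytic_signal`, `plv`), the 1.5 to 3.5 Hz band and the simulated "brains" are all hypothetical, chosen only for illustration.

```python
import numpy as np


def bandpass_fft(x, fs, lo, hi):
    """Crude zero-phase band-pass: zero every FFT bin outside [lo, hi] Hz."""
    freqs = np.fft.rfftfreq(x.size, d=1 / fs)
    spectrum = np.fft.rfft(x)
    spectrum[(freqs < lo) | (freqs > hi)] = 0
    return np.fft.irfft(spectrum, n=x.size)


def analytic_signal(x):
    """FFT-based analytic signal (same construction as scipy.signal.hilbert)."""
    n = x.size
    h = np.zeros(n)
    h[0] = 1
    if n % 2 == 0:
        h[n // 2] = 1
        h[1:n // 2] = 2
    else:
        h[1:(n + 1) // 2] = 2
    return np.fft.ifft(np.fft.fft(x) * h)


def plv(x, y, fs, lo, hi):
    """Phase-locking value: consistency of the phase difference within [lo, hi] Hz."""
    phase_x = np.angle(analytic_signal(bandpass_fft(x, fs, lo, hi)))
    phase_y = np.angle(analytic_signal(bandpass_fft(y, fs, lo, hi)))
    return np.abs(np.mean(np.exp(1j * (phase_x - phase_y))))


# Hypothetical example: two "brains" following a shared 2.4 Hz rhythm with a fixed lag,
# plus an unrelated noise signal as a control.
fs = 250
t = np.arange(20 * fs) / fs
rng = np.random.default_rng(0)
brain_a = np.sin(2 * np.pi * 2.4 * t) + 0.5 * rng.standard_normal(t.size)
brain_b = np.sin(2 * np.pi * 2.4 * t + 0.8) + 0.5 * rng.standard_normal(t.size)
unrelated = rng.standard_normal(t.size)

plv_pair = plv(brain_a, brain_b, fs, 1.5, 3.5)       # should be close to 1
plv_control = plv(brain_a, unrelated, fs, 1.5, 3.5)  # should be much lower
print(f"PLV pair: {plv_pair:.2f}, PLV control: {plv_control:.2f}")
```

Because PLV only measures how consistent the phase difference is over time, the fixed 0.8 rad lag between the two simulated brains does not lower it.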

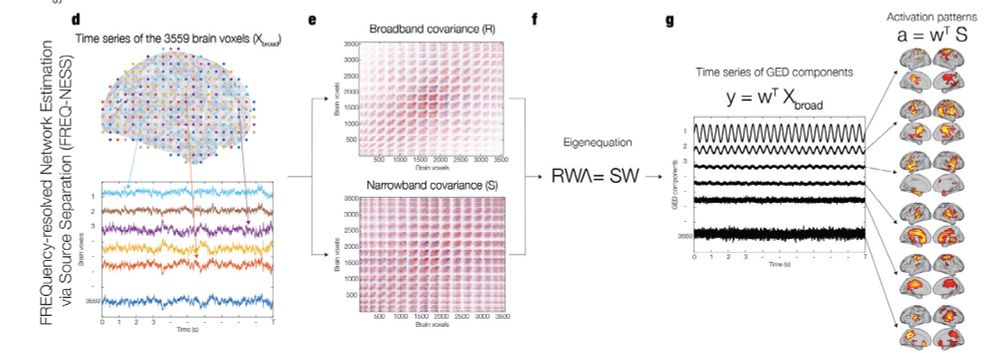

🛠️ FREQ-NESS has been a long time in the making.

If you’re interested in exploring frequency-resolved brain networks in your own data — check out the toolbox and documentation here:

👉 shorturl.at/mOVKF

Feel free to reach out for clarification or collaborations #OpenScience #Toolbox

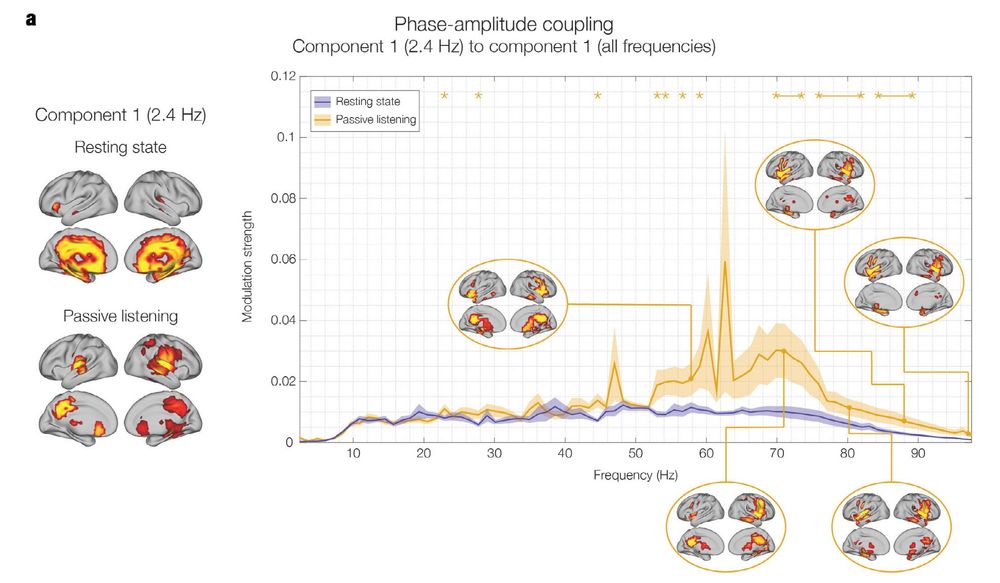

Building on this network separation, we also tracked cross-frequency coupling (CFC) between networks.

During passive listening to the metronome, the phase of low-frequency (2.4 Hz) auditory networks selectively modulates gamma-band amplitude in more distributed medial temporal networks.
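
The phase-to-amplitude coupling described above can be illustrated with the normalized mean vector length (MVL), one common phase-amplitude coupling measure; the thread does not say which CFC metric FREQ-NESS uses, so treat this as an assumption. Here `phase_net` stands in for a hypothetical low-frequency auditory network time course and `amp_net_*` for a hypothetical medial temporal network; the 1.5 to 3.5 Hz phase band and 30 to 50 Hz gamma band are illustrative choices.

```python
import numpy as np


def bandpass_fft(x, fs, lo, hi):
    """Crude zero-phase band-pass: zero every FFT bin outside [lo, hi] Hz."""
    freqs = np.fft.rfftfreq(x.size, d=1 / fs)
    spectrum = np.fft.rfft(x)
    spectrum[(freqs < lo) | (freqs > hi)] = 0
    return np.fft.irfft(spectrum, n=x.size)


def analytic_signal(x):
    """FFT-based analytic signal (same construction as scipy.signal.hilbert)."""
    n = x.size
    h = np.zeros(n)
    h[0] = 1
    if n % 2 == 0:
        h[n // 2] = 1
        h[1:n // 2] = 2
    else:
        h[1:(n + 1) // 2] = 2
    return np.fft.ifft(np.fft.fft(x) * h)


def pac_mvl(phase_sig, amp_sig, fs, phase_band, amp_band):
    """Normalized mean vector length |mean(A * exp(i*phi))| / mean(A), in [0, 1]."""
    phi = np.angle(analytic_signal(bandpass_fft(phase_sig, fs, *phase_band)))
    amp = np.abs(analytic_signal(bandpass_fft(amp_sig, fs, *amp_band)))
    return np.abs(np.mean(amp * np.exp(1j * phi))) / np.mean(amp)


# Hypothetical signals: a 2.4 Hz "auditory" network and two 40 Hz "gamma" networks,
# one whose amplitude follows the 2.4 Hz phase and one with constant amplitude.
fs = 500
t = np.arange(20 * fs) / fs
rng = np.random.default_rng(1)
phase_net = np.sin(2 * np.pi * 2.4 * t) + 0.1 * rng.standard_normal(t.size)
envelope = 1 + 0.8 * np.cos(2 * np.pi * 2.4 * t)
amp_net_coupled = envelope * np.sin(2 * np.pi * 40 * t) + 0.1 * rng.standard_normal(t.size)
amp_net_uncoupled = np.sin(2 * np.pi * 40 * t) + 0.1 * rng.standard_normal(t.size)

mvl_coupled = pac_mvl(phase_net, amp_net_coupled, fs, (1.5, 3.5), (30, 50))
mvl_uncoupled = pac_mvl(phase_net, amp_net_uncoupled, fs, (1.5, 3.5), (30, 50))
print(f"MVL coupled: {mvl_coupled:.2f}, uncoupled: {mvl_uncoupled:.2f}")
```

In practice, raw MVL values are usually compared against a surrogate distribution (for example, time-shifted amplitude series) before any coupling is claimed; that step is omitted here for brevity.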

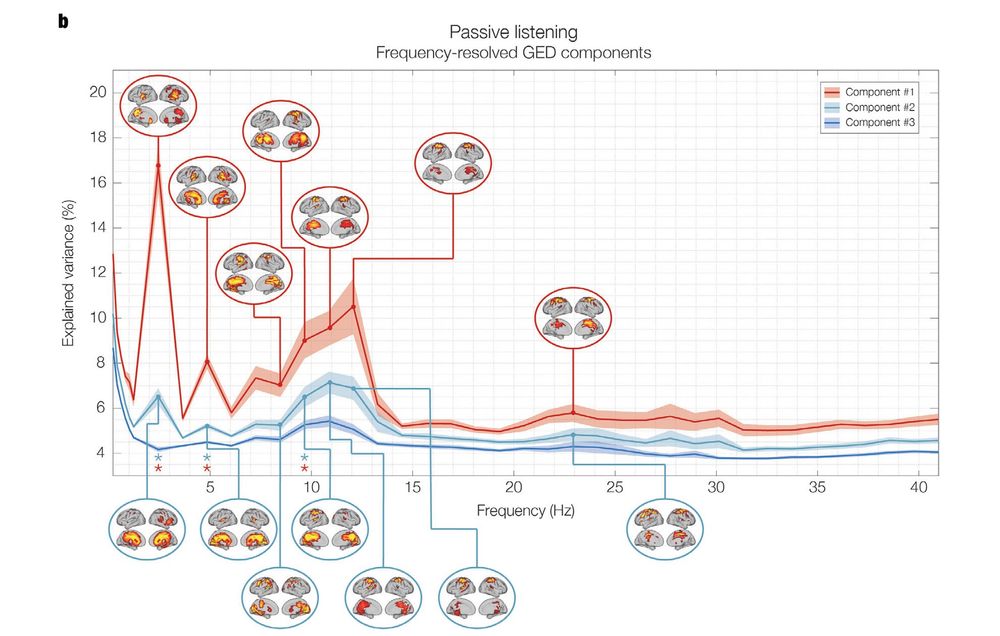

🎧 Auditory stimulation reshapes the entire frequency-resolved network landscape:

• EMERGENCE: Attunement to the 2.4 Hz stimulation

• RE-ARRANGEMENT: Spatial shift of alpha from occipital to sensorimotor regions, and a spectral shift toward high-alpha activity

• INVARIANCE: Beta networks remain unchanged

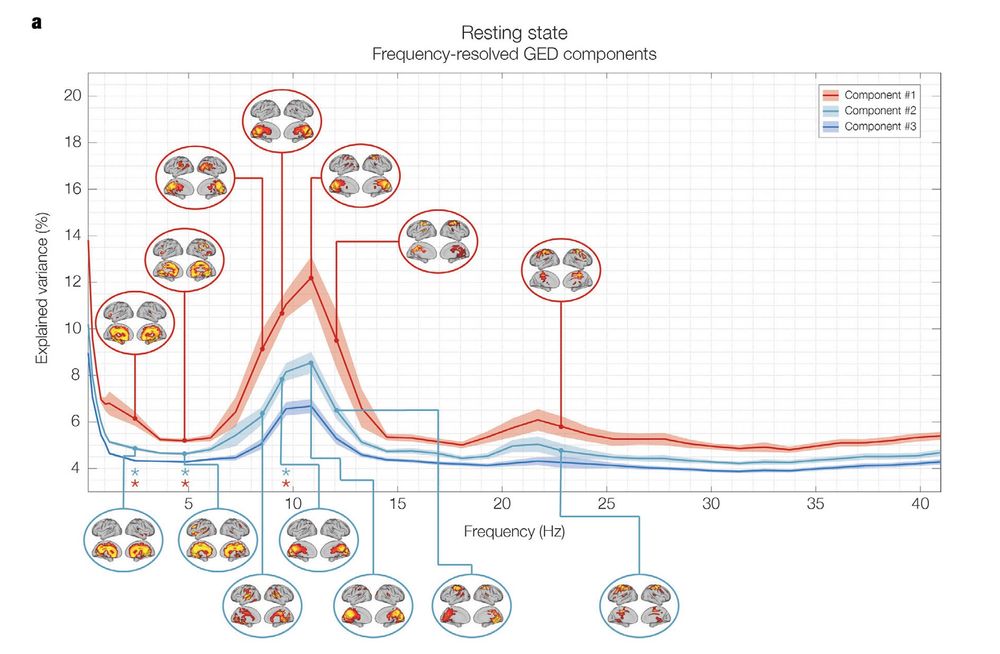

🧘‍♂️ During rest, FREQ-NESS reliably separates well-known resting-state brain networks: the Default Mode Network, alpha-band parieto-occipital, and motor-beta sensorimotor topographies. These textbook configurations emerge directly from the data, separated by frequency and without predefined regions.
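
A minimal sketch of frequency-resolved network separation, assuming a generalized eigendecomposition (GED) that contrasts the narrowband covariance around a target frequency with the broadband covariance. This is an illustrative approximation, not the FREQ-NESS toolbox code: `ged_network`, the ±1 Hz band and the simulated 10 Hz and 20 Hz sources are hypothetical. To stay numpy-only, the generalized problem is reduced to a standard symmetric one through a Cholesky factorization of the broadband covariance.

```python
import numpy as np


def bandpass_fft(data, fs, lo, hi):
    """Zero-phase FFT band-pass along the last (time) axis."""
    n = data.shape[-1]
    freqs = np.fft.rfftfreq(n, d=1 / fs)
    spectrum = np.fft.rfft(data, axis=-1)
    spectrum[..., (freqs < lo) | (freqs > hi)] = 0
    return np.fft.irfft(spectrum, n=n, axis=-1)


def ged_network(data, fs, f0, half_width=1.0):
    """Hypothetical helper: strongest spatial component at f0 via GED.

    Solves S w = lambda R w, where S is the narrowband covariance around f0
    and R is the broadband covariance (both channels x channels).
    """
    S = np.cov(bandpass_fft(data, fs, f0 - half_width, f0 + half_width))
    R = np.cov(data)
    # Reduce to a standard symmetric eigenproblem using R = L L^T.
    L = np.linalg.cholesky(R)
    L_inv = np.linalg.inv(L)
    evals, V = np.linalg.eigh(L_inv @ S @ L_inv.T)
    order = np.argsort(evals)[::-1]        # largest eigenvalue first
    evals, V = evals[order], V[:, order]
    W = np.linalg.solve(L.T, V)             # back-transform: w = L^{-T} v
    w = W[:, 0]                             # spatial filter of the top component
    pattern = S @ w                         # activation pattern (topography)
    return evals, w, pattern


# Simulated 8-channel recording: a 10 Hz "alpha" and a 20 Hz "beta" source with
# different random topographies, plus white sensor noise.
fs = 200
t = np.arange(30 * fs) / fs
rng = np.random.default_rng(2)
a_alpha = rng.standard_normal(8)
a_beta = rng.standard_normal(8)
data = (np.outer(a_alpha, np.sin(2 * np.pi * 10 * t))
        + np.outer(a_beta, np.sin(2 * np.pi * 20 * t))
        + 0.5 * rng.standard_normal((8, t.size)))

_, _, pattern_alpha = ged_network(data, fs, 10)
_, _, pattern_beta = ged_network(data, fs, 20)
# Eigenvector signs are arbitrary, so compare topographies by absolute correlation.
print(abs(np.corrcoef(pattern_alpha, a_alpha)[0, 1]))  # should be close to 1
print(abs(np.corrcoef(pattern_beta, a_beta)[0, 1]))    # should be close to 1
```

Scanning `f0` over a grid of frequencies and keeping the top eigenvalue and pattern at each step would give a rough frequency-by-frequency picture of which spatial networks dominate where.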

I co-developed the method with Leonardo Bonetti at @musicinthebrain.bsky.social.

🔧 The FREQ-NESS Toolbox is #open-source on GitHub:

shorturl.at/JT6iO

I would love to be added to the list.

Thanks in advance.
