Interpretability and Computer Vision

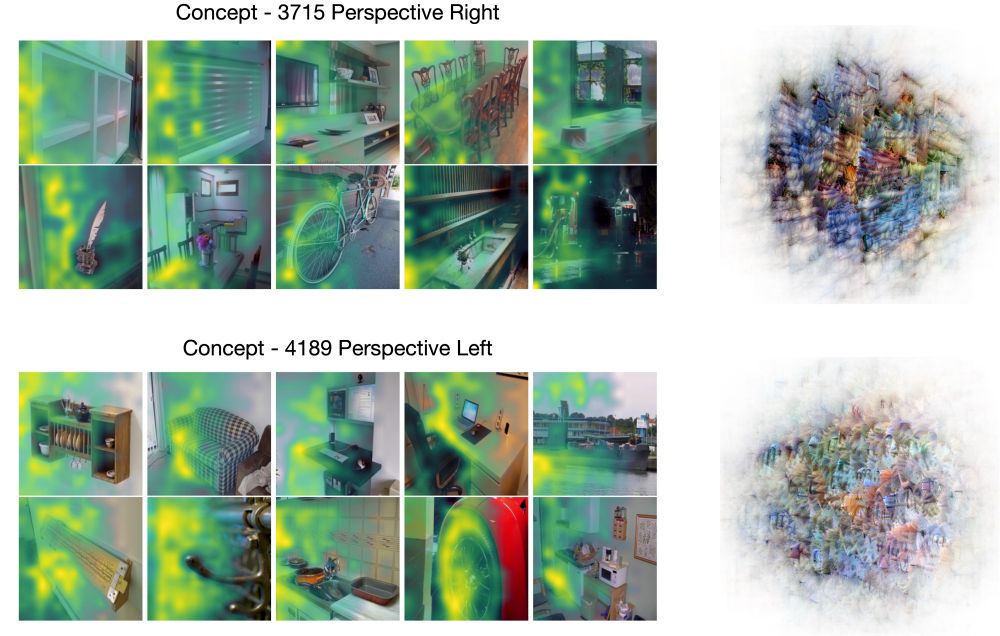

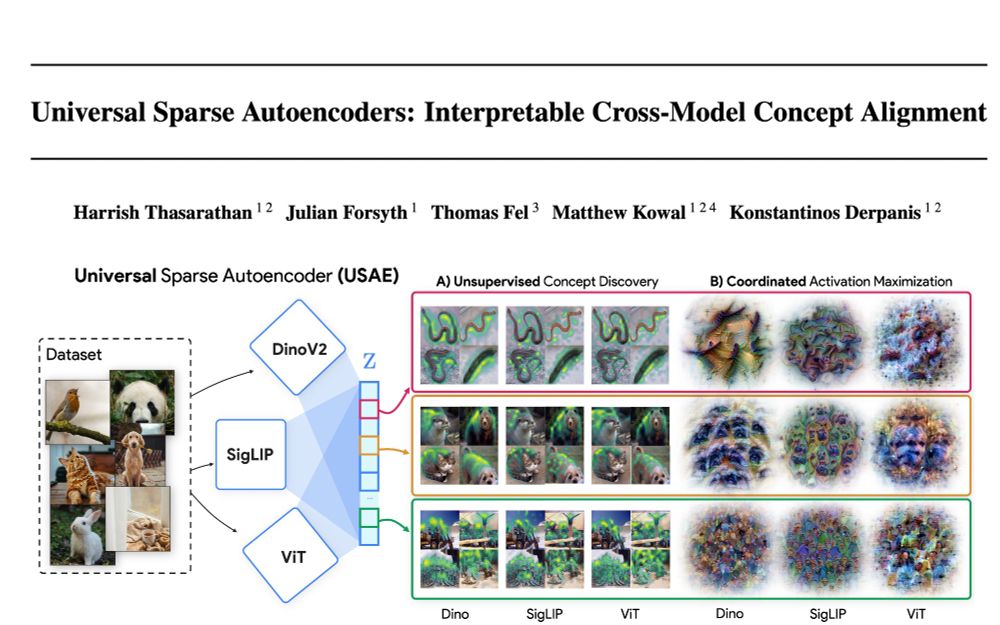

This opens new paths for understanding model differences!

(7/9)

arxiv.org/abs/2502.03714

(1/9)