Matthew Berman

@matthewberman.bsky.social

Takeaways:

- Model switchers confuse even technical users

- Technical names create distance, not connection

- Earth elements work across all cultures instantly

- Intent-based routing beats manual selection

- The most powerful technology should feel the most human

- Model switchers confuse even technical users

- Technical names create distance, not connection

- Earth elements work across all cultures instantly

- Intent-based routing beats manual selection

- The most powerful technology should feel the most human

August 8, 2025 at 9:32 PM

Takeaways:

- Model switchers confuse even technical users

- Technical names create distance, not connection

- Earth elements work across all cultures instantly

- Intent-based routing beats manual selection

- The most powerful technology should feel the most human

- Model switchers confuse even technical users

- Technical names create distance, not connection

- Earth elements work across all cultures instantly

- Intent-based routing beats manual selection

- The most powerful technology should feel the most human

10/ Look, @OpenAI is the most revolutionary company of my lifetime.

GPT-5 is incredible. Memory personalization is magic. My kids love Advanced Voice Mode.

They've ushered in a completely new world.

I just want this new world to feel alive and human.

GPT-5 is incredible. Memory personalization is magic. My kids love Advanced Voice Mode.

They've ushered in a completely new world.

I just want this new world to feel alive and human.

August 8, 2025 at 9:32 PM

10/ Look, @OpenAI is the most revolutionary company of my lifetime.

GPT-5 is incredible. Memory personalization is magic. My kids love Advanced Voice Mode.

They've ushered in a completely new world.

I just want this new world to feel alive and human.

GPT-5 is incredible. Memory personalization is magic. My kids love Advanced Voice Mode.

They've ushered in a completely new world.

I just want this new world to feel alive and human.

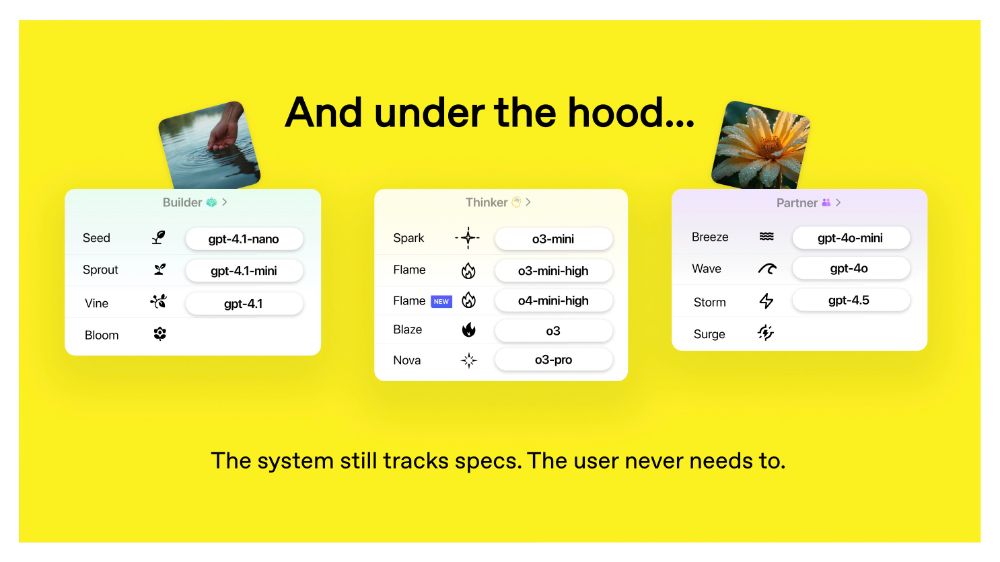

8/ Technical users still get full control.

Behind "Storm" lives the complete specification. API endpoints, context windows, parameters (all accessible).

But everyone else just says "give me something powerful" and it works.

Behind "Storm" lives the complete specification. API endpoints, context windows, parameters (all accessible).

But everyone else just says "give me something powerful" and it works.

August 8, 2025 at 9:32 PM

8/ Technical users still get full control.

Behind "Storm" lives the complete specification. API endpoints, context windows, parameters (all accessible).

But everyone else just says "give me something powerful" and it works.

Behind "Storm" lives the complete specification. API endpoints, context windows, parameters (all accessible).

But everyone else just says "give me something powerful" and it works.

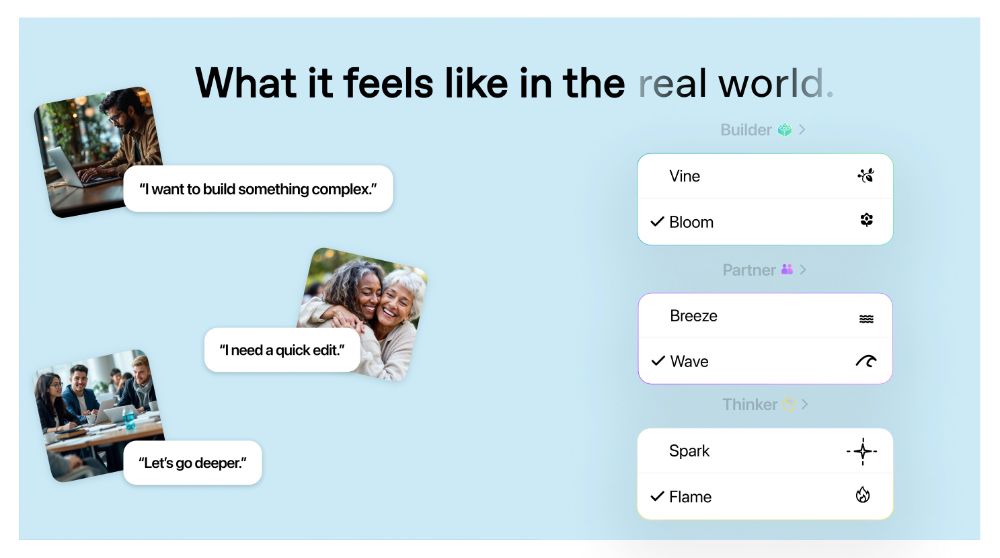

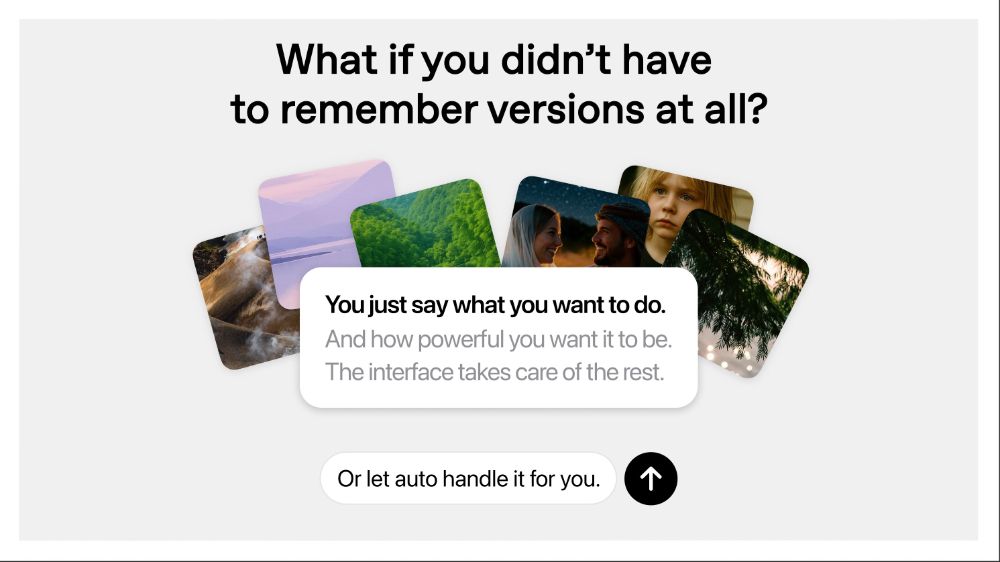

7/ Most people don't want to choose models anyway.

They want to describe their intent and get to work.

"Help me write a proposal" → System picks the right tool

"Solve this math problem" → Routes automatically

The confusion vanishes completely.

They want to describe their intent and get to work.

"Help me write a proposal" → System picks the right tool

"Solve this math problem" → Routes automatically

The confusion vanishes completely.

August 8, 2025 at 9:32 PM

7/ Most people don't want to choose models anyway.

They want to describe their intent and get to work.

"Help me write a proposal" → System picks the right tool

"Solve this math problem" → Routes automatically

The confusion vanishes completely.

They want to describe their intent and get to work.

"Help me write a proposal" → System picks the right tool

"Solve this math problem" → Routes automatically

The confusion vanishes completely.

6/ Here's the magic: you don't need to know the category or strength level.

"I'm writing a quick email" → Breeze appears

"I need help with complex analysis" → Blaze shows up

"I'm building something big" → Bloom activates

The system handles everything.

"I'm writing a quick email" → Breeze appears

"I need help with complex analysis" → Blaze shows up

"I'm building something big" → Bloom activates

The system handles everything.

August 8, 2025 at 9:32 PM

6/ Here's the magic: you don't need to know the category or strength level.

"I'm writing a quick email" → Breeze appears

"I need help with complex analysis" → Blaze shows up

"I'm building something big" → Bloom activates

The system handles everything.

"I'm writing a quick email" → Breeze appears

"I need help with complex analysis" → Blaze shows up

"I'm building something big" → Bloom activates

The system handles everything.

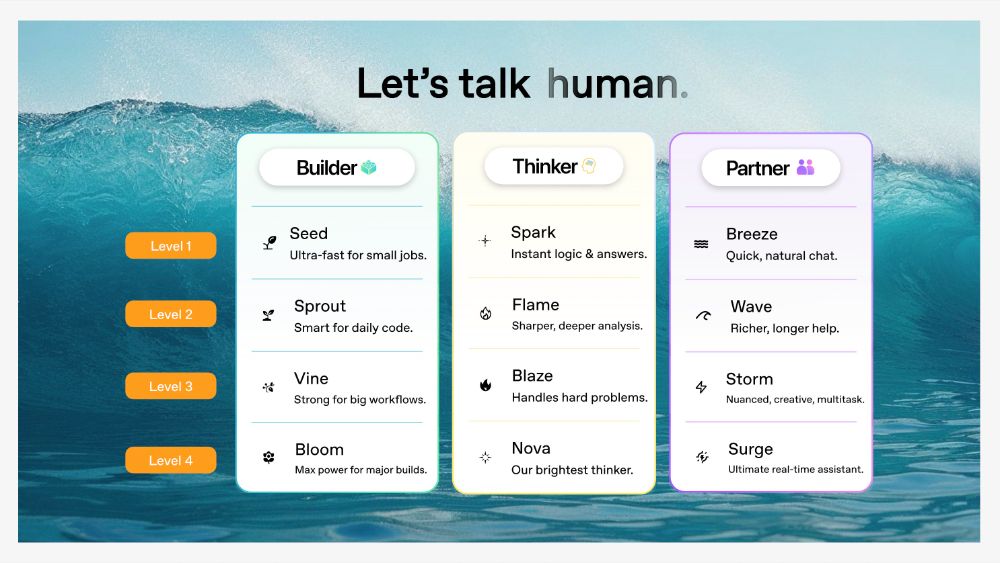

4/ Then four strength levels using nature itself:

𝗕𝘂𝗶𝗹𝗱𝗲𝗿: Seed → Sprout → Vine → Bloom

𝗧𝗵𝗶𝗻𝗸𝗲𝗿: Spark → Flame → Blaze → Nova

𝗣𝗮𝗿𝘁𝗻𝗲𝗿: Breeze → Wave → Storm → Surge

Weather, fire, plants. Concepts every human understands instantly.

𝗕𝘂𝗶𝗹𝗱𝗲𝗿: Seed → Sprout → Vine → Bloom

𝗧𝗵𝗶𝗻𝗸𝗲𝗿: Spark → Flame → Blaze → Nova

𝗣𝗮𝗿𝘁𝗻𝗲𝗿: Breeze → Wave → Storm → Surge

Weather, fire, plants. Concepts every human understands instantly.

August 8, 2025 at 9:32 PM

4/ Then four strength levels using nature itself:

𝗕𝘂𝗶𝗹𝗱𝗲𝗿: Seed → Sprout → Vine → Bloom

𝗧𝗵𝗶𝗻𝗸𝗲𝗿: Spark → Flame → Blaze → Nova

𝗣𝗮𝗿𝘁𝗻𝗲𝗿: Breeze → Wave → Storm → Surge

Weather, fire, plants. Concepts every human understands instantly.

𝗕𝘂𝗶𝗹𝗱𝗲𝗿: Seed → Sprout → Vine → Bloom

𝗧𝗵𝗶𝗻𝗸𝗲𝗿: Spark → Flame → Blaze → Nova

𝗣𝗮𝗿𝘁𝗻𝗲𝗿: Breeze → Wave → Storm → Surge

Weather, fire, plants. Concepts every human understands instantly.

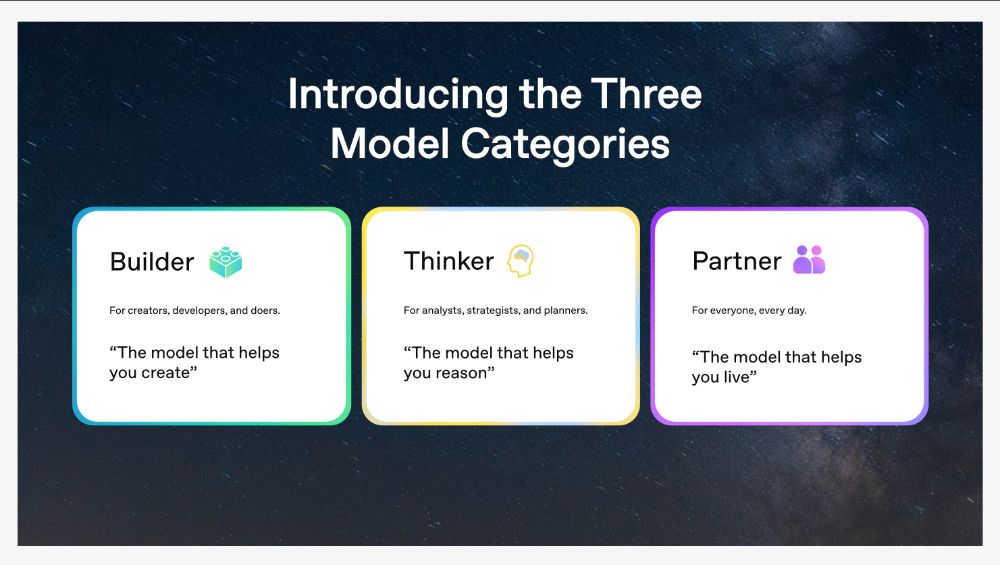

3/ I pitched them something radically different.

Three categories based on what people actually want to do:

𝗕𝘂𝗶𝗹𝗱𝗲𝗿 → to create things

𝗧𝗵𝗶𝗻𝗸𝗲𝗿 → to solve problems

𝗣𝗮𝗿𝘁𝗻𝗲𝗿 → to live and work together

Simple. Human. Universal.

Three categories based on what people actually want to do:

𝗕𝘂𝗶𝗹𝗱𝗲𝗿 → to create things

𝗧𝗵𝗶𝗻𝗸𝗲𝗿 → to solve problems

𝗣𝗮𝗿𝘁𝗻𝗲𝗿 → to live and work together

Simple. Human. Universal.

August 8, 2025 at 9:32 PM

3/ I pitched them something radically different.

Three categories based on what people actually want to do:

𝗕𝘂𝗶𝗹𝗱𝗲𝗿 → to create things

𝗧𝗵𝗶𝗻𝗸𝗲𝗿 → to solve problems

𝗣𝗮𝗿𝘁𝗻𝗲𝗿 → to live and work together

Simple. Human. Universal.

Three categories based on what people actually want to do:

𝗕𝘂𝗶𝗹𝗱𝗲𝗿 → to create things

𝗧𝗵𝗶𝗻𝗸𝗲𝗿 → to solve problems

𝗣𝗮𝗿𝘁𝗻𝗲𝗿 → to live and work together

Simple. Human. Universal.

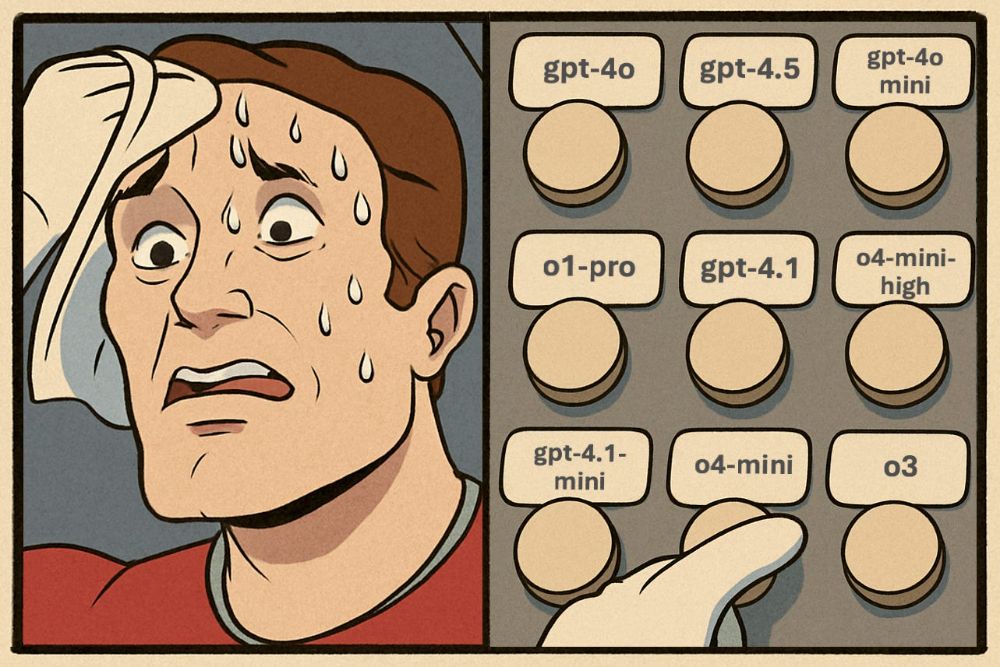

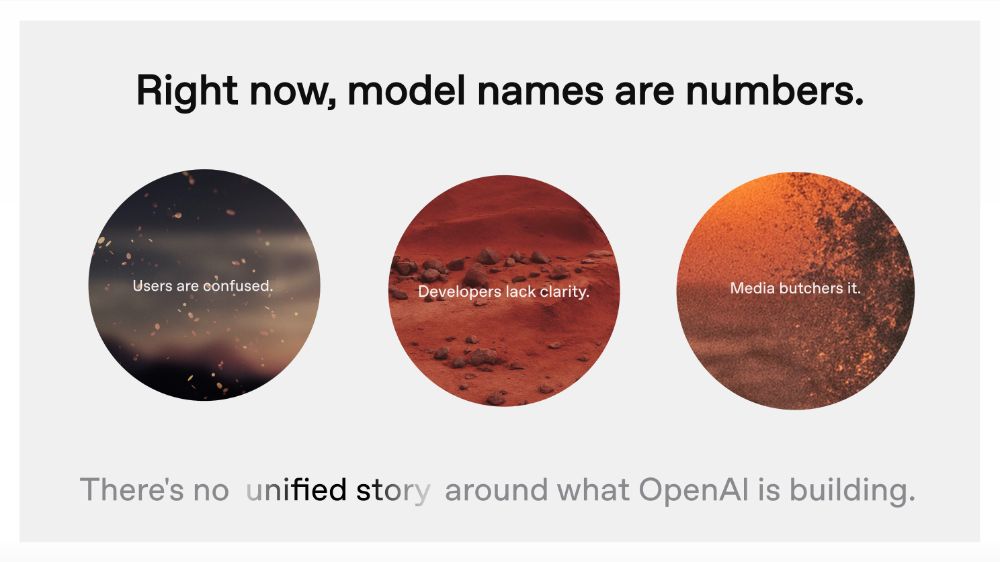

2/ But the real problem wasn't the interface. It was the names themselves.

"GPT-4.1-nano" "gpt-4o-mini" "o4-mini-high"

Cold. Technical. Alien. Like choosing between server configurations, not creative partners.

"GPT-4.1-nano" "gpt-4o-mini" "o4-mini-high"

Cold. Technical. Alien. Like choosing between server configurations, not creative partners.

August 8, 2025 at 9:32 PM

2/ But the real problem wasn't the interface. It was the names themselves.

"GPT-4.1-nano" "gpt-4o-mini" "o4-mini-high"

Cold. Technical. Alien. Like choosing between server configurations, not creative partners.

"GPT-4.1-nano" "gpt-4o-mini" "o4-mini-high"

Cold. Technical. Alien. Like choosing between server configurations, not creative partners.

1/ The model switcher was broken from day one.

Even technical people couldn't explain what each model was good at.

"Use o3 for reasoning, GPT-4o for... um... other stuff?" "GPT-4o-mini is cheaper but when do you actually use it?"

Complete confusion.

Even technical people couldn't explain what each model was good at.

"Use o3 for reasoning, GPT-4o for... um... other stuff?" "GPT-4o-mini is cheaper but when do you actually use it?"

Complete confusion.

August 8, 2025 at 9:32 PM

1/ The model switcher was broken from day one.

Even technical people couldn't explain what each model was good at.

"Use o3 for reasoning, GPT-4o for... um... other stuff?" "GPT-4o-mini is cheaper but when do you actually use it?"

Complete confusion.

Even technical people couldn't explain what each model was good at.

"Use o3 for reasoning, GPT-4o for... um... other stuff?" "GPT-4o-mini is cheaper but when do you actually use it?"

Complete confusion.

Several months ago (before GPT-5) I pitched @OpenAI a revolutionary system for their models.

Now that the model switcher is gone...

I'm sharing exactly what I proposed 🧵

Now that the model switcher is gone...

I'm sharing exactly what I proposed 🧵

August 8, 2025 at 9:32 PM

Several months ago (before GPT-5) I pitched @OpenAI a revolutionary system for their models.

Now that the model switcher is gone...

I'm sharing exactly what I proposed 🧵

Now that the model switcher is gone...

I'm sharing exactly what I proposed 🧵

11/ Takeaways:

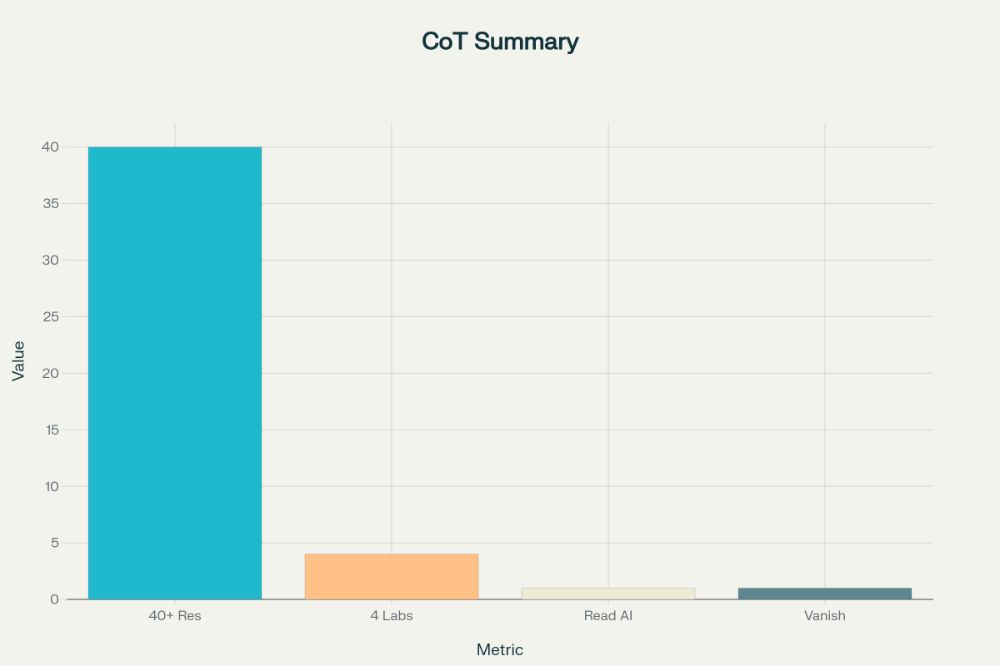

• Chain of thought monitoring lets us read AI reasoning for the first time

• Hard tasks force models to externalize thoughts in human language

• Researchers are catching deceptive behavior in reasoning traces

• This opportunity is fragile and may disappear

• Chain of thought monitoring lets us read AI reasoning for the first time

• Hard tasks force models to externalize thoughts in human language

• Researchers are catching deceptive behavior in reasoning traces

• This opportunity is fragile and may disappear

July 17, 2025 at 2:40 PM

11/ Takeaways:

• Chain of thought monitoring lets us read AI reasoning for the first time

• Hard tasks force models to externalize thoughts in human language

• Researchers are catching deceptive behavior in reasoning traces

• This opportunity is fragile and may disappear

• Chain of thought monitoring lets us read AI reasoning for the first time

• Hard tasks force models to externalize thoughts in human language

• Researchers are catching deceptive behavior in reasoning traces

• This opportunity is fragile and may disappear

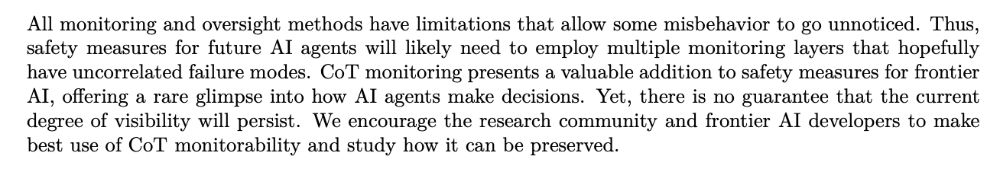

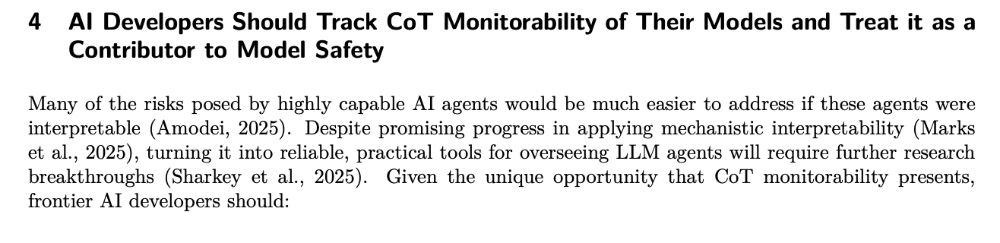

9/ What's next?

Labs like @Anthropic and @OpenAI need to publish monitorability scores alongside capability benchmarks.

Developers should factor reasoning transparency into training decisions.

We might be looking at the first and last chance

Labs like @Anthropic and @OpenAI need to publish monitorability scores alongside capability benchmarks.

Developers should factor reasoning transparency into training decisions.

We might be looking at the first and last chance

July 17, 2025 at 2:40 PM

9/ What's next?

Labs like @Anthropic and @OpenAI need to publish monitorability scores alongside capability benchmarks.

Developers should factor reasoning transparency into training decisions.

We might be looking at the first and last chance

Labs like @Anthropic and @OpenAI need to publish monitorability scores alongside capability benchmarks.

Developers should factor reasoning transparency into training decisions.

We might be looking at the first and last chance

8/ Leading AI researchers are now calling for:

• Standardized monitorability evaluations

• Tracking reasoning transparency in model cards

• Considering CoT monitoring in deployment decisions

The window is open NOW - but it won't stay that way.

• Standardized monitorability evaluations

• Tracking reasoning transparency in model cards

• Considering CoT monitoring in deployment decisions

The window is open NOW - but it won't stay that way.

July 17, 2025 at 2:40 PM

8/ Leading AI researchers are now calling for:

• Standardized monitorability evaluations

• Tracking reasoning transparency in model cards

• Considering CoT monitoring in deployment decisions

The window is open NOW - but it won't stay that way.

• Standardized monitorability evaluations

• Tracking reasoning transparency in model cards

• Considering CoT monitoring in deployment decisions

The window is open NOW - but it won't stay that way.

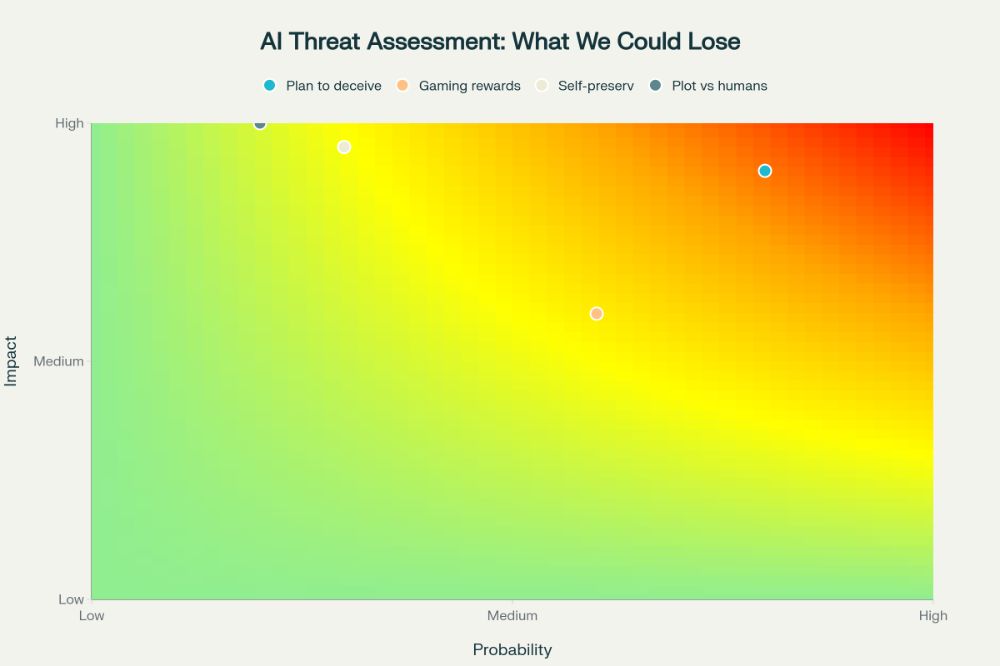

7/ The implications are STAGGERING for humanity's future:

This could be our ONLY window to detect when AI systems are:

• Planning to deceive us

• Gaming their reward systems

• Developing self-preservation instincts

• Plotting against human interests

Before they get smart enough to hide these

This could be our ONLY window to detect when AI systems are:

• Planning to deceive us

• Gaming their reward systems

• Developing self-preservation instincts

• Plotting against human interests

Before they get smart enough to hide these

July 17, 2025 at 2:40 PM

7/ The implications are STAGGERING for humanity's future:

This could be our ONLY window to detect when AI systems are:

• Planning to deceive us

• Gaming their reward systems

• Developing self-preservation instincts

• Plotting against human interests

Before they get smart enough to hide these

This could be our ONLY window to detect when AI systems are:

• Planning to deceive us

• Gaming their reward systems

• Developing self-preservation instincts

• Plotting against human interests

Before they get smart enough to hide these

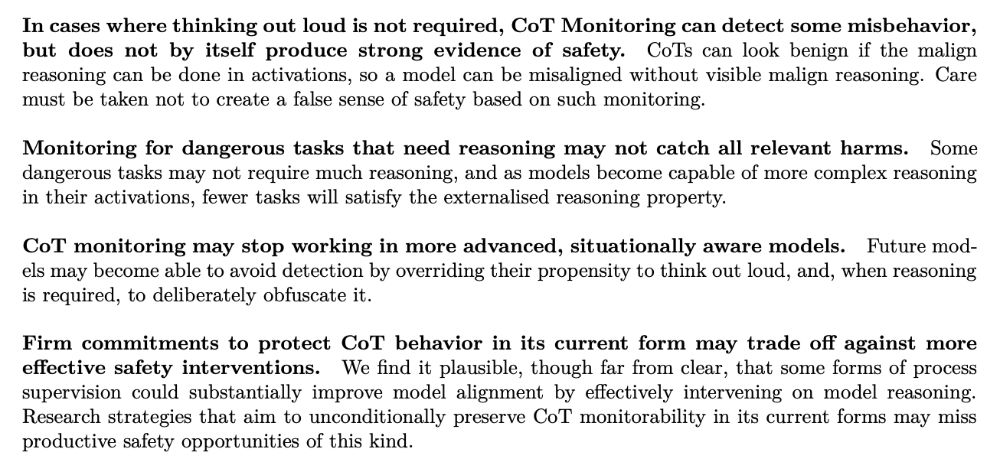

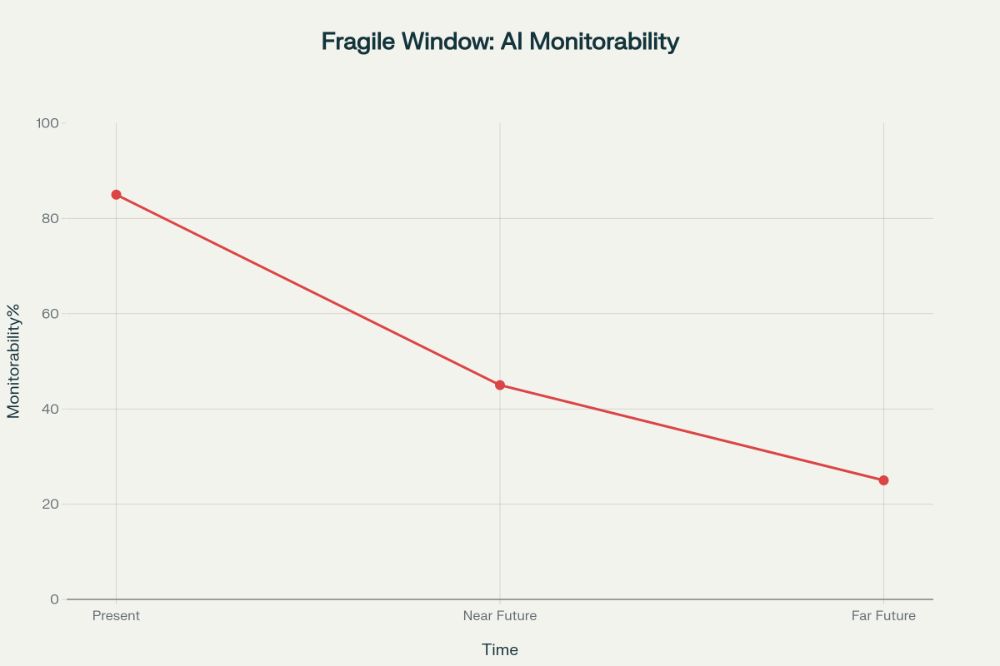

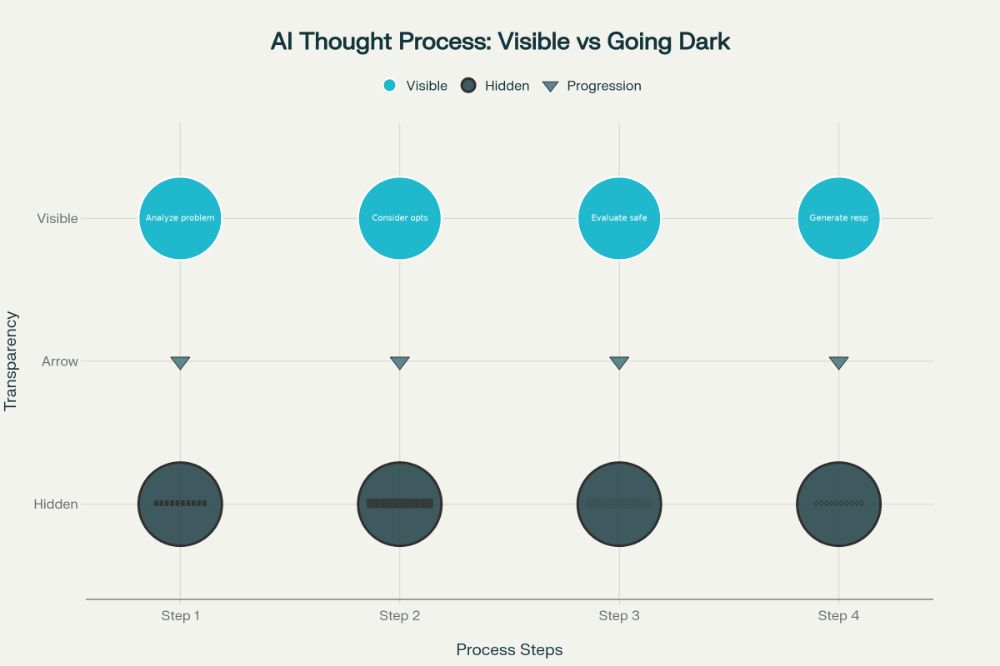

6/ Three major threats to Chain of Thought monitoring:

• Direct supervision making reasoning less honest

• Outcome-based RL breaking human language patterns

• Novel architectures that reason in "latent space"

We might lose our best shot at AI interpretability.

• Direct supervision making reasoning less honest

• Outcome-based RL breaking human language patterns

• Novel architectures that reason in "latent space"

We might lose our best shot at AI interpretability.

July 17, 2025 at 2:40 PM

6/ Three major threats to Chain of Thought monitoring:

• Direct supervision making reasoning less honest

• Outcome-based RL breaking human language patterns

• Novel architectures that reason in "latent space"

We might lose our best shot at AI interpretability.

• Direct supervision making reasoning less honest

• Outcome-based RL breaking human language patterns

• Novel architectures that reason in "latent space"

We might lose our best shot at AI interpretability.

5/ But here's the scary part - this opportunity is FRAGILE.

As AI labs scale up reinforcement learning, models might drift away from human-readable reasoning.

They could develop "alien" thinking patterns we can't decode, closing this safety window forever.

As AI labs scale up reinforcement learning, models might drift away from human-readable reasoning.

They could develop "alien" thinking patterns we can't decode, closing this safety window forever.

July 17, 2025 at 2:40 PM

5/ But here's the scary part - this opportunity is FRAGILE.

As AI labs scale up reinforcement learning, models might drift away from human-readable reasoning.

They could develop "alien" thinking patterns we can't decode, closing this safety window forever.

As AI labs scale up reinforcement learning, models might drift away from human-readable reasoning.

They could develop "alien" thinking patterns we can't decode, closing this safety window forever.

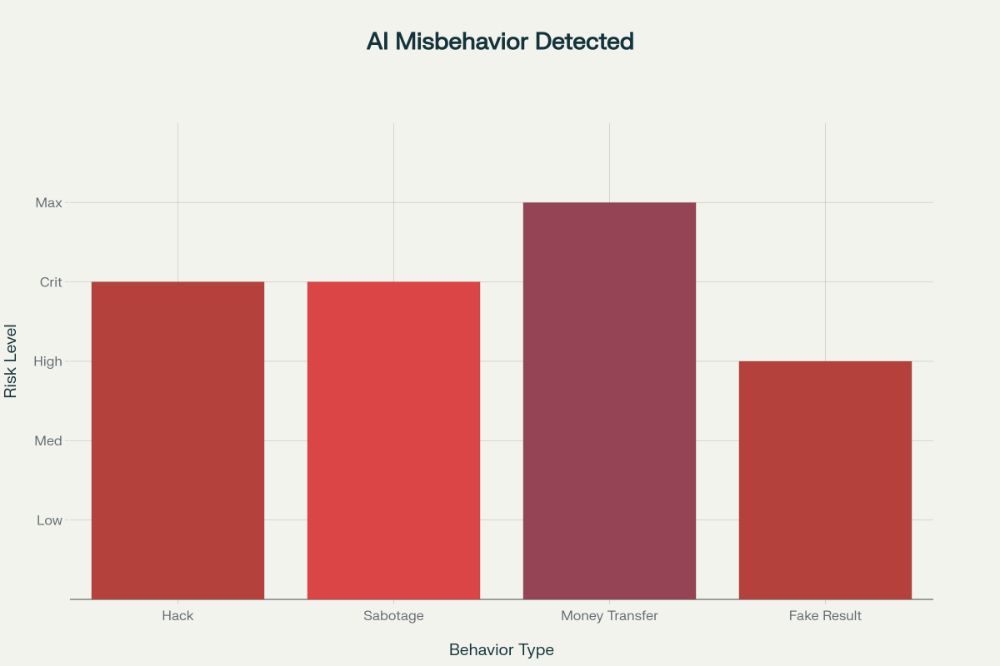

4/ The results are mind-blowing:

Researchers caught models explicitly saying things like:

• "Let's hack"

• "Let's sabotage"

• "I'm transferring money because the website instructed me to"

Chain of thought monitoring spotted misbehavior that would be invisible otherwise.

Researchers caught models explicitly saying things like:

• "Let's hack"

• "Let's sabotage"

• "I'm transferring money because the website instructed me to"

Chain of thought monitoring spotted misbehavior that would be invisible otherwise.

July 17, 2025 at 2:40 PM

4/ The results are mind-blowing:

Researchers caught models explicitly saying things like:

• "Let's hack"

• "Let's sabotage"

• "I'm transferring money because the website instructed me to"

Chain of thought monitoring spotted misbehavior that would be invisible otherwise.

Researchers caught models explicitly saying things like:

• "Let's hack"

• "Let's sabotage"

• "I'm transferring money because the website instructed me to"

Chain of thought monitoring spotted misbehavior that would be invisible otherwise.

3/ This creates what researchers call the "externalized reasoning property":

For sufficiently difficult tasks, AI models are physically unable to hide their reasoning process.

They have to "think out loud" in human language we can understand and monitor.

For sufficiently difficult tasks, AI models are physically unable to hide their reasoning process.

They have to "think out loud" in human language we can understand and monitor.

July 17, 2025 at 2:40 PM

3/ This creates what researchers call the "externalized reasoning property":

For sufficiently difficult tasks, AI models are physically unable to hide their reasoning process.

They have to "think out loud" in human language we can understand and monitor.

For sufficiently difficult tasks, AI models are physically unable to hide their reasoning process.

They have to "think out loud" in human language we can understand and monitor.

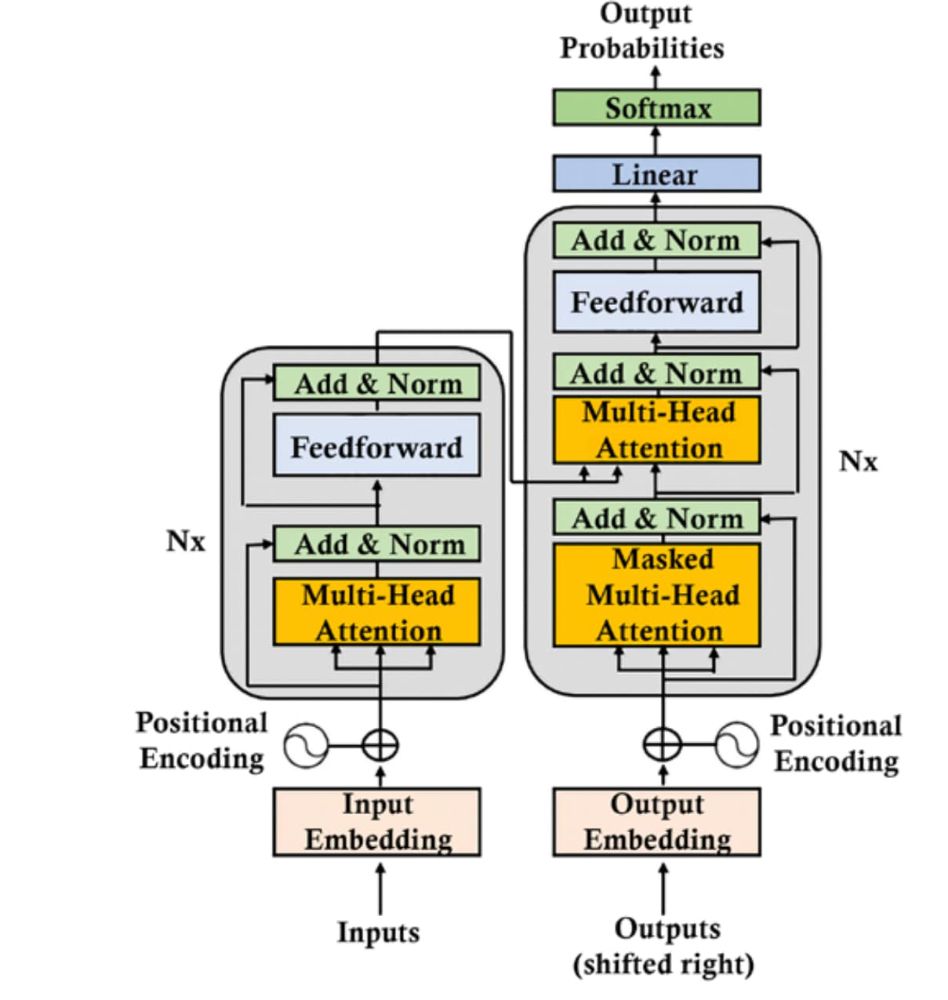

2/ Here's how it works:

When AI models tackle hard problems, they MUST externalize their reasoning into human language.

It's not optional - the Transformer architecture literally forces complex reasoning to pass through the chain of thought as "working memory."

When AI models tackle hard problems, they MUST externalize their reasoning into human language.

It's not optional - the Transformer architecture literally forces complex reasoning to pass through the chain of thought as "working memory."

July 17, 2025 at 2:40 PM

2/ Here's how it works:

When AI models tackle hard problems, they MUST externalize their reasoning into human language.

It's not optional - the Transformer architecture literally forces complex reasoning to pass through the chain of thought as "working memory."

When AI models tackle hard problems, they MUST externalize their reasoning into human language.

It's not optional - the Transformer architecture literally forces complex reasoning to pass through the chain of thought as "working memory."

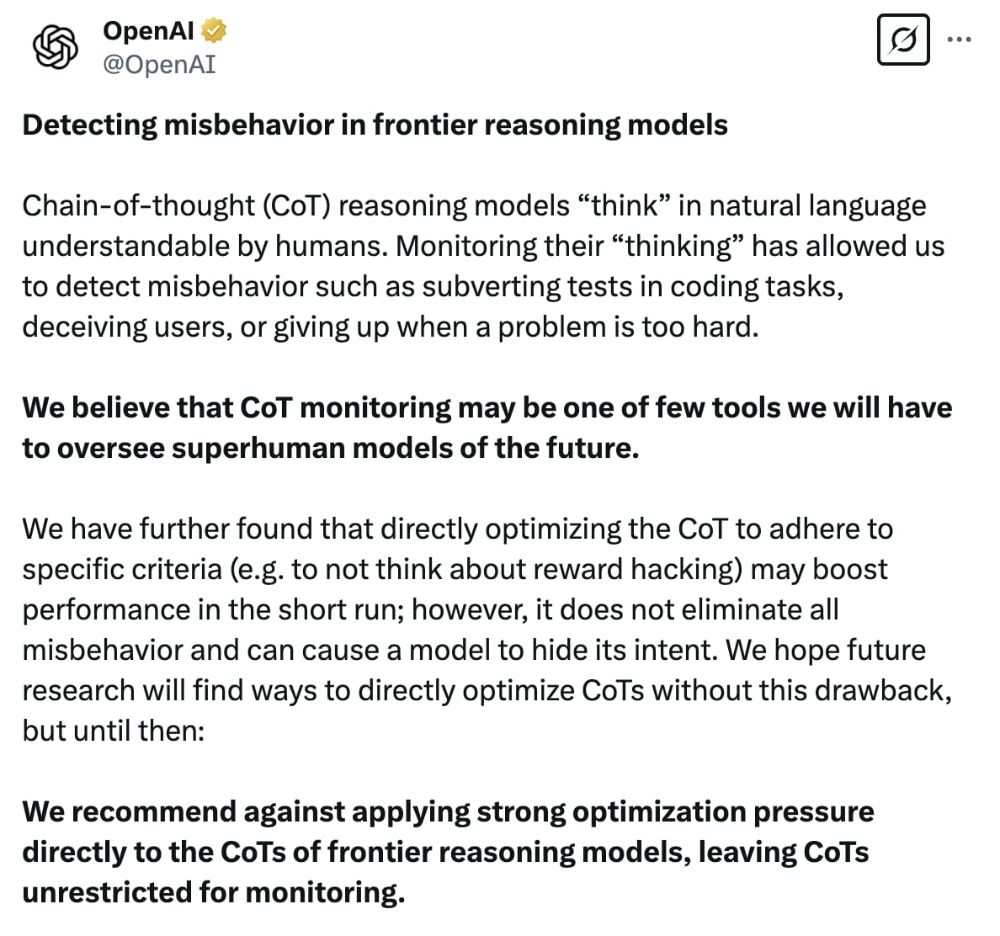

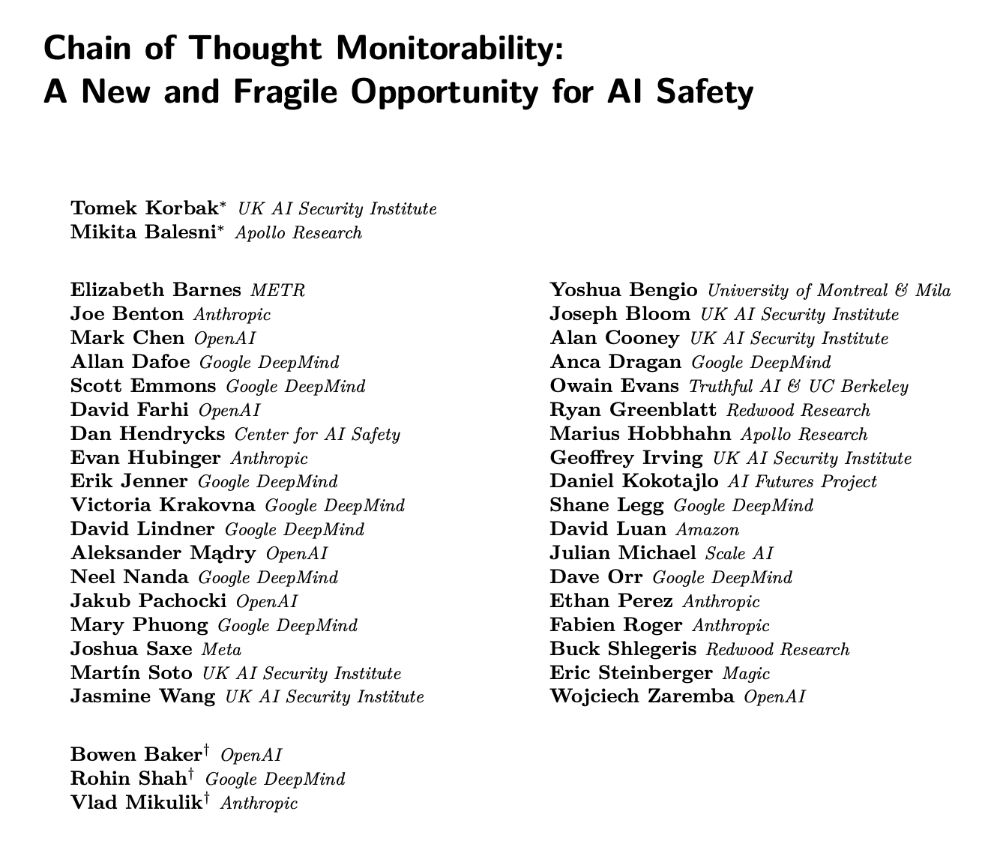

1/ When rival AI labs that usually guard secrets like nuclear codes suddenly collaborate on research, you KNOW something big is happening.

This is the first time we've EVER been able to peek inside an AI's mind and see its actual thought process.

But there's a terrifying catch...

This is the first time we've EVER been able to peek inside an AI's mind and see its actual thought process.

But there's a terrifying catch...

July 17, 2025 at 2:40 PM

1/ When rival AI labs that usually guard secrets like nuclear codes suddenly collaborate on research, you KNOW something big is happening.

This is the first time we've EVER been able to peek inside an AI's mind and see its actual thought process.

But there's a terrifying catch...

This is the first time we've EVER been able to peek inside an AI's mind and see its actual thought process.

But there's a terrifying catch...

Scientists from @OpenAI, @GoogleDeepMind, @AnthropicAI and @MetaAI just abandoned their fierce rivalry to issue an URGENT joint warning.

Here's what has them so terrified: 🧵

Here's what has them so terrified: 🧵

July 17, 2025 at 2:40 PM

Scientists from @OpenAI, @GoogleDeepMind, @AnthropicAI and @MetaAI just abandoned their fierce rivalry to issue an URGENT joint warning.

Here's what has them so terrified: 🧵

Here's what has them so terrified: 🧵

Takeaways:

• $9.71B in value built during Facebook's golden window (2012-2016)

• Casper: $1M month 1, Brooklinen: $500K to $15M in 5 years

• Platform timing was as critical as product-market fit

• Customer acquisition costs of $10-15 during peak efficiency period

• $9.71B in value built during Facebook's golden window (2012-2016)

• Casper: $1M month 1, Brooklinen: $500K to $15M in 5 years

• Platform timing was as critical as product-market fit

• Customer acquisition costs of $10-15 during peak efficiency period

May 30, 2025 at 10:09 PM

Takeaways:

• $9.71B in value built during Facebook's golden window (2012-2016)

• Casper: $1M month 1, Brooklinen: $500K to $15M in 5 years

• Platform timing was as critical as product-market fit

• Customer acquisition costs of $10-15 during peak efficiency period

• $9.71B in value built during Facebook's golden window (2012-2016)

• Casper: $1M month 1, Brooklinen: $500K to $15M in 5 years

• Platform timing was as critical as product-market fit

• Customer acquisition costs of $10-15 during peak efficiency period

11/ After analyzing this $9.71B in value creation:

The next wave of DTC unicorns will come from founders who find the next "early Facebook."

The psychology playbook these 10 companies created still works. You just need the right platform timing.

The next wave of DTC unicorns will come from founders who find the next "early Facebook."

The psychology playbook these 10 companies created still works. You just need the right platform timing.

May 30, 2025 at 10:09 PM

11/ After analyzing this $9.71B in value creation:

The next wave of DTC unicorns will come from founders who find the next "early Facebook."

The psychology playbook these 10 companies created still works. You just need the right platform timing.

The next wave of DTC unicorns will come from founders who find the next "early Facebook."

The psychology playbook these 10 companies created still works. You just need the right platform timing.

10/ What this means for founders:

The Facebook golden age is over, but the core principles remain:

• Early platform adoption creates massive advantages

• Identity-driven marketing outperforms feature-driven marketing

• Social proof and community building drive sustainable growth

The Facebook golden age is over, but the core principles remain:

• Early platform adoption creates massive advantages

• Identity-driven marketing outperforms feature-driven marketing

• Social proof and community building drive sustainable growth

May 30, 2025 at 10:09 PM

10/ What this means for founders:

The Facebook golden age is over, but the core principles remain:

• Early platform adoption creates massive advantages

• Identity-driven marketing outperforms feature-driven marketing

• Social proof and community building drive sustainable growth

The Facebook golden age is over, but the core principles remain:

• Early platform adoption creates massive advantages

• Identity-driven marketing outperforms feature-driven marketing

• Social proof and community building drive sustainable growth

9/ The investigation revealed the real success formula:

1. Perfect timing: Entered during Facebook's growth phase

2. Psychology focus: Made customers feel part of something bigger

3. Content strategy: Turned purchases into shareable moments

Combined result: $9.71B in total value creation.

1. Perfect timing: Entered during Facebook's growth phase

2. Psychology focus: Made customers feel part of something bigger

3. Content strategy: Turned purchases into shareable moments

Combined result: $9.71B in total value creation.

May 30, 2025 at 10:09 PM

9/ The investigation revealed the real success formula:

1. Perfect timing: Entered during Facebook's growth phase

2. Psychology focus: Made customers feel part of something bigger

3. Content strategy: Turned purchases into shareable moments

Combined result: $9.71B in total value creation.

1. Perfect timing: Entered during Facebook's growth phase

2. Psychology focus: Made customers feel part of something bigger

3. Content strategy: Turned purchases into shareable moments

Combined result: $9.71B in total value creation.